Data topics

Select a topic to explore related insights and resources.

Related articles

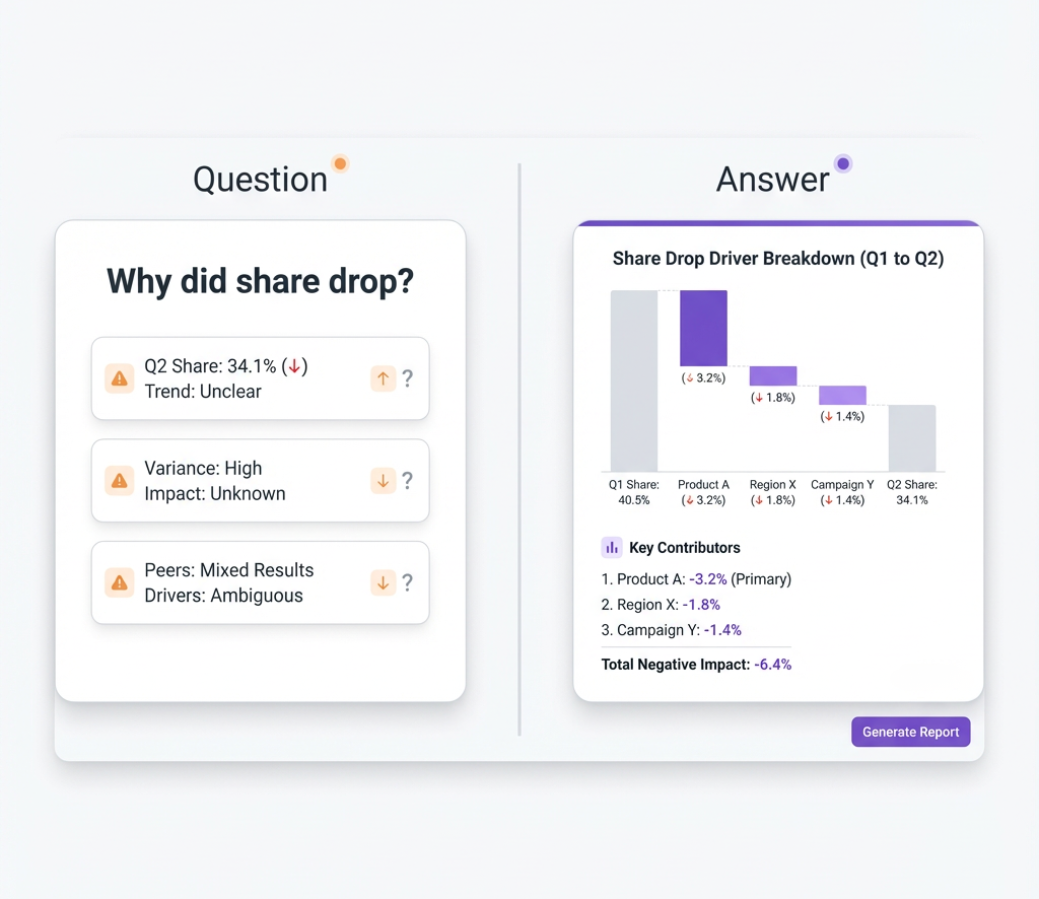

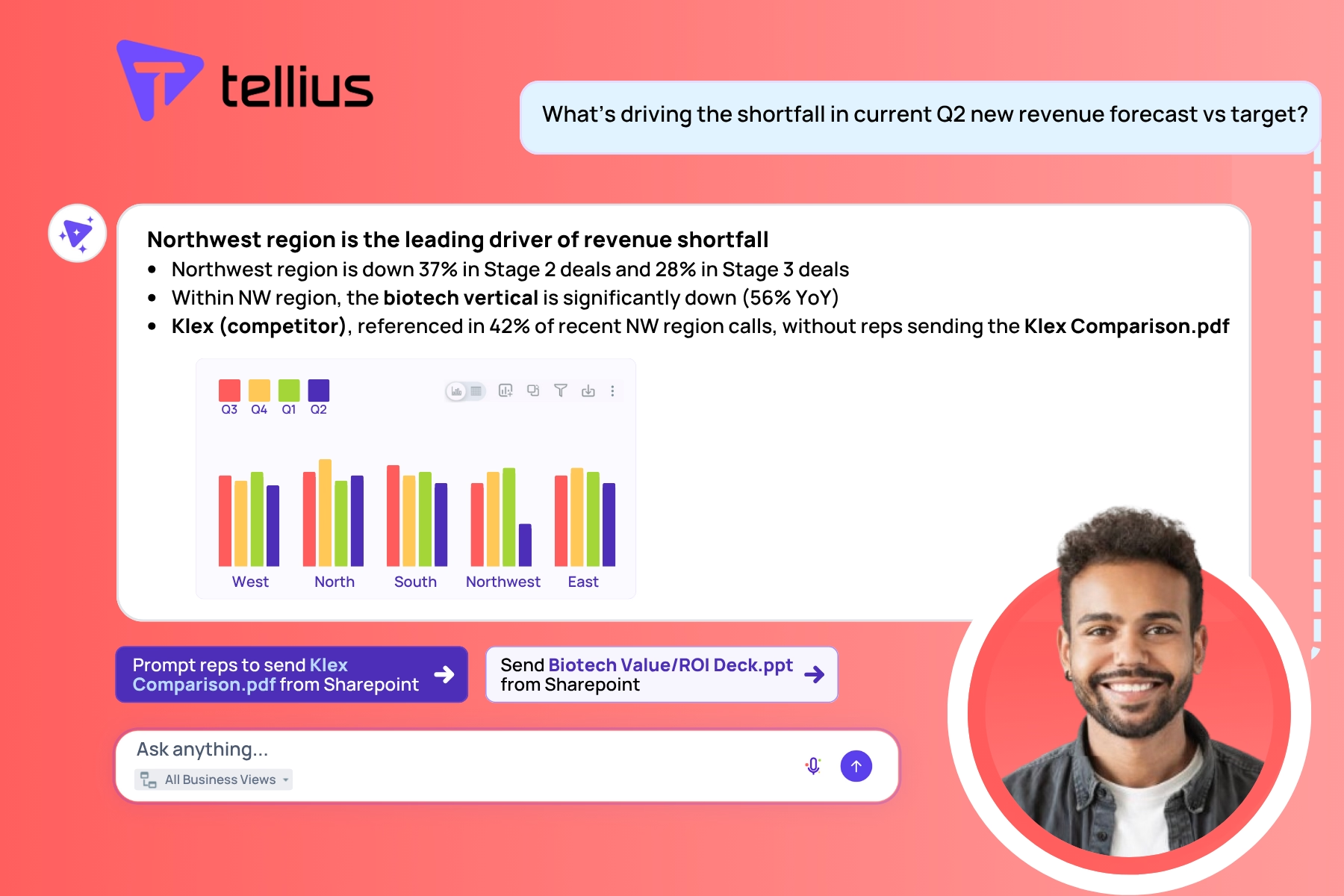

See how Kaiya helps Customer Success teams spot real renewal risk by reading what customers say—not just what dashboards show. Combine usage trends, support friction, and unstructured conversations into one clear renewal risk signal across your portfolio.

Learn the 3 must-haves you need to transform your modern data analytics stack, go beyond traditional dashboarding, and get advanced insights to everyone who needs them.

Watch industry experts from ZS discuss key lessons learned in 2023 and ways to stay ahead of the curve in pharma commercial execution in 2024.

Watch this on-demand webinar to learn how financial institutions and fintech companies thrive by efficiently capturing new opportunities, balancing risk, and strengthening customer relationships with decision intelligence.

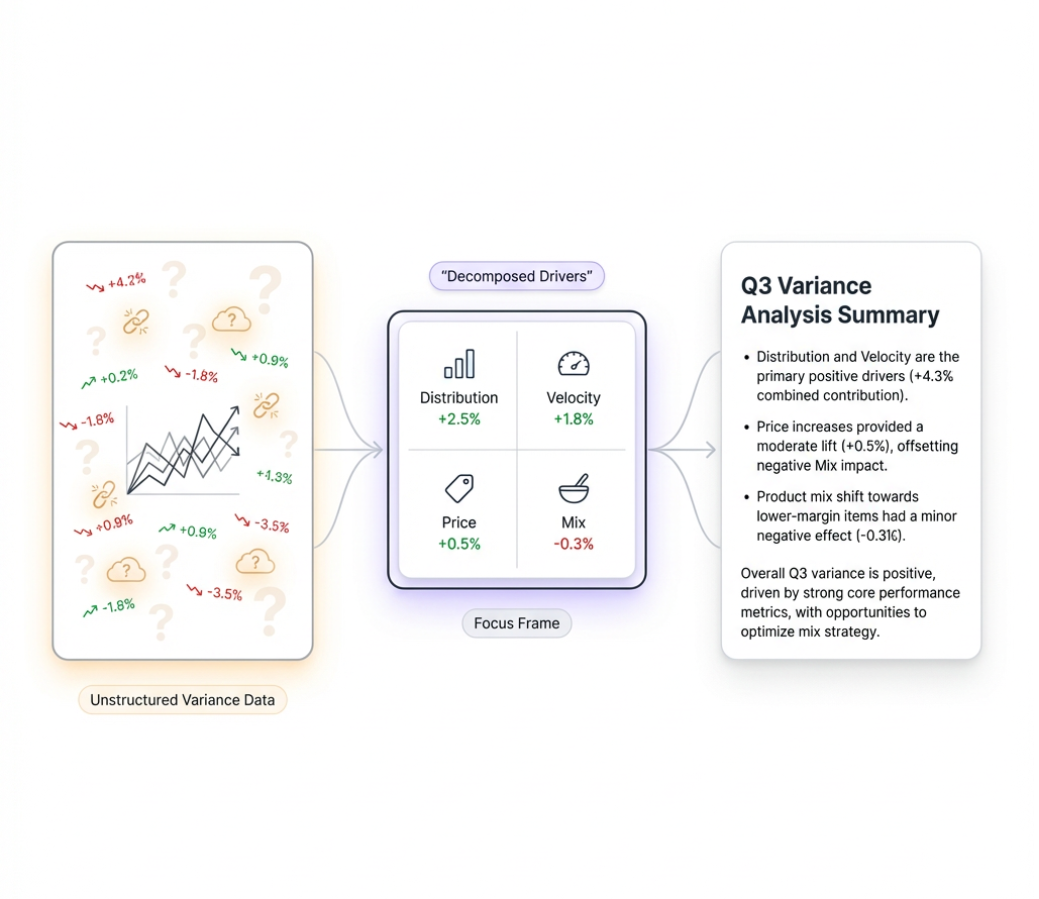

Join M2E and Tellius as we dive into the issues facing CPG organizations and explore how AI analytics can help. AI-powered analytics helps drive efficiency so you can spend more time taking action to drive performance.

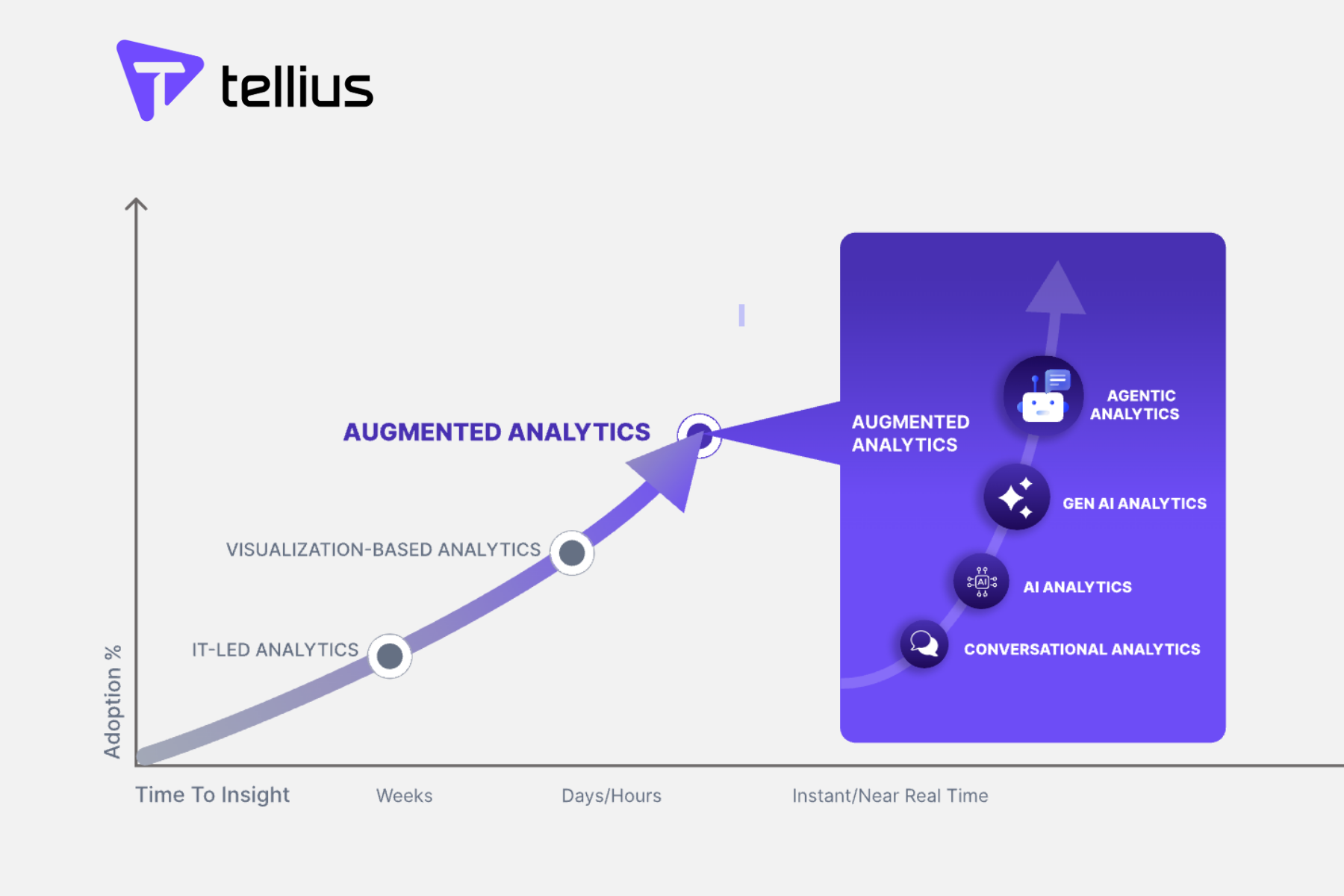

Every company is on a journey to become more data-driven. Learn how to transform data culture with AI-powered analytics, intelligent UX, and machine learning automation.

Learn how modern cloud analytics delivers a superior user experience to business and data teams, augments data intelligence with AI automation, and scales elastically, regardless of the number of users or data volume.

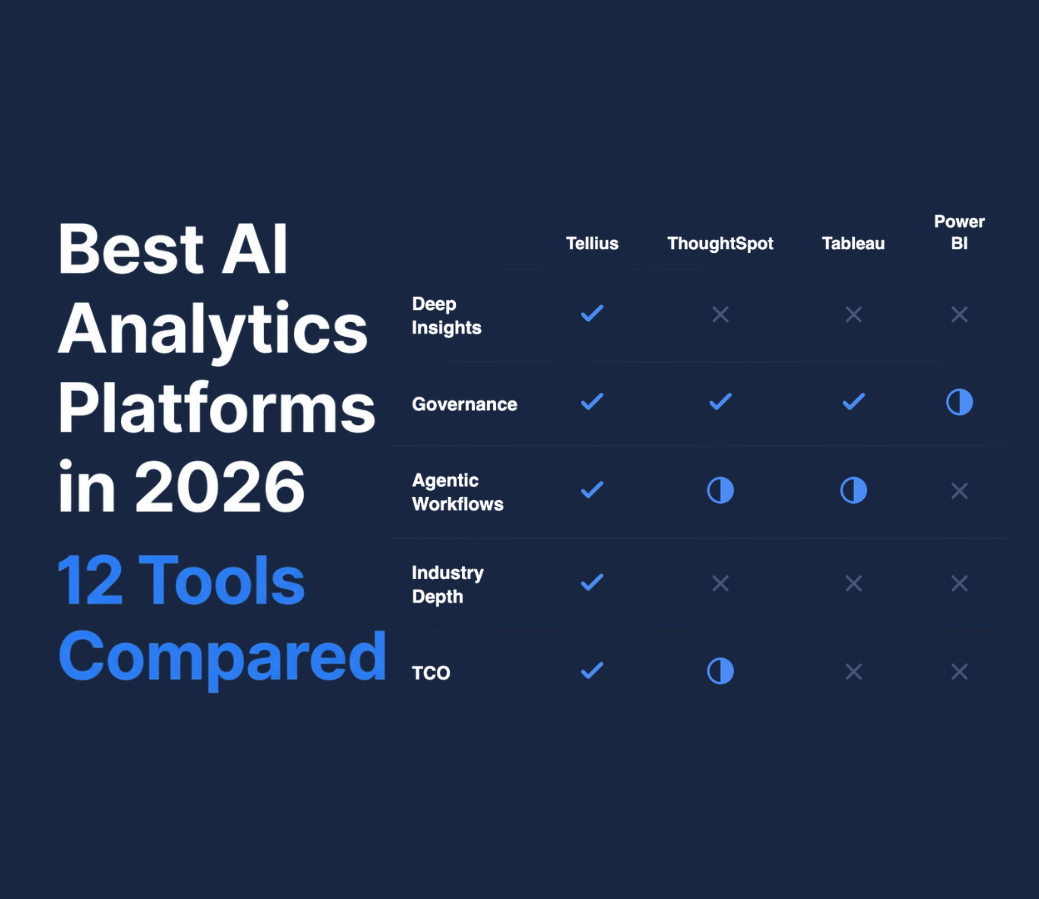

AI can seemingly revolutionize practically everything, including data and analytics. But all this buzz makes it hard for analytics teams to identify the true potential of AI analytics. Explore misconceptions and best practices for implementing AI analytics in your business.

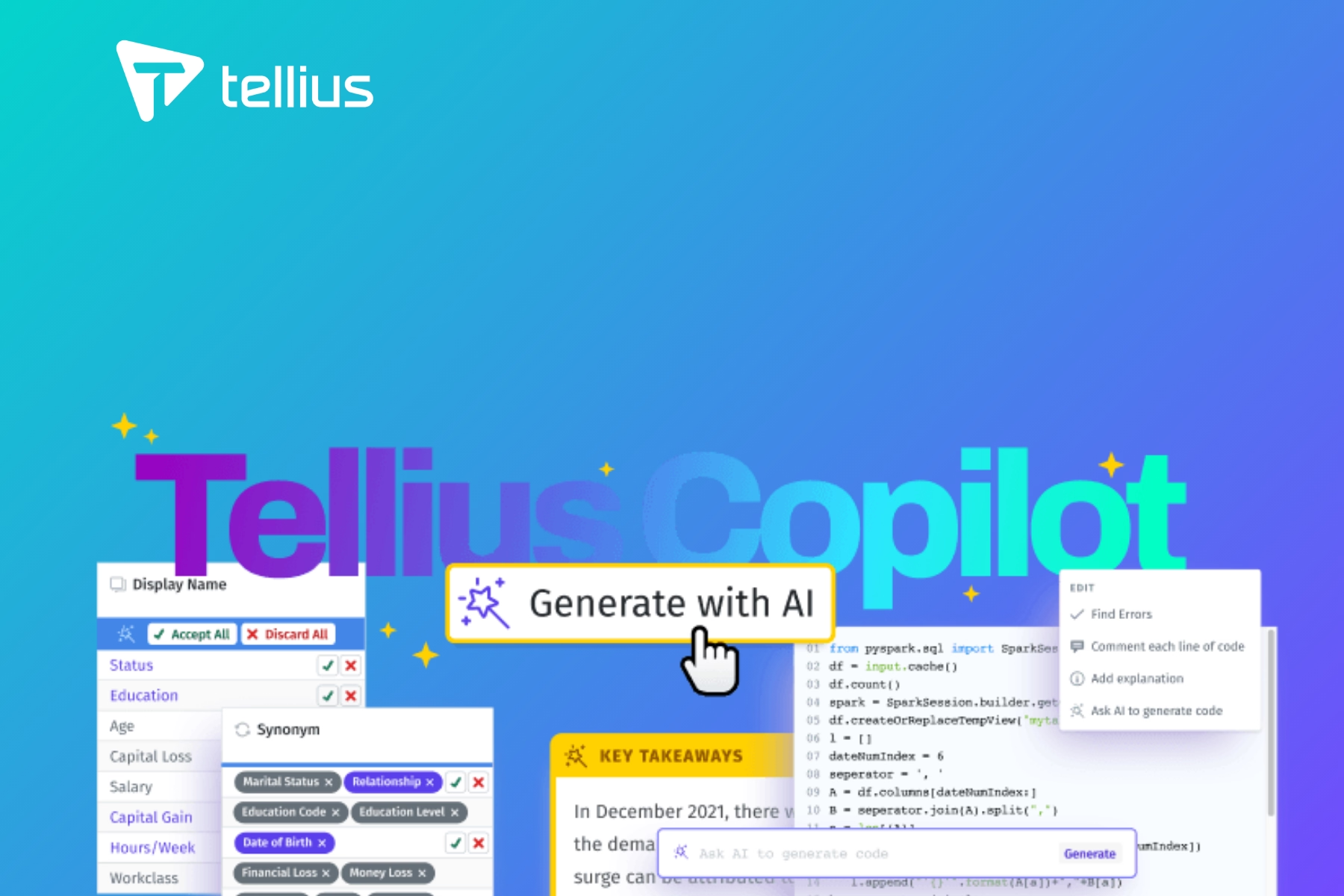

Watch us as we unveil our groundbreaking GenAI product, Tellius Kaiya. See how Kaiya enables your organization to move from business questions to answers faster than ever before.

With the Tellius decision intelligence platform alongside Snowflake's cloud data platform, organizations have both an easy way to store, manage, and share data, and the fastest way to generate instant, intelligent insights from that data.

Learn how business and analytics teams gain newfound power to answer critical data questions in areas such as marketing, supply chain, and operations with a unified approach to data preparation, self-service analytics, insights generation, and machine learning.

Unlocking your data's potential through self-service analytics is powerful but tricky. Tools that were supposed to make things easier still take too much time and have too steep a learning curve, and old habits die hard. Learn how AI can help.

Tellius 4.2 contains 40+ new features and enhancements to help customers get faster real-time insights from live data, create interactive data experiences, and drive easier onboarding and user adoption.

With Tellius 5.0, learn how embracing GenAI within your analytics processes can keep you ahead, agile, and adaptive in a competitive landscape. Watch this webinar to learn more.
