Best AI Analytics Platforms in 2026: 12 Tools Compared for Deep Insights, Governance, and Agentic Capabilities

An AI analytics platform is a software system that uses artificial intelligence — including natural language processing, machine learning, and autonomous AI agents — to analyze enterprise data, surface insights, and automate analytical workflows without requiring users to write code or build dashboards manually. Unlike traditional business intelligence tools that visualize data for human interpretation, AI analytics platforms perform the interpretation themselves: decomposing metric changes into ranked contributing factors, detecting anomalies proactively, and delivering finished insights and recommendations. The category ranges from conversational Q&A interfaces on data warehouses to fully agentic systems that autonomously investigate and explain business performance changes.

The category is moving fast. Gartner predicts that 40% of enterprise applications will integrate task-specific AI agents by the end of 2026, up from less than 5% in 2025. But "AI analytics" now describes everything from a chatbot on top of a data warehouse to a fully autonomous insight engine. The differences matter, and most comparison guides gloss over them.

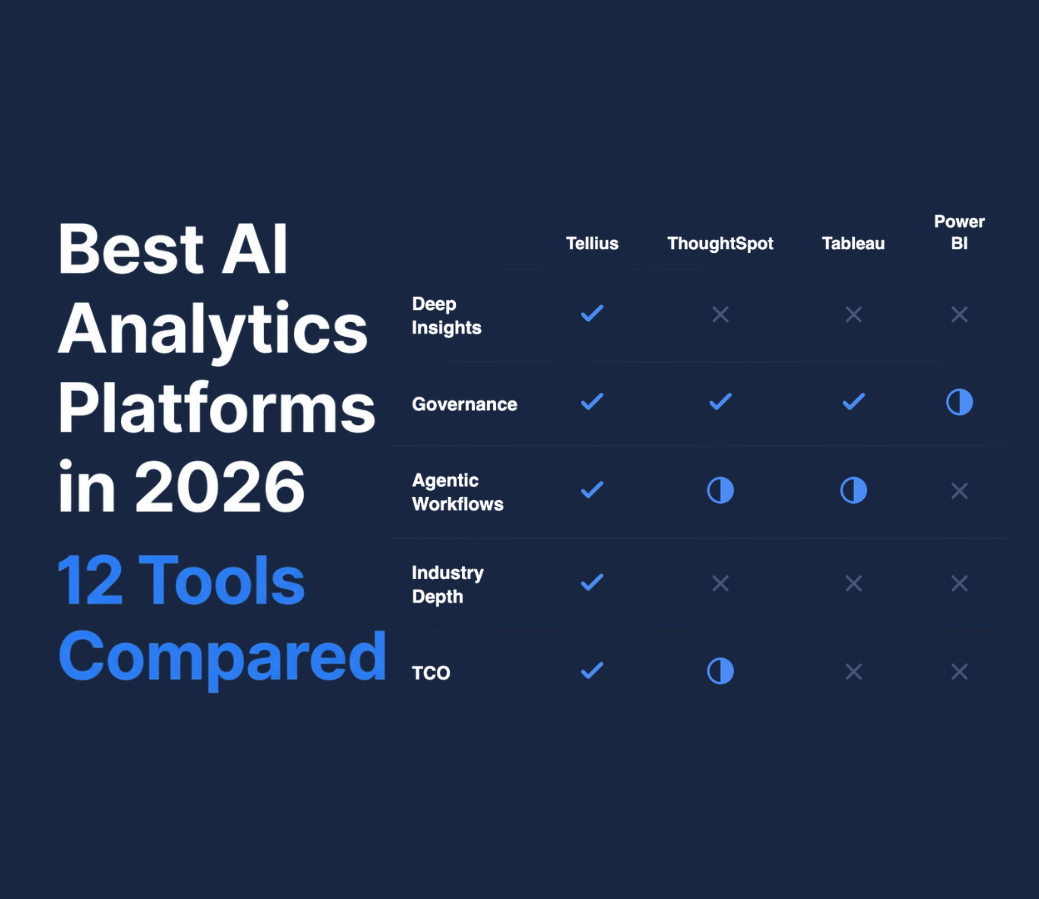

This guide evaluates 12 platforms across what actually separates useful AI analytics from marketing claims: conversational analytics depth, automated root cause analysis, proactive monitoring, governance and consistency, persistent knowledge and context, industry specificity, agentic workflow orchestration, and total cost of ownership. We tested, researched, and compared each platform against a consistent evaluation framework, and we're transparent about our methodology and our biases (see Methodology and Disclosure).

Key Takeaways

Tellius is best for enterprise analytics teams in pharma, CPG, finance, and B2B RevOps that need both governed conversational analytics for on-demand questions and agentic intelligence for automated root cause decomposition, proactive KPI monitoring, persistent business context, and governed insight delivery through orchestrated workflows.

ThoughtSpot is best for organizations that want powerful search-based self-service analytics across clean, well-modeled warehouse data, particularly when the primary goal is letting business users explore data independently.

Tableau Next is best for organizations already deep in the Salesforce ecosystem that want AI-augmented visualization with strong brand recognition and extensive community support.

Power BI + Copilot is best for Microsoft-standardized enterprises that need cost-effective reporting and visualization with emerging AI assistance, particularly when the analytics stack must integrate tightly with Excel, Teams, and Azure.

Snowflake Cortex is best for organizations with data already consolidated in Snowflake that want native natural language querying without adding a separate analytics tool — and where root cause investigation will be done by human analysts.

What Separates an AI Analytics Platform from an AI Chatbot on a Data Warehouse

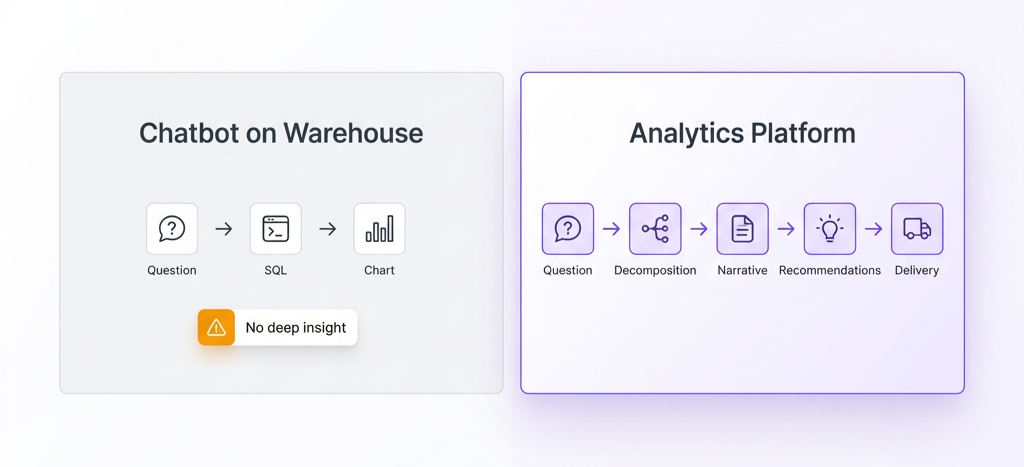

Most platforms marketed as "AI analytics" deliver one thing well: conversational analytics — a user types a question in natural language, the system generates SQL, runs it, and returns a chart. This is genuinely valuable when backed by a governed semantic layer, and it's the table-stakes capability for the category. ThoughtSpot and Tellius both do this well.

But conversational analytics alone means every question starts from zero. There's no memory of what was asked before, no accumulated understanding of the business, and no ability to chain analytical steps into a connected investigation. The question is answered, but the analysis isn't done.

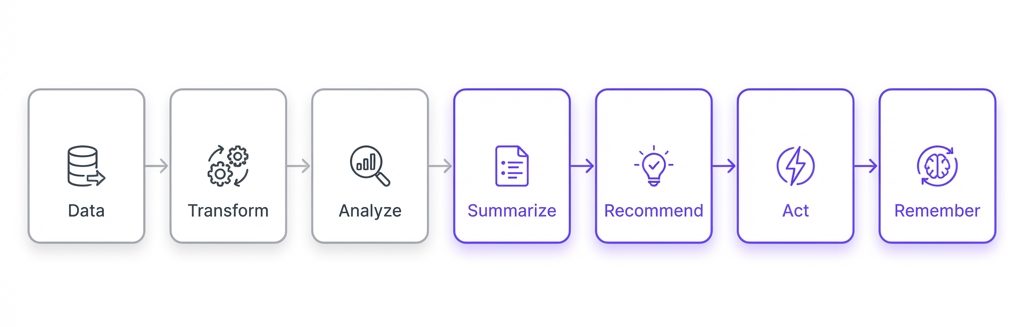

The platforms that actually perform analysis — rather than just retrieve data — build on top of conversational Q&A with four additional architectural capabilities:

Knowledge. The system maintains a governed understanding of your business: metric definitions, calculation logic, domain-specific terminology, hierarchies, fiscal calendars, and business rules. When a pharma commercial team asks about "market share," the platform already knows whether that means TRx share, NBRx share, or payer-level share — and applies the right calculation without being told. This isn't a glossary. It's an embedded business intelligence layer that ensures every analysis uses the same definitions, every time, for every user.
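To make the idea of a governed knowledge layer concrete, here is a minimal sketch of a metric registry that resolves a business term to a single definition. It is not any vendor's implementation: the names `MetricDefinition`, `REGISTRY`, `SYNONYMS`, and `resolve_metric`, the team labels, and the column names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    name: str          # canonical metric name
    expression: str    # governed calculation, written once
    description: str


# Hypothetical registry: the column names are illustrative only.
REGISTRY = {
    "trx_share": MetricDefinition(
        "trx_share",
        "SUM(brand_trx) / SUM(market_trx)",
        "Share of total prescriptions",
    ),
    "nbrx_share": MetricDefinition(
        "nbrx_share",
        "SUM(brand_nbrx) / SUM(market_nbrx)",
        "Share of new-to-brand prescriptions",
    ),
}

# A business term maps to one canonical metric per team, so "market share"
# does not depend on how an individual user phrases the question.
SYNONYMS = {
    ("market share", "brand_team"): "trx_share",
    ("market share", "launch_team"): "nbrx_share",
}


def resolve_metric(term: str, team: str) -> MetricDefinition:
    """Return the governed definition for a business term, or fail loudly."""
    key = (term.strip().lower(), team)
    if key not in SYNONYMS:
        raise KeyError(f"No governed definition for {term!r} (team={team})")
    return REGISTRY[SYNONYMS[key]]
```

The design choice worth noting is that an unknown term raises an error instead of falling back to a guess, which is what keeps answers consistent across users.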

Context. The system remembers what happened before — within a conversation, across conversations, and across the organization. When a user asks "now break that out by territory," the platform knows what "that" refers to. When an AI agent detects a TRx anomaly on Monday, it connects that finding to the payer access change it flagged two weeks ago. Context transforms isolated queries into connected investigations where each step builds on the last.
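As a rough illustration of how a follow-up such as "now break that out by territory" can be resolved, the sketch below keeps a history of structured query specs and derives the follow-up from the most recent one. `QuerySpec` and `ConversationContext` are hypothetical names, and a real platform would track far more state than this.

```python
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class QuerySpec:
    metric: str
    filters: tuple = ()    # e.g. (("region", "Southeast"),)
    group_by: tuple = ()


@dataclass
class ConversationContext:
    history: list = field(default_factory=list)

    def ask(self, spec: QuerySpec) -> QuerySpec:
        """Record a fully specified question."""
        self.history.append(spec)
        return spec

    def break_out_by(self, dimension: str) -> QuerySpec:
        """Resolve "that" to the previous question and add a grouping to it."""
        if not self.history:
            raise ValueError("No earlier question to refer back to")
        previous = self.history[-1]
        follow_up = replace(previous, group_by=previous.group_by + (dimension,))
        self.history.append(follow_up)
        return follow_up
```

The follow-up inherits the metric and filters of the earlier question, so the user only has to state what changes.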

Agentic Workflows. The system executes multi-step analytical pipelines autonomously — not single queries. A workflow might ingest new data, apply transformations, run root cause decomposition, generate a summary narrative, produce recommendations, and deliver a finished report — all from a single trigger or schedule. This is the difference between asking one question at a time and deploying an analytical process that runs itself.

Orchestration. The system coordinates multiple AI agents, data sources, and analytical steps into coherent end-to-end pipelines. One agent monitors KPIs. Another investigates anomalies when they're detected. A third generates the executive summary. A fourth routes the finished insight to the right stakeholder. Orchestration is what makes the difference between "AI that answers questions" and "AI that does the analytical work."
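The hand-off between agents described above can be sketched as a chain of plain functions. This is a toy under stated assumptions, not a description of how any product works: the function names, the 10% threshold, and the dictionary payload are all hypothetical, and the investigation step is a placeholder.

```python
from typing import Optional


def monitor(kpis: dict, threshold: float = 0.10) -> Optional[dict]:
    """Flag the first KPI whose relative change exceeds the threshold.

    `kpis` maps a KPI name to (previous, current); previous must be nonzero.
    """
    for name, (previous, current) in kpis.items():
        change = (current - previous) / previous
        if abs(change) > threshold:
            return {"kpi": name, "change": change}
    return None


def investigate(finding: dict) -> dict:
    # Placeholder: a real investigation agent would decompose the change.
    return {**finding, "drivers": []}


def narrate(finding: dict) -> dict:
    summary = f"{finding['kpi']} moved {finding['change']:+.0%}"
    return {**finding, "summary": summary}


def route(finding: dict) -> dict:
    # Hypothetical routing rule: send to whoever owns the KPI.
    return {**finding, "recipient": f"owner-of-{finding['kpi']}"}


def run_pipeline(kpis: dict, steps=(investigate, narrate, route)) -> Optional[dict]:
    """Run every downstream agent only when the monitor flags something."""
    finding = monitor(kpis)
    if finding is None:
        return None
    for step in steps:
        finding = step(finding)
    return finding
```

Because each step takes and returns the same payload, every intermediate result can be logged, which is one simple way to keep such a pipeline auditable.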

The simplest way to evaluate any AI analytics platform: Start with conversational analytics. Can business users ask questions and get governed answers? Then ask the harder question: does it operate as connected pipelines, or does every query stand alone?

The Deep Insights Gap: The Category's Defining Differentiator

If there is one capability that separates AI analytics platforms from AI-assisted BI tools, it's automated deep insights — the ability to not just show you what changed, but autonomously investigate why, rank the contributing factors, generate executive summaries, surface related trends, and deliver finished recommendations. When a KPI changes, can the platform deliver a complete analytical narrative — without a human analyst spending days decomposing the change manually?

Of the 12 platforms evaluated, only Tellius offers full automated deep insights: root cause decomposition with quantified driver ranking, trend explanations, anomaly context, executive summaries, and actionable recommendations — all generated from a single question or proactive alert. Every other platform shows you what changed and relies on humans to figure out why, assemble the narrative, and write the recommendations. This isn't a minor feature gap — it's the difference between a tool that answers questions and a system that performs complete analysis.

For context: in a typical enterprise analytics team, root cause investigation takes 3-5 business days per question. A pharmaceutical brand team tracking 20+ KPIs across territories, payers, and HCP segments can generate dozens of root cause questions per week. The bottleneck isn't data access — it's the analytical labor required to decompose metric changes into contributing factors, rank them, and explain them to stakeholders.
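To show what "decomposing a metric change into contributing factors" means in its simplest form, the sketch below ranks segments by their share of the total change in an additive metric. This is a basic additive breakdown chosen for illustration, not the method any platform in this guide uses, and `rank_drivers` and the region figures are hypothetical.

```python
def rank_drivers(before: dict, after: dict) -> list:
    """Rank segments by their share of the total change in an additive metric.

    Returns (segment, delta, share_of_total_change) tuples, largest move first.
    """
    segments = set(before) | set(after)
    deltas = {s: after.get(s, 0.0) - before.get(s, 0.0) for s in segments}
    total = sum(deltas.values())
    if total == 0:
        raise ValueError("Total did not change; contribution shares are undefined")
    ranked = sorted(deltas.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return [(segment, delta, delta / total) for segment, delta in ranked]


# Made-up numbers for illustration only.
before = {"Region X": 500, "Region Y": 300, "Region Z": 200}
after = {"Region X": 458, "Region Y": 270, "Region Z": 172}
drivers = rank_drivers(before, after)
```

With these made-up figures the total falls by 100 and Region X accounts for 0.42 of that change. Real investigations repeat this across many dimensions and must handle ratio metrics and mix effects, which is where the analyst time goes.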

Four levels of AI analytics maturity:

Level 1: Answer questions. Conversational analytics — users ask in natural language, the platform returns governed answers. This is table stakes for the category and where most platforms perform well. ThoughtSpot and Tellius both excel here.

Level 2: Show what changed. Most platforms stop here — natural language query → SQL → chart. The user sees that revenue dropped 12% but has to figure out why on their own.

Level 3: Explain why it changed — with deep insights. Automated decomposition into ranked drivers with quantified impact, executive summaries, trend context, and actionable recommendations. The platform tells you that Region X contributed 42% of the decline, driven by payer mix shift in the 25-34 cohort — and delivers a finished narrative with next steps.

Level 4: Alert before you ask. 24/7 monitoring → anomaly detection → deep insight investigation → insight delivery. The platform detects the decline, generates a complete deep insight with persistent context from prior findings, orchestrates the full analytical pipeline, and delivers the finished analysis before anyone opens a dashboard.
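The monitoring-then-investigation flow in Level 4 can be sketched with a simple z-score check that triggers a callback. The z-score rule, the threshold of 3, and the names `is_anomaly` and `check_kpi` are assumptions made for illustration; production systems typically account for seasonality and trend as well.

```python
from statistics import mean, stdev


def is_anomaly(history: list, latest: float, z_threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than z_threshold sample standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        raise ValueError("Need at least two historical points")
    spread = stdev(history)
    if spread == 0:
        # A perfectly flat history: any different value counts as a change.
        return latest != history[0]
    return abs(latest - mean(history)) / spread > z_threshold


def check_kpi(name: str, history: list, latest: float, investigate):
    """Call the investigation callback only when the latest value is anomalous."""
    if is_anomaly(history, latest):
        return investigate(name, latest)
    return None
```

Running `check_kpi` on a schedule for each tracked KPI is the "alert before you ask" pattern in miniature: nothing happens on normal days, and an investigation starts on its own when a value falls outside the expected range.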


How We Evaluated These Platforms

Every platform in this guide was evaluated against eight dimensions that reflect what actually matters when AI analytics moves from a demo to daily use:

1. Conversational Analytics. Can business users ask complex questions in natural language and get accurate, governed answers — without writing SQL, building reports, or submitting requests to an analyst? This is the table-stakes capability for the category, but depth varies dramatically. Some platforms handle simple lookups; others sustain multi-turn investigations where each question builds on the last. The key differentiator: does the conversational layer sit on top of a governed semantic model that ensures consistent answers, or does it generate ad-hoc SQL with no consistency guarantees?

2. Automated Deep Insights. Can the platform autonomously decompose a metric change into ranked contributing factors, generate executive summaries, and surface hidden patterns — or does it just show you the drop and leave investigation to humans? This is the single most important differentiator in the category, and most platforms fail here entirely. Deep insights go beyond root cause analysis: they include trend explanations, driver ranking, anomaly context, recommendations, and finished narratives ready for stakeholder consumption.

3. Proactive Monitoring. Does the platform continuously watch your KPIs and alert you when something meaningful changes? Or does it only work when someone thinks to ask a question? McKinsey research shows that organizations using AI for proactive decision-making achieve measurably better outcomes than those using AI reactively.

4. Governance and Consistency. When five different people ask the same question, do they get the same answer? Does the platform enforce metric definitions, manage access controls, and maintain audit trails? For enterprise analytics, this is non-negotiable.

5. Persistent Knowledge and Context. Does the platform maintain a governed understanding of your business — metric definitions, domain terminology, business rules — and carry context across conversations and investigations? Or does every question start from zero with no memory of what came before?

6. Industry Specificity. Does the platform understand your domain (your data sources, your terminology, your workflows), or is it a general-purpose tool that requires months of custom configuration to become useful? A pharma commercial team needs native understanding of TRx, NBRx, and payer hierarchies. A RevOps team needs native understanding of pipeline stages, win rates, and deal velocity.

7. Agentic Workflow Orchestration. Can AI agents plan and execute multi-step analytical workflows autonomously — chaining data ingestion, transformation, analysis, narrative generation, and delivery into governed pipelines? Or is "agentic" being used as a marketing label for what is essentially a chatbot with SQL generation? Gartner has warned that many vendors engage in "agent washing" — rebranding chatbots as agents without substantive agentic capabilities.

8. Total Cost of Ownership. What does it actually cost to deploy, configure, and maintain — including the analyst time required for setup and ongoing management?

Platform Deep Dives

1. Tellius — Best for Automated Deep Insights and Proactive Insight Delivery

Tellius is an agentic analytics platform purpose-built for pharmaceutical, CPG, finance, and B2B RevOps teams. It combines conversational analytics — where users ask questions in plain English and receive governed, consistent answers — with agentic intelligence, where AI agents monitor KPIs 24/7, detect anomalies, investigate root causes, and deliver finished insights proactively. Unlike generic BI tools or general-purpose AI that require users to interpret results, Tellius explains why metrics changed and ranks contributing factors by quantified impact.

Key capabilities:

- Governed conversational analytics where business users ask complex questions in plain English and get consistent, auditable answers

- Automated deep insights: root cause decomposition with quantified driver ranking (Impact Scores), trend analysis, executive summaries, and actionable recommendations — generated from a single question

- 24/7 KPI monitoring with proactive anomaly detection and alerting

- Governed semantic layer with business glossary, hierarchies, fiscal calendars, and synonym management

- AI Agent Mode that plans and executes multi-step analyses from a single question

- Industry-specific System Packs for pharma (IQVIA, Veeva), CPG (Nielsen, Circana), FP&A, and B2B RevOps (Salesforce, HubSpot, Gong)

- Finished artifact delivery: PowerPoint, Excel, PDF generated automatically

- Pre-built connectors to 30+ data sources including Snowflake, Databricks, BigQuery, and Redshift

What makes this work architecturally:

Tellius operates as connected analytical pipelines, not isolated queries. Four layers make this possible:

A knowledge layer that maintains governed metric definitions, business rules, calculation logic, and domain-specific terminology — so every analysis is grounded in your organization's truth, not generic AI guessing. When Tellius says "net revenue declined 12%," it's using your finance team's definition of net revenue, applied consistently across every user and every agent.

A context layer that carries understanding across interactions. Tellius remembers what was investigated before, what anomalies have been flagged, and what patterns are emerging across time. A root cause finding from last Tuesday informs this week's proactive alert. An analyst's drill-down path becomes institutional knowledge, not a lost chat thread.

Agentic workflows that chain analytical steps into repeatable, schedulable, shareable pipelines. A single workflow can filter data, compute derived metrics, run root cause analysis, generate an executive narrative, and deliver a finished PDF — triggered on schedule or on anomaly detection. These aren't prompt chains. They're governed analytical processes with defined inputs, steps, and outputs.

Orchestration that coordinates multiple agents working in concert. A monitoring agent detects that NBRx dropped in the Southeast. It hands off to an investigation agent that decomposes the drop into payer mix, HCP access, and competitive factors. That agent triggers a narrative agent that produces a stakeholder-ready summary. In RevOps, the same architecture detects pipeline velocity changes, decomposes win-rate shifts by segment, rep, and deal stage, and delivers weekly forecast intelligence without anyone building a report. The entire pipeline runs without human intervention — and every step is auditable.

Where Tellius excels: The combination of automated deep insights, proactive monitoring, and workflow orchestration is where Tellius creates the most distance from competitors. When revenue drops 15%, Tellius doesn't just show you the decline — it generates a complete deep insight: decomposing the change into ranked drivers (e.g., "Region X contributed 42% of the decline, driven by Product Y losing share in the 25-34 cohort"), quantifying each factor's impact, surfacing related trends and anomalies, producing an executive summary with recommendations, and delivering the finished analysis before you ask. No other platform in this comparison performs this level of automated analytical investigation or delivers insights this complete.

Tellius has been recognized as a Gartner Magic Quadrant Visionary four consecutive years (2022-2025) and is trusted by 8 of the top 10 pharmaceutical companies, including Novo Nordisk, AbbVie, and Bristol Myers Squibb.

Where Tellius falls short: Tellius is built for teams that need governed, consistent analytics — which means it's not the right tool for individual analysts exploring one-off CSV files. There's no free tier or self-serve signup — you can't evaluate Tellius without talking to sales, which frustrates teams used to product-led growth tools. The Pro tier makes Tellius accessible to mid-size teams with a streamlined onboarding process. The platform's deepest pre-built intelligence is in pharma, CPG, finance, and RevOps — teams in other verticals can still use the full platform but may need more initial configuration to match their domain. Like any governed analytics platform, there's upfront setup to define your metrics, business rules, and hierarchies in the semantic layer — this is the investment that ensures every user and every AI agent operates from the same source of truth going forward.

Pricing: Two tiers, both with no per-user fees. Pro is designed for mid-size teams that need governed conversational analytics and automated deep insights — with streamlined setup and faster deployment. Enterprise adds agentic workflows, orchestration, proactive monitoring, custom System Packs, SSO/RLS, and dedicated implementation support for large organizations. Custom pricing on both tiers.

Ideal for: Analytics teams at mid-size and large enterprises in pharma, CPG, finance, or B2B RevOps that are drowning in ad-hoc analysis requests and need AI that does the analytical work, not AI that helps analysts do the work faster. Pro tier for mid-size teams needing conversational analytics and deep insights; Enterprise tier for large organizations needing full agentic workflows and orchestration.

2. ThoughtSpot — Best for Search-Driven Self-Service Analytics

ThoughtSpot is a search-first analytics platform that lets business users type natural language questions and get instant visual answers from their cloud warehouse data. ThoughtSpot recently rebranded as an "Agentic Analytics Platform" and introduced Spotter agents (SpotterViz, SpotterModel, SpotterCode) that assist with visualization, modeling, and code generation. However, Spotter agents help users build analytics faster — they do not autonomously investigate data, detect anomalies, or explain why metrics changed. By Gartner's own framework for agent washing, this is closer to AI-assisted copilot functionality than agentic intelligence.

Key capabilities:

- Powerful NL search interface with strong query accuracy on well-modeled data

- Spotter agents for AI-assisted visualization and data modeling

- ThoughtSpot Analyst Studio (via Mode acquisition) for SQL, R, and Python workflows

- Embedded analytics with developer-friendly APIs

- Free tier available for small-scale use

Where ThoughtSpot excels: Search experience. ThoughtSpot's core search interface is mature, responsive, and handles complex multi-dimensional queries well when the underlying data model is clean. Spotter agents are genuinely useful for helping users build better visualizations and explore data paths they wouldn't have found on their own.

Where ThoughtSpot falls short: ThoughtSpot doesn't offer automated deep insights — there's no root cause decomposition, driver ranking, or proactive monitoring that explains why metrics changed. It answers questions well but doesn't tell you what questions to ask. Every search is independent with no persistent context connecting investigations over time. The platform is horizontal with no industry-specific depth, and lacks native predictive modeling.

Pricing: Essentials ($25/user/mo), Pro ($50/user/mo), Enterprise (custom). Average enterprise contract: $100K-$500K/year.

Ideal for: Data-forward enterprises with clean warehouse data and technical analytics teams that prioritize self-service exploration over automated insight delivery.

3. Tableau Next — Best for Salesforce-Ecosystem Visualization

Tableau Next represents Salesforce's bet on AI-augmented analytics, shipping with three AI agents (Data Pro, Concierge, Inspector), a new semantic layer (Tableau Semantics), and deep Agentforce integration. It claims the title of "the world's first agentic analytics platform," though the substance behind that claim warrants scrutiny.

Key capabilities:

- Three AI agents: Data Pro (data prep), Concierge (exploration), Inspector (alerting)

- Tableau Semantics — a new governed semantic layer

- Deep integration with Salesforce Data Cloud and Agentforce

- Industry-leading visualization engine with massive community and ecosystem

- Tableau Marketplace for composable agent skills

Where Tableau Next excels: Visualization remains Tableau's strength, and the brand recognition is unmatched. For organizations already invested in Salesforce (CRM, Data Cloud, Agentforce), Tableau Next offers a tightly integrated analytics layer. Tableau Semantics is a meaningful addition, bringing governed metric definitions to a platform that previously lacked them.

Where Tableau Next falls short: Tableau Next's agents assist with tasks like data prep and exploration, but don't perform autonomous analytical investigation or deliver automated deep insights. Inspector provides basic alerting, not proactive monitoring with root cause explanations. The platform requires data to flow through Salesforce Data Cloud, which creates ecosystem lock-in. No industry-specific depth in pharma, CPG, or FP&A. Pricing is complex: Tableau+ plus Agentforce and Data Cloud credits.

Pricing: Tableau+ ($115/user/mo Enterprise Creator), plus Agentforce and Data Cloud credits. Contact sales for Tableau Next-specific pricing.

Ideal for: Organizations deeply invested in the Salesforce ecosystem that want AI-augmented visualization and are comfortable with Data Cloud as their analytics data layer.

4. Power BI + Copilot — Best for Microsoft-Standardized Enterprises

Power BI holds roughly 30-36% of the global BI market, and Copilot adds AI capabilities that help users create reports, summarize dashboard pages, and ask questions of their data in natural language. The combination is competent for visualization and reporting, and it is encountered in over 70% of enterprise analytics evaluations simply because most organizations already have Microsoft licenses.

Key capabilities:

- Copilot report pane: summarizes visible report pages, generates visuals from natural language

- Standalone Copilot experience (Preview): cross-report Q&A with data attachment

- DAX query generation and measure descriptions

- Deep integration with Microsoft Fabric, Excel, Teams, and Azure

- Mobile Copilot with voice input (iOS Preview)

Where Power BI + Copilot excels: Distribution, cost, and conversational access to live data. If your organization runs on Microsoft, Power BI Pro at $10/user/mo is the most affordable analytics option, and the integration with Excel, Teams, and SharePoint creates genuine workflow advantages. The semantic model (formerly dataset) provides reasonable metric governance, and Copilot enables natural language Q&A on top of live data connections — letting business users ask questions and get answers without writing DAX. For basic conversational analytics within the Microsoft ecosystem, it's a solid offering.

Where Power BI + Copilot falls short: Copilot handles conversational Q&A and report summarization well, but doesn't go deeper. There are no automated deep insights, no proactive monitoring, and no multi-step investigation capabilities. When a metric drops, Copilot shows you the drop — your team still figures out why. No industry-specific capabilities; Power BI relies on its partner ecosystem for vertical solutions.

Pricing: Pro ($10/user/mo), Premium Per User ($20/user/mo). Copilot requires Fabric F64+ capacity (starting ~$5,000/month).
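A quick way to see how the capacity requirement changes the economics is to spread the fixed cost across users. The sketch below uses only the two figures quoted above and assumes, for simplicity, that every user holds a Pro seat and that a single F64 capacity is sufficient; actual licensing rules and capacity sizing may differ, and `effective_cost_per_user` is a hypothetical helper.

```python
PRO_PER_USER_MONTHLY = 10.0     # USD per user, Pro price quoted above
F64_CAPACITY_MONTHLY = 5_000.0  # USD, approximate F64 starting price quoted above


def effective_cost_per_user(users: int) -> float:
    """Monthly cost per user when one F64 capacity is shared by all users.

    Assumption: every user holds a Pro seat and one capacity is enough.
    """
    if users <= 0:
        raise ValueError("users must be positive")
    return PRO_PER_USER_MONTHLY + F64_CAPACITY_MONTHLY / users
```

Under these assumptions, 100 users work out to $60 per user per month and 1,000 users to $15, so the headline Pro price understates the cost of Copilot for smaller teams.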

Ideal for: Microsoft-standardized enterprises that need cost-effective reporting and visualization and will pair Power BI with a deeper analytics layer for root cause investigation.

5. Snowflake Cortex — Best for Native NL Querying on Snowflake Data

Snowflake Cortex adds an AI intelligence layer to the Snowflake data platform, with Cortex Agents (GA November 2025) orchestrating between Cortex Analyst (text-to-SQL on structured data) and Cortex Search (RAG over unstructured text). Snowflake Intelligence (ai.snowflake.com) provides an end-user chat interface.

Key capabilities:

- Cortex Analyst: text-to-SQL with semantic model support (YAML-based)

- Cortex Search: RAG-based retrieval over unstructured documents

- Cortex Agents: orchestration layer routing between structured and unstructured queries

- Snowflake Intelligence: consumer-grade chat UI at ai.snowflake.com

- SQL-callable Cortex AI functions (classify, extract, summarize), such as SNOWFLAKE.CORTEX.SUMMARIZE

Where Snowflake Cortex excels: If your data is already in Snowflake, Cortex provides zero-friction NL querying with native semantic model integration. Cortex Agents can combine structured and unstructured data analysis in a single workflow, which is genuinely useful. The consumption-based pricing means no additional licensing — just compute costs.

Where Snowflake Cortex falls short: Cortex Analyst translates natural language into SQL and returns results — the user still interprets those results and investigates why a metric changed. There are no automated deep insights: no root cause decomposition, no driver ranking, no executive summaries. Each query is stateless with no persistent context across sessions. Cortex only works on Snowflake-resident data, so teams working across multiple sources (IQVIA, Veeva, MMIT, Salesforce) need to consolidate everything into Snowflake first. The consumption-based pricing means AI costs are additive to existing Snowflake spend and can be difficult to predict at scale.

Pricing: Consumption-based Snowflake credits. AI costs are additive: Cortex Analyst at 6.7 credits/100 messages, per-token for AI functions, per-GB for Cortex Search.
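Because the rate is quoted in credits, a dollar estimate depends on what your contract charges per credit. The sketch below converts a message volume into credits using the Cortex Analyst rate quoted above; the price per credit is left as a parameter because it is not stated here, and `cortex_analyst_cost` is a hypothetical helper that ignores warehouse compute and other AI function charges.

```python
CREDITS_PER_100_MESSAGES = 6.7  # Cortex Analyst rate quoted above


def cortex_analyst_cost(messages: int, usd_per_credit: float) -> float:
    """Estimate Cortex Analyst spend for a given message volume.

    `usd_per_credit` is an input you must supply from your own contract.
    """
    if messages < 0:
        raise ValueError("messages must be non-negative")
    credits = messages / 100 * CREDITS_PER_100_MESSAGES
    return credits * usd_per_credit
```

Multiplying expected monthly message volume by your own credit price gives a first-order budget line, which is useful precisely because consumption pricing is hard to predict at scale.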

Ideal for: Organizations with data consolidated in Snowflake that want native NL querying without deploying a separate analytics platform, and where root cause investigation will be done by human analysts.

6. Databricks AI/BI Genie — Best for Conversational Q&A on Lakehouse Data

Databricks Genie is a conversational analytics layer built into the Databricks Data Intelligence Platform. It translates natural language questions into SQL and runs them against Unity Catalog data, with curated "Genie Spaces" that analysts configure per use case.

Key capabilities:

- NL-to-SQL with Unity Catalog metadata integration

- Genie Spaces: analyst-curated environments with knowledge stores and value dictionaries

- Trusted answer marking for queries matching certified SQL templates

- Built-in benchmarking tools for NL-to-SQL accuracy evaluation

- Included with Databricks SQL — no additional license

Where Databricks Genie excels: Free with Databricks SQL. For organizations already running Databricks, Genie adds conversational Q&A at zero incremental licensing cost. The Trusted answer marking is a genuinely useful trust signal that other platforms lack.

Where Databricks Genie falls short: Genie is a text-to-SQL interface, not a full analytics platform. Each Space is limited to 25 tables and requires analyst curation. There are no automated deep insights, no proactive monitoring, and no multi-source connectivity beyond Databricks-resident data. No persistent context across conversations. The Research Agent (deeper analysis) remains in beta.

Pricing: Included with Databricks SQL. Cost = SQL warehouse compute (DBU-based, pay-per-second).

Ideal for: Databricks-native organizations that want to add NL querying to existing data without deploying a separate tool, and where analytics depth will come from human analysts, not the platform.

7. Qrvey — Best for Embedded Multi-Tenant SaaS Analytics

Qrvey is purpose-built for SaaS companies that need to embed analytics into their products with native multi-tenant isolation, flat-rate pricing, and no-code workflow automation. It is not an enterprise analytics platform for internal use — it's infrastructure for product teams building analytics into their applications.

Key capabilities:

- Multi-tenant architecture with native tenant isolation

- Embedded authoring with pure JavaScript widgets (no iframes)

- Built-in data engine powered by Elasticsearch

- No-code workflow automation with event triggers

- GenAI features with BYO LLM support

Where Qrvey excels: Multi-tenant embedded analytics. Qrvey is the most mature option for SaaS companies that need analytics embedded in their product with proper tenant isolation, and flat-rate pricing means costs scale predictably.

Where Qrvey falls short: Qrvey is built for embedded SaaS analytics, not enterprise self-serve. There are no automated deep insights, no proactive monitoring, and no industry-specific intelligence. It's the right tool for product teams embedding analytics into their applications — not for analytics teams investigating their own data.

Pricing: Flat-rate, custom pricing. No per-user fees.

Ideal for: SaaS product teams embedding customer-facing analytics into multi-tenant applications.

8. Julius AI — Best for Individual Analysts on a Budget

Julius AI is a consumer-grade "chat with your data" tool that lets users upload CSV, Excel, and other files and ask questions in natural language. With over 2 million users and a PLG motion, it's one of the fastest-growing tools in the ad-hoc data analysis space.

Key capabilities:

- Upload-and-chat interface with instant chart generation

- Support for CSV, Excel, PDF, images, and Google Sheets

- Advanced Reasoning mode with step-by-step analysis plans

- Basic warehouse connectivity (Snowflake, PostgreSQL, MySQL)

- $20-45/month pricing with a free tier

Where Julius AI excels: Time to first insight and conversational accessibility. Julius's onboarding is frictionless — upload a file, start chatting, get a chart in seconds. The conversational interface is genuinely easy to use, and basic warehouse connectivity to Snowflake, PostgreSQL, and MySQL extends it beyond pure file uploads. For individual analysts or academics doing quick exploratory analysis, it's hard to beat at $20-45/month.

Where Julius AI falls short: Julius is designed for individual exploration, not team-scale analytics. There's no governed semantic layer, so different users asking the same question may get different results. No automated deep insights, no persistent context between sessions, and no enterprise security features like row-level access controls.

Pricing: Free (15 messages/month), Starter ($20/mo), Pro ($45/mo), Enterprise (custom).

Ideal for: Individual analysts, researchers, and small teams that want quick exploratory analysis on uploaded files without enterprise governance requirements.

9. ChatGPT Advanced Data Analysis (OpenAI) — Best for Ad-Hoc Exploratory Analysis

ChatGPT's data analysis feature gives the world's most popular AI assistant a sandboxed Python environment where it writes and executes code against uploaded files. For one-off analysis by a single user, it's genuinely impressive. For enterprise analytics, it's a fundamentally different category.

Key capabilities:

- File uploads: CSV, Excel, PDF, JSON, images

- Code execution: writes and runs Python (pandas, matplotlib, scikit-learn)

- Interactive and static chart generation

- Downloadable outputs: Excel, PowerPoint, PDF

- Enterprise MCP connectors for data source connectivity (Enterprise plan only)

Where ChatGPT excels: Accessibility, flexibility, and conversational breadth. 200+ million weekly active users already know the interface. For a quick "upload this CSV and tell me what's interesting," ChatGPT is remarkably capable. The code execution environment handles sophisticated statistical analysis when prompted well. Enterprise MCP connectors now extend ChatGPT's reach to live data sources including Snowflake, making it more than a file-upload tool for organizations on the Enterprise plan.

Where ChatGPT falls short: MCP connectors expand data access, but ChatGPT remains a general-purpose AI assistant without a governed semantic layer. Two people asking the same question may get different answers because there are no enforced metric definitions to ensure consistency. It offers no automated deep insights, no proactive monitoring, no audit trail, and no industry-specific intelligence.

Pricing: Plus ($20/mo), Pro ($200/mo), Team ($25/user/mo), Enterprise (custom).

Ideal for: Knowledge workers who need quick, one-off data exploration and are comfortable with results that depend on prompt quality rather than governed definitions.

10. Google Gemini (BigQuery + Looker) — Best for Google Cloud-Native Teams

Google's AI analytics story is fragmented across multiple products: Gemini in BigQuery (SQL/Python code assist, Data Canvas, Data Insights), Gemini in Looker (NL exploration), and Gemini in Google Sheets (formula generation). Stitching them together approximates a cohesive analytics experience — but it requires work.

Key capabilities:

- BigQuery Data Canvas: NL-based multi-step data exploration

- BigQuery Code Assist: SQL and Python generation from natural language

- Looker Gemini: NL exploration on governed Looker models

- Google Sheets AI: formula generation and data analysis

- ML.GENERATE_TEXT: invoke Gemini models in SQL for text analysis on BigQuery data

Where Google Gemini excels: If your organization is Google Cloud-native (BigQuery + Looker), Gemini features are increasingly bundled at no extra cost. BigQuery Data Canvas is a genuinely useful NL exploration interface, and Looker's semantic layer (LookML) provides strong metric governance.

Where Google Gemini falls short: The analytics experience is fragmented across BigQuery, Looker, and Sheets — there's no unified workflow. No automated deep insights, no proactive monitoring, and no industry-specific capabilities. Gemini augments existing tools rather than performing autonomous analysis.

Pricing: Consumption-based (BigQuery), per-user (Looker), per-seat (Workspace). AI features increasingly bundled.

Ideal for: Google Cloud-native organizations that want AI augmentation across their existing BigQuery + Looker stack without introducing another vendor.

11. Claude (Anthropic) — Best for Sophisticated Code-Driven Data Analysis

Anthropic's Claude offers data analysis through a sandboxed code execution environment where Claude writes and runs Python, creates downloadable files (Excel, PowerPoint, PDF), and processes uploaded datasets. Claude for Excel (beta) adds NL formula generation directly in spreadsheets.

Key capabilities:

- Code execution: Python, Node.js, Bash in sandboxed Ubuntu container

- File upload: CSV, Excel, JSON, XML, PDF, images

- Claude for Excel (beta): NL commands for spreadsheet manipulation

- MCP integrations: Google Workspace, growing partner ecosystem

- Downloadable outputs: Excel, Word, PowerPoint, PDF

Where Claude excels: Reasoning quality. Claude's analytical reasoning on uploaded data is exceptionally strong, particularly for complex multi-step analyses that require nuanced interpretation. The code execution environment is robust, and the output quality for one-off analytical reports is often superior to other general-purpose AI assistants.

Where Claude falls short: Claude is a general-purpose AI assistant, not an analytics platform. No semantic layer, no governed metric definitions, no proactive monitoring, and no persistent data connections to enterprise warehouses. Excellent for one-off analytical work — not designed for governed, repeatable enterprise analytics.

Pricing: Pro ($20/mo), Team ($25/user/mo), Enterprise (custom). Code execution: 50 free hours/day per org, then $0.05/hr.

Ideal for: Power users and analysts who want high-quality, one-off data analysis with strong reasoning and code generation, without enterprise governance requirements.

12. Domo — Best for Mid-Market Cloud BI with Integrated Data Pipeline

Domo offers a cloud-native BI platform with integrated data pipeline (Magic ETL), AI-powered alerts, and an increasingly AI-augmented analytics experience. Its strength lies in combining data integration, visualization, and collaboration in a single platform.

Key capabilities:

- Magic ETL: low-code data integration and transformation

- AI-powered alerts and threshold monitoring

- Domo.AI: NL querying and AI-assisted data exploration

- App framework for building custom data apps

- Extensive pre-built connector library (1,000+)

Where Domo excels: All-in-one platform that combines data integration, visualization, and alerts without requiring a separate data warehouse. Magic ETL is genuinely useful for mid-market companies that don't have dedicated data engineering teams.

Where Domo falls short: No automated deep insights. Alerting is threshold-based rather than intelligent anomaly detection with root cause analysis. NL capabilities are less mature than those of leading competitors. Limited industry-specific depth. Domo's market position has shifted since going private in 2023, and the long-term product roadmap is less visible than those of publicly traded competitors.
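To illustrate the gap between threshold-based alerting and statistical anomaly detection, here is a minimal Python sketch. It is not how Domo or any other vendor implements alerting. The z-score rule, the `z_limit` default, and the sample values are assumptions chosen for illustration.

```python
from statistics import mean, stdev

def threshold_alert(value, floor):
    """Fixed-threshold rule: fire only when the value drops below a static floor."""
    return value < floor

def zscore_alert(history, value, z_limit=3.0):
    """Simple anomaly rule: fire when the value sits more than z_limit
    sample standard deviations away from the recent mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > z_limit

# Hypothetical daily KPI readings (illustrative data only).
history = [100, 102, 98, 101, 99, 103, 97, 100]
today = 88

print("threshold alert:", threshold_alert(today, floor=80))
print("z-score alert:  ", zscore_alert(history, today))
```

In this made-up series the reading of 88 stays above the static floor of 80, so the threshold rule is silent, while the z-score rule flags it because the value is far outside the recent range. Neither rule explains why the value moved; that is the separate root cause step.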

Pricing: Enterprise custom pricing. Previously listed per-user pricing has been replaced with platform-based licensing.

Ideal for: Mid-market companies that need an all-in-one analytics and data integration platform without building a separate data warehouse stack.

What "Agentic Analytics" Actually Means (And Who's Faking It)

The term "agentic analytics" has become the most overused label in the category. At least four platforms in this comparison — Tellius, ThoughtSpot, Tableau Next, and Qrvey — now claim the "agentic analytics platform" title. But there is a material difference between platforms where AI agents autonomously perform analytical work and platforms that rebranded existing features with the word "agent."

Gartner has been direct about this problem, identifying widespread "agent washing" across the enterprise software industry — the rebranding of chatbots, RPA, and AI assistants as "agents" without substantive agentic capabilities.


A useful litmus test: Can the platform plan and execute a multi-step analytical investigation from a single question, without human guidance at each step, and deliver a finished explanation of what happened and why?

By that standard, Tellius's Agent Mode qualifies — it autonomously decomposes questions into investigation plans, executes them across data sources with full business context from the knowledge layer, chains analytical steps through orchestrated workflows, and delivers finished deep insights with quantified root causes, executive summaries, and recommendations. ThoughtSpot's Spotter agents help users build analytics faster but require human direction at each step and don't carry context between sessions. Tableau Next's agents assist with specific tasks (data prep, exploration, alerting) but don't perform autonomous analytical investigation or chain findings into multi-step workflows. Snowflake Cortex Agents orchestrate between services — deciding whether to route a question to structured data or unstructured search — but the intelligence ends at SQL generation. A Cortex Agent decides which service should handle this question, not what the analysis means or what should be done about it.

The distinction matters because choosing a platform based on its "agentic" marketing rather than its actual autonomous capabilities will leave your team doing the same manual investigation work — just with a nicer chat interface.

Tellius vs ThoughtSpot: Insight-First vs Search-First Analytics

Tellius and ThoughtSpot both offer strong conversational analytics — natural language Q&A on governed enterprise data — but they solve fundamentally different problems beyond that shared foundation. ThoughtSpot is search-first: it excels at letting business users explore clean warehouse data independently. Tellius is insight-first: it excels at automatically explaining why metrics changed and proactively monitoring KPIs without human prompting.

Where both platforms perform well: a business user types a question in plain English and gets a governed, accurate answer backed by a semantic layer. Both handle complex multi-dimensional queries and support follow-up questions. ThoughtSpot's search experience is mature and fast; Tellius's conversational layer is deeply integrated with its knowledge and context layers so that each question benefits from accumulated business understanding.

The core architectural difference beyond conversational Q&A: ThoughtSpot translates questions into queries and returns results for human interpretation. Tellius translates questions into deep insights — chaining multiple analytical steps, applying business context from a persistent knowledge layer, and delivering finished analyses with ranked, quantified drivers, executive summaries, and recommendations. When a pharma brand manager asks "why did TRx drop in the Southeast?", ThoughtSpot returns a chart of TRx by territory. Tellius returns a complete deep insight: payer mix shift contributed 42%, HCP access restrictions contributed 28%, competitive launch contributed 18% — with trend context, an executive summary, and actionable recommendations.
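One simple way to produce "driver X contributed N%" figures is additive contribution analysis: split the metric change by a dimension and express each segment's change as a share of the total change. The Python sketch below is a deliberately simple stand-in, not a description of how Tellius computes its drivers. The payer segments and TRx counts are hypothetical.

```python
# Hypothetical TRx counts by payer segment for two periods (illustrative only).
prior = {"commercial": 1000, "medicare": 800, "medicaid": 600, "cash": 400}
current = {"commercial": 790, "medicare": 660, "medicaid": 510, "cash": 340}

def rank_drivers(prior, current):
    """Return (segment, change, share_of_total_change), largest change first."""
    total_delta = sum(current.values()) - sum(prior.values())
    if total_delta == 0:
        raise ValueError("metric did not change; nothing to decompose")
    rows = []
    for segment in prior.keys() | current.keys():
        delta = current.get(segment, 0) - prior.get(segment, 0)
        rows.append((segment, delta, delta / total_delta))
    # Rank by absolute size of the change so the biggest movers come first.
    return sorted(rows, key=lambda row: abs(row[1]), reverse=True)

ranked = rank_drivers(prior, current)
for segment, delta, share in ranked:
    print(f"{segment:<12}{delta:+6d}  {share:6.1%} of total change")
```

A production system would also need to handle offsetting segments (some up, some down), mix effects, and statistical significance, none of which this sketch attempts.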

ThoughtSpot has no automated deep insights — no root cause decomposition, no quantified driver ranking, no executive summaries, no proactive monitoring with root cause explanations, and no persistent context that connects investigations across time. Spotter agents help users build visualizations faster but don't orchestrate multi-step analyses. ThoughtSpot is horizontal with no industry-specific depth.

Tellius has no free tier and requires a consultative sales process, so ThoughtSpot offers a faster time-to-trial. The Tellius Pro tier does, however, bring deployment timelines and investment closer to mid-size team budgets. Tellius is purpose-built for pharma, CPG, finance, and B2B RevOps, which is a strength in those verticals and a limitation outside them.

Choose ThoughtSpot if your primary need is giving business users fast, independent access to explore clean warehouse data. Choose Tellius if your primary need is automated root cause investigation, proactive anomaly detection, and governed insight delivery in pharmaceutical, CPG, or finance analytics.

Tellius vs Snowflake Cortex: Analytics Platform vs Data Platform Add-On

Snowflake Cortex adds conversational querying to the Snowflake data platform. Tellius is a purpose-built analytics platform that connects to Snowflake (and 30+ other sources) and adds the analytical intelligence layer that Cortex doesn't provide: automated deep insights with root cause decomposition, proactive monitoring, persistent business knowledge, key driver ranking, executive summaries, workflow orchestration, and industry-specific data models.

The fundamental difference: Cortex retrieves data. Tellius analyzes it and delivers finished insights. Cortex Analyst translates natural language into SQL and returns results — the user has to interpret those results, draw conclusions, and figure out why a metric moved. Tellius generates a complete deep insight: decomposing the metric change into ranked contributing factors, quantifying each driver's impact, connecting the finding to prior investigations via persistent context, and delivering a finished executive summary with recommendations.

Cortex only works on Snowflake-resident data. For enterprise analytics teams working across IQVIA, Veeva, MMIT, Salesforce, and internal finance systems — much of which doesn't live in Snowflake — Cortex requires a data engineering project to consolidate everything into Snowflake before any analytical value is delivered. Tellius connects to 30+ data sources natively and sits on top of existing infrastructure.

Cortex has no persistent analytical context, no workflow orchestration, and no industry-specific intelligence. Each query is stateless. Tellius maintains a knowledge layer with governed metric definitions, business rules, and domain-specific terminology — and carries context across investigations so findings compound over time.

Choose Snowflake Cortex if your data is already in Snowflake, your primary need is basic NL querying, and your team has the analytical capacity to interpret results and investigate root causes manually. Choose Tellius if your team's bottleneck is the analytical labor of figuring out why metrics moved — and you need an intelligence layer that performs that investigation autonomously, delivers finished deep insights with executive summaries and recommendations, across multiple data sources, with industry-specific understanding.

Frequently Asked Questions

Part 1: Understanding Analytics Platforms

What is an AI analytics platform?

An AI analytics platform is software that uses artificial intelligence to analyze enterprise data, answer business questions in natural language, and — in more advanced platforms — automate analytical workflows that previously required human analysts. Unlike traditional visualization tools that create charts for humans to interpret, AI analytics platforms perform the interpretation: surfacing patterns, decomposing metric changes, and in some cases proactively monitoring data and delivering insights. The category ranges from conversational Q&A interfaces on top of data warehouses to fully agentic systems that autonomously investigate and explain business performance changes. Tellius is an agentic analytics platform purpose-built for enterprise teams in pharma, CPG, finance, and B2B RevOps that need AI to do the analytical work, not just assist with it.

What is the difference between agentic analytics and conversational analytics?

Conversational analytics lets users ask questions in natural language and get visual or text-based answers from governed enterprise data. It's reactive — the system only works when asked — but it's a powerful capability when backed by a semantic layer that ensures consistency. Agentic analytics goes further: AI agents autonomously monitor data, detect anomalies, plan multi-step investigations, carry context across findings, and deliver insights proactively without human prompting. The difference is between a search engine for your data (conversational) and a 24/7 analyst that watches your KPIs, connects today's anomaly to last week's finding, and explains what changed before you ask (agentic). Most platforms marketed as "agentic" today are actually conversational — they answer questions but don't perform autonomous analytical work. The strongest platforms offer both: governed conversational Q&A for on-demand questions, and agentic intelligence for continuous monitoring and proactive insight delivery.

What makes conversational analytics enterprise-grade vs consumer-grade?

Enterprise-grade conversational analytics answers questions against a governed semantic layer — so when five different people ask the same question, they get the same answer using the same metric definitions. Consumer-grade conversational analytics (ChatGPT, Claude, Julius) can now connect to data warehouses via MCP connectors and APIs, but connecting to data is not the same as governing it. Without a semantic layer, two people asking "what's our revenue?" may get different answers depending on how they phrase the question — there's no enforced definition of what "revenue" means. The key differentiators are governance (consistent metric definitions, calculation logic, business rules enforced across every query), persistent context (each question builds on prior conversations and organizational knowledge), multi-turn analytical depth (sustained investigations, not one-off queries), and security (row-level access, audit trails, SSO). Tellius and ThoughtSpot both offer enterprise-grade conversational analytics with governed semantic layers. The difference is what happens after the question is answered — ThoughtSpot stops at the answer, while Tellius can autonomously investigate root causes, monitor KPIs proactively, and deliver insights through orchestrated workflows.
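To make the governance point concrete, here is a minimal Python sketch of the idea behind a semantic layer: every phrasing of a business term resolves to one definition, so different users compute the same number. The `METRICS` registry, the `SYNONYMS` map, the net-revenue rule, and the sample orders are all hypothetical and do not describe any vendor's implementation.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical order records; field names are illustrative only.
ORDERS = [
    {"amount": 1200.0, "refunded": False, "internal": False},
    {"amount": 300.0, "refunded": True, "internal": False},
    {"amount": 500.0, "refunded": False, "internal": True},
    {"amount": 800.0, "refunded": False, "internal": False},
]

@dataclass(frozen=True)
class Metric:
    name: str
    description: str
    compute: Callable[[list], float]

def _net_revenue(rows):
    # Assumed business rule: exclude refunded and internal orders.
    return sum(r["amount"] for r in rows if not r["refunded"] and not r["internal"])

# Single source of truth for metric logic.
METRICS = {"revenue": Metric("revenue", "Net of refunds and internal orders", _net_revenue)}

# Different phrasings map onto the same governed metric.
SYNONYMS = {"revenue": "revenue", "sales": "revenue", "total revenue": "revenue"}

def answer(term, rows):
    key = SYNONYMS.get(term.strip().lower())
    if key is None:
        raise KeyError(f"No governed definition for {term!r}")
    return METRICS[key].compute(rows)

# Ungoverned: two analysts apply different filters and disagree.
ungoverned_a = sum(r["amount"] for r in ORDERS)
ungoverned_b = sum(r["amount"] for r in ORDERS if not r["refunded"])

# Governed: different phrasings, identical result.
governed_a = answer("Sales", ORDERS)
governed_b = answer("total revenue", ORDERS)
print(ungoverned_a, ungoverned_b, governed_a, governed_b)
```

The design choice worth noting is that an unknown term raises an error rather than letting the caller improvise a calculation, which is the behavior that keeps answers consistent.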

What is the difference between analytics with workflow orchestration and chatbot-style data querying?

Chatbot-style data tools translate individual questions into SQL queries and return results — each question starts from zero with no memory, no context, and no connection to previous findings. Analytics platforms with workflow orchestration operate as connected pipelines: data flows through governed transformations, analysis builds on prior context, multiple AI agents coordinate across steps, and finished insights are delivered proactively. Tellius's agentic workflows chain together data ingestion, metric computation, root cause decomposition, narrative generation, and stakeholder delivery into repeatable pipelines that run on schedule or on anomaly detection — with every step governed and auditable.
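The contrast with single-shot querying can be sketched as a small pipeline in which each step reads and extends a shared context and every step is recorded for auditing. This is an illustrative Python sketch only: the step names, the `run_pipeline` helper, and the sample rows are hypothetical, and real orchestration (scheduling, anomaly triggers, delivery channels) is out of scope.

```python
def ingest(ctx):
    # Stand-in for loading governed data; the rows are made up.
    ctx["rows"] = [
        {"region": "East", "prior": 500, "current": 430},
        {"region": "West", "prior": 400, "current": 390},
    ]
    return ctx

def compute_metric(ctx):
    ctx["prior_total"] = sum(r["prior"] for r in ctx["rows"])
    ctx["current_total"] = sum(r["current"] for r in ctx["rows"])
    ctx["delta"] = ctx["current_total"] - ctx["prior_total"]
    return ctx

def decompose(ctx):
    # Rank regions by the absolute size of their change.
    ctx["drivers"] = sorted(
        ((r["region"], r["current"] - r["prior"]) for r in ctx["rows"]),
        key=lambda d: abs(d[1]),
        reverse=True,
    )
    return ctx

def narrate(ctx):
    top, change = ctx["drivers"][0]
    ctx["summary"] = f"Metric moved {ctx['delta']:+d}; largest driver: {top} ({change:+d})."
    return ctx

def run_pipeline(steps, ctx=None):
    """Run steps in order, passing shared context and logging each step name."""
    ctx = dict(ctx or {})
    ctx["audit"] = []
    for step in steps:
        ctx = step(ctx)
        ctx["audit"].append(step.__name__)
    return ctx

result = run_pipeline([ingest, compute_metric, decompose, narrate])
print(result["summary"])
print("audit trail:", result["audit"])
```

Because each step receives the accumulated context, later steps build on earlier findings instead of starting from zero, which is the property the paragraph above attributes to orchestrated workflows.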

Do you still need Tableau or Power BI if you have an analytics platform?

In most cases, yes, at least for now. Traditional visualization tools excel at governed reporting, standardized dashboards for broad organizational distribution, and executive-facing visualizations. AI analytics platforms excel at ad-hoc investigation, root cause analysis, and proactive insight delivery. The most effective enterprise analytics stacks today use both: visualization tools for standardized reporting and distribution, and AI analytics platforms for investigation, explanation, and proactive monitoring. Tellius is designed to complement existing investments, not replace them.

What capabilities should enterprise teams evaluate in an analytics platform?

Six capabilities separate enterprise-grade AI analytics from consumer-grade tools: governed conversational analytics that lets business users ask questions in plain English and get consistent answers, a governed semantic layer that ensures those answers are the same regardless of who asks, automated root cause analysis that explains why metrics changed, proactive monitoring that surfaces issues before quarterly reviews, persistent knowledge and context that carries business understanding across investigations, and enterprise security (SSO, RLS, audit trails, single-tenant deployment options). If a platform lacks conversational governance and automated root cause analysis, your team will still spend most of its time doing the analytical work manually.

Part 2: Evaluating and Comparing Platforms

How does Tellius compare to ThoughtSpot?

ThoughtSpot excels at letting users search and explore clean warehouse data independently; Tellius excels at generating automated deep insights — explaining why metrics changed with ranked drivers, executive summaries, and recommendations — and proactively surfacing issues before users think to ask. ThoughtSpot is search-first and reactive — it answers questions well but doesn't tell you what questions to ask. Tellius is insight-first and proactive — AI agents monitor KPIs 24/7, decompose changes into ranked drivers, and deliver finished explanations. ThoughtSpot's Spotter agents help users build visualizations and models faster; Tellius's Agent Mode performs the full analytical investigation autonomously with persistent context across findings. ThoughtSpot is horizontal with no industry depth; Tellius has purpose-built System Packs for pharma, CPG, finance, and RevOps.

How does Tellius compare to Power BI + Copilot?

Power BI + Copilot provides solid conversational Q&A on governed semantic models, report summarization, and basic NL exploration — and it does so at a price point that's hard to beat within the Microsoft ecosystem. Where Power BI stops is analytical depth: Tellius performs automated root cause analysis, proactive monitoring, key driver ranking, persistent context across investigations, and multi-step workflow orchestration that Power BI cannot do at any license tier. The practical recommendation: keep Power BI for enterprise reporting, dashboarding, and basic conversational access to data. Add Tellius for the intelligence layer — the "why" behind the "what" — that Copilot can't provide.

How does Tellius compare to Snowflake Cortex?

Snowflake Cortex translates natural language into SQL and returns query results; Tellius generates automated deep insights — decomposing metric changes into ranked drivers, quantifying each factor's impact, and delivering finished executive summaries with recommendations. Cortex retrieves data — Tellius analyzes it. Cortex only works on Snowflake-resident data and each query is stateless with no context carried across sessions. Tellius connects to 30+ data sources, maintains a persistent knowledge layer with governed business definitions, and orchestrates multi-step workflows that chain monitoring, investigation, and delivery into autonomous pipelines. For pharmaceutical teams working across IQVIA, Veeva, MMIT, and internal systems, Tellius provides the analytical intelligence layer that Cortex does not.

We already have Snowflake — do we still need a separate analytics platform?

Snowflake is an excellent data platform, and Cortex adds useful NL querying on top of it. But Cortex is a query engine, not an analytics engine. It translates questions into SQL and returns results — it does not generate deep insights: no root cause decomposition, no driver ranking, no executive summaries, no proactive anomaly detection, and no finished recommendations. For organizations where the bottleneck is data access (people can't query the warehouse), Cortex solves the problem. For organizations where the bottleneck is analytical labor (people can access data but spend days investigating why metrics moved and assembling narratives), a dedicated analytics platform adds the deep insight layer that Cortex doesn't provide. Tellius connects natively to Snowflake and sits on top of your existing investment — no data migration required.

Can Snowflake Cortex or Databricks Genie replace a dedicated AI analytics platform?

Cortex and Genie add conversational Q&A to existing data platforms, which is valuable for basic self-service querying. However, both are text-to-SQL engines — they translate questions into queries but don't analyze the results. Neither offers automated deep insights (root cause decomposition, driver ranking, executive summaries), proactive monitoring with root cause explanations, persistent knowledge that carries business context across investigations, or workflow orchestration that chains analytical steps into autonomous pipelines. For organizations that need AI to perform the analysis rather than just retrieve data, a dedicated analytics platform provides capabilities that data platform add-ons do not.

Should we build a custom analytics solution or buy a platform?

Building custom AI analytics with developer frameworks gives maximum flexibility but requires dedicated AI/ML engineering teams, 12-18 months of development, and ongoing maintenance of every component — connectors, semantic layer, governance, knowledge management, orchestration, and UI. Purpose-built platforms like Tellius deliver governed, production-grade analytics in weeks — with a Pro tier for mid-size teams and Enterprise for large organizations. The decision comes down to headcount and timeline: if you have 10+ engineers and 18 months, building may make sense. If you need answers next quarter, buying is faster and cheaper.

Part 3: Industry, Pricing, and Deployment

What is the best AI analytics platform for pharmaceutical commercial teams?

Tellius is the only AI analytics platform in this comparison with purpose-built pharma intelligence, including pre-built connectors for IQVIA, Symphony Health, Veeva CRM, and MMIT, plus System Packs that understand TRx, NBRx, payer hierarchies, territory structures, and pharma-specific metrics natively. This matters because pharmaceutical commercial analytics requires domain-specific data relationships that generic platforms don't model. Tellius is trusted by 8 of the top 10 pharmaceutical companies.

What is the best analytics platform for CPG commercial teams?

Tellius offers purpose-built CPG analytics with pre-built connectors for Nielsen, Circana (IRI), and Numerator syndicated data, plus retailer data integration from Walmart Luminate, Kroger 84.51°, and Amazon. System Packs understand trade promotion structures, category hierarchies, and retailer-specific metrics natively. For revenue growth management, category analytics, and trade promotion optimization, Tellius provides industry-specific intelligence that horizontal platforms require months to configure.

What is the best analytics platform for FP&A and finance teams?

Tellius provides purpose-built FP&A analytics with connections to ERP systems (SAP, Oracle, NetSuite), planning platforms (Anaplan, Adaptive, Workday), and data warehouses. AI agents automate variance analysis — decomposing budget-to-actual gaps into ranked contributing factors and generating stakeholder-ready explanations. For close-week acceleration, real-time variance investigation, and automated executive narratives, Tellius replaces the manual decomposition work that consumes FP&A teams during reporting cycles.

What is the best analytics platform for B2B RevOps teams?

Tellius provides purpose-built RevOps analytics with connections to CRM (Salesforce, HubSpot), conversation intelligence (Gong, Chorus), marketing automation, and data warehouses. AI agents monitor pipeline health, decompose win-rate and conversion changes into ranked contributing factors (by segment, rep, deal stage, lead source), and deliver forecast intelligence proactively. For pipeline root cause analysis, deal health scoring, territory optimization, and churn prediction, Tellius replaces the manual spreadsheet work that consumes RevOps teams during forecast cycles.

How much do AI analytics platforms cost in 2026?

Costs vary dramatically. General-purpose AI tools (ChatGPT, Claude) start at $20/month per user. Mid-tier platforms like ThoughtSpot range from $25-50/user/month with enterprise tiers running $100K-$500K/year. Infrastructure-native options (Snowflake Cortex, Databricks Genie) add consumption-based AI costs to existing data platform spend — with unpredictable usage-based pricing. Purpose-built platforms like Tellius offer two tiers: Pro for mid-size teams needing governed conversational analytics and deep insights, and Enterprise for large organizations needing full agentic workflows and orchestration. Both use custom pricing based on deployment scope and data volume, with no per-user fees and payback periods of 6-9 months.

What's the best analytics tool for small teams on a budget?

For individual analysts with limited budgets, Julius AI ($20-45/month) and ChatGPT Plus ($20/month) offer the fastest path to value for file-based analysis. ThoughtSpot's free tier provides basic search analytics for small-scale use. However, these tools lack the governance, consistency, persistent knowledge, and analytical depth required when multiple team members need aligned, trustworthy answers from the same enterprise data. For mid-size teams that need governed analytics without a full enterprise deployment, Tellius Pro provides conversational analytics and automated deep insights with no per-user fees — bringing enterprise-grade capabilities within reach without the overhead of a large-scale implementation.

How long does it take to deploy an AI-powered enterprise analytics platform?

Deployment timelines vary significantly. Consumer tools (ChatGPT, Claude, Julius) are instant. Platform add-ons (Cortex, Genie) can be configured in days to weeks but depend on existing data platform maturity. Enterprise AI analytics platforms typically require more investment: ThoughtSpot estimates 4-8 weeks for basic deployment. Tellius Pro deploys in 4-6 weeks for mid-size teams; Tellius Enterprise deploys in 6-10 weeks for large organizations with complex data environments. Custom-built solutions using frameworks like LangChain or AutoGen typically require 12-18 months of engineering effort.

What ROI should enterprise teams expect from AI-powered analytics?

Enterprise teams deploying AI analytics platforms typically see ROI across three dimensions: speed (root cause investigation drops from 3-5 days to seconds), capacity (analysts reclaim 20+ hours per month previously spent on manual investigation and report assembly), and business impact (proactive detection catches revenue-impacting issues weeks earlier). Tellius customers in pharma report $5-15M in revenue protected per brand through early anomaly detection, with typical payback periods of 6-9 months.
