Beyond Toy Demos: How Tellius Makes AI Analytics Work at Enterprise Scale


The Hype vs. Reality Gap

Every few months, a new viral demo makes the rounds: A data analyst types a natural language question into ChatGPT, it spits out SQL, and magically, they get an answer.

The crowd claps. The vendor smiles. Everyone leaves convinced that AI has solved analytics.

But if you’ve ever worked inside a Fortune 500 data team, you know the truth:

- Your data doesn’t fit in their sandbox.

- Your schemas don’t look like the demo datasets.

- And your warehouses don’t run on generic SQL.

We live in the messy, heterogeneous, dialect-heavy world of real enterprise data. Our customers range from retailers with terabytes of sales logs across Redshift and Hadoop, to financial services firms combining Snowflake, Databricks Delta, and ClickHouse into sprawling data estates.

For them, the question isn’t “Can AI generate SQL?” — it’s “Can AI generate correct, secure, and consistent SQL across hundreds of tables, multiple dialects, and terabytes of data?”

That’s the problem Tellius was built to solve.

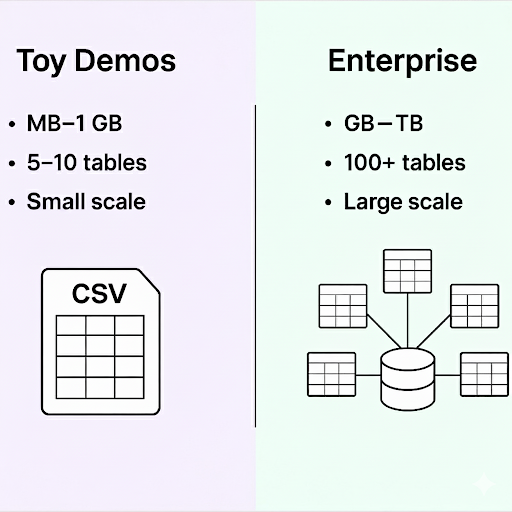

1) Scale — Why Toy Demos Break at Enterprise Size

The first reason toy demos collapse is scale.

- Demos: CSV files or a toy Postgres database, holding a few megabytes to perhaps a gigabyte at most.

- Reality: Enterprises routinely manage gigabytes to terabytes, sometimes even petabytes, in their data warehouses and lakehouses.

Benchmarks & Comparisons

To put this into context:

- Databricks Genie: Databricks’ own best-practice guidance recommends keeping Genie “spaces” to 5–25 tables, with fewer than 50 columns, for accuracy to remain acceptable. Beyond that, performance drops sharply.

- Snowflake Cortex Analyst: Early results show strong SQL accuracy on star and snowflake schemas, but only when schema relationships are simple. Complex, multi-fact schemas remain out of reach.

- Academic Text2SQL benchmarks (commonly used to evaluate GPT-type models): The popular Spider benchmark covers roughly 200 databases, and its queries typically touch only a handful of tables, rarely more than 7–10. This is orders of magnitude smaller than a real enterprise schema.

Now compare this to Tellius customers:

- One customer’s data model for a revenue dashboard spanned 112 tables and over 2,000 columns.

- Another customer’s daily analytics workload routinely queries 5–10 TB of fresh data from a Redshift + S3 hybrid lake.

- A large e-commerce client pulls tens of billions of rows from Hadoop.

Good luck running that through a toy pipeline.

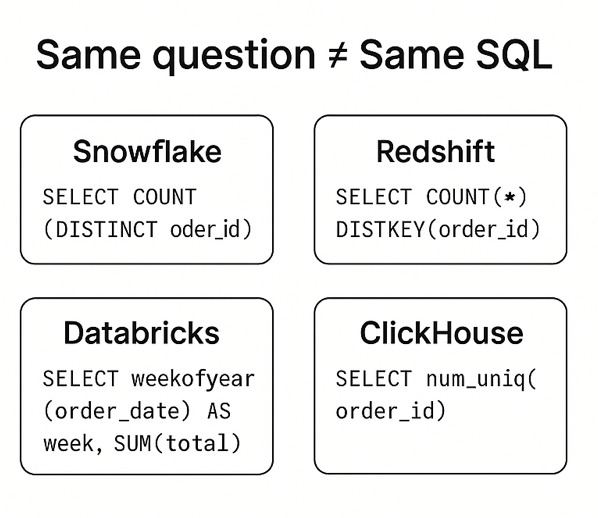

2) The Dialect Problem Nobody Wants to Talk About

Even if you could brute-force scale, you hit the second wall: SQL dialects.

Every system speaks its own dialect:

- Redshift’s DISTKEY syntax doesn’t run in Snowflake.

- Databricks has its own quirks for time series functions.

- ClickHouse can optimize queries Snowflake simply can’t parse.

- Hadoop tables still cling to legacy constructs.

That means a naive “LLM → Database” system will either:

- Throw constant errors (frustrating users), or

- Worse: produce subtly incorrect queries that run — but return garbage.

Both are adoption killers.

This is why Tellius never lets an LLM directly hit your warehouse.
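To make the dialect gap concrete, here is a minimal sketch of rendering one logical query (revenue per quarter) for several engines. The SQL functions shown are the ones each engine documents for quarter truncation; the helper name, table, and column names are hypothetical and are not Tellius's implementation.

```python
# Quarter-truncation syntax per dialect. The SQL functions are real;
# the mapping and helper below are illustrative assumptions.
QUARTER_TRUNC = {
    "snowflake": "DATE_TRUNC('quarter', {col})",
    "redshift": "DATE_TRUNC('quarter', {col})",
    "bigquery": "DATE_TRUNC({col}, QUARTER)",
    "clickhouse": "toStartOfQuarter({col})",
}


def quarterly_revenue_sql(dialect: str, table: str, date_col: str, amount_col: str) -> str:
    """Render the same logical question as SQL for one specific dialect."""
    if dialect not in QUARTER_TRUNC:
        raise ValueError(f"unsupported dialect: {dialect}")
    bucket = QUARTER_TRUNC[dialect].format(col=date_col)
    # Repeat the expression in GROUP BY rather than relying on positional
    # references, which not every engine enables by default.
    return (
        f"SELECT {bucket} AS quarter_start, SUM({amount_col}) AS revenue "
        f"FROM {table} GROUP BY {bucket} ORDER BY quarter_start"
    )


if __name__ == "__main__":
    for name in QUARTER_TRUNC:
        print(name, "->", quarterly_revenue_sql(name, "sales", "order_date", "amount"))
```

A model that emits only one of these forms will fail, or silently misbehave, on the other engines, which is why the translation step belongs in a layer that knows the target system.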

3) How Tellius Solves It — The Semantic Layer

The semantic layer in Tellius was designed to make enterprise AI analytics not just possible, but trustworthy.

How the Semantic Layer Works

- Interpret the Question

  - A business user asks: “Why did renewal rates drop 3% in Q2 after our ERP migration?”

  - Tellius interprets this and identifies “renewal rates,” “Q2,” and “ERP migration” as concepts, not just strings.

- Map to Schema Metadata

  - The semantic layer knows renewal rates live across 17 tables, spanning contracts, customers, and transactions.

  - It also knows which filters apply (Q2, ERP events from system_logs).

- Translate into Dialect-Aware SQL

  - The query is compiled into SQL that is valid for your system, whether that is Redshift, Snowflake, or ClickHouse.

  - Optimizations (like partition pruning) are applied automatically.

- Guardrail Execution

  - All SQL runs through the semantic layer for validation.

  - SQL injection, hallucinated columns, or unsafe joins never make it through.

The result: a correct, consistent, and safe answer — regardless of source system.
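The guardrail step above can be pictured as a check of a structured query request against schema metadata before any SQL is compiled. The sketch below is only an illustration under stated assumptions: the `QuerySpec` shape, the catalog contents, and every column name are hypothetical, and only the table names `contracts` and `customers` come from the example above.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class QuerySpec:
    """Structured request produced upstream (hypothetical shape)."""
    columns: list[tuple[str, str]]  # (table, column) pairs to select
    joins: list[tuple[str, str]] = field(default_factory=list)  # (left, right) tables


# Hypothetical metadata: known columns per table and declared join paths.
CATALOG = {
    "contracts": {"contract_id", "customer_id", "renewed", "end_date"},
    "customers": {"customer_id", "segment"},
}
ALLOWED_JOINS = {frozenset({"contracts", "customers"})}


def validate(spec: QuerySpec) -> list[str]:
    """Return a list of problems; an empty list means the spec may be compiled."""
    problems = []
    for table, column in spec.columns:
        if table not in CATALOG:
            problems.append(f"unknown table: {table}")
        elif column not in CATALOG[table]:
            problems.append(f"unknown column: {table}.{column}")
    for left, right in spec.joins:
        # Only join paths declared in metadata are allowed through.
        if frozenset({left, right}) not in ALLOWED_JOINS:
            problems.append(f"join not declared in metadata: {left} <-> {right}")
    return problems


if __name__ == "__main__":
    ok = QuerySpec([("contracts", "renewed"), ("customers", "segment")],
                   [("contracts", "customers")])
    bad = QuerySpec([("contracts", "churn_score")], [("contracts", "system_logs")])
    print(validate(ok))   # no problems reported
    print(validate(bad))  # hallucinated column and undeclared join are reported
```

Validating a structured spec rather than free-form SQL text is a design choice in this sketch: a column that does not exist in the catalog is rejected before it can reach the warehouse.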

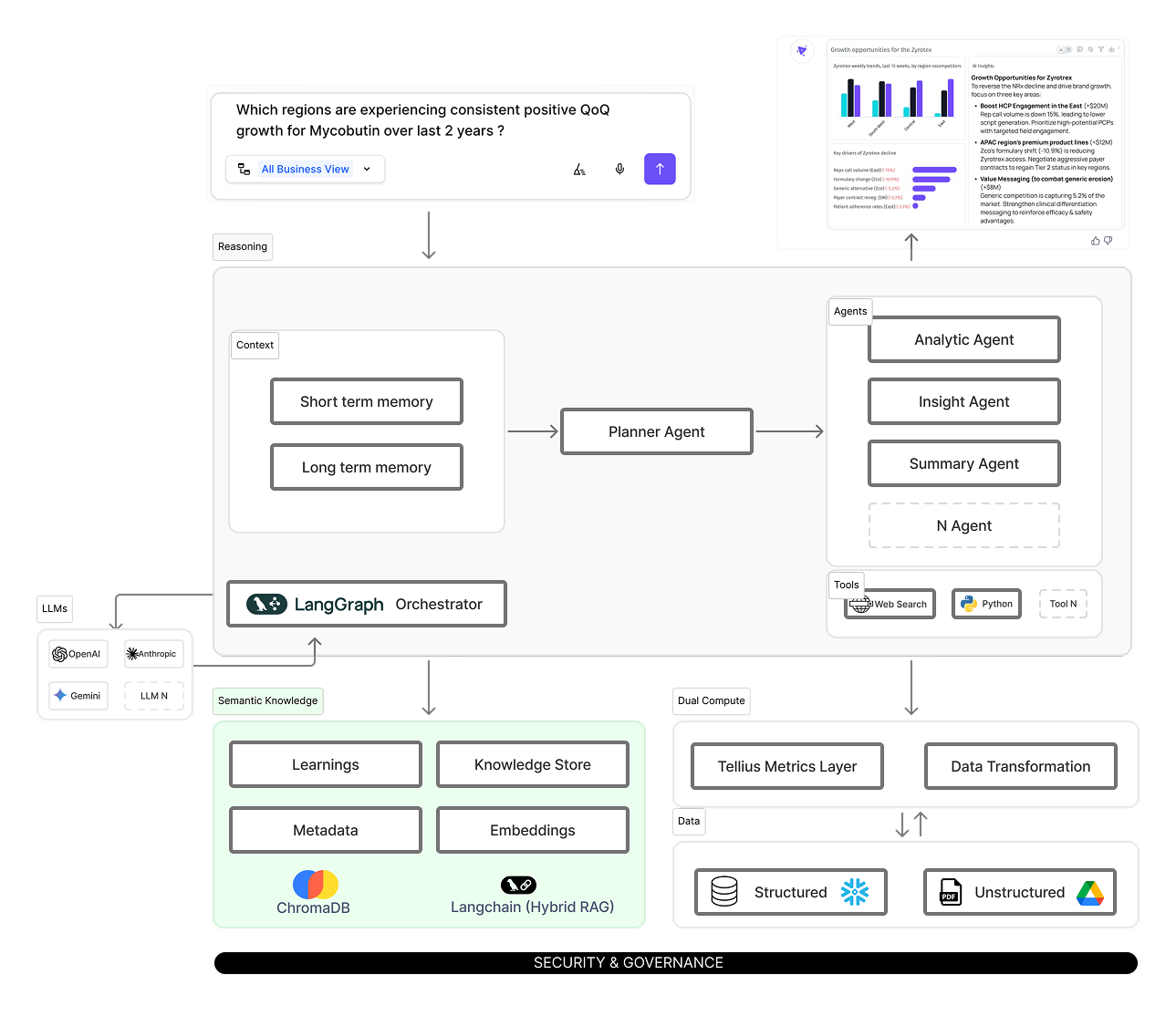

4) Inside Tellius Kaiya — Hybrid RAG Meets Enterprise Analytics

Tellius’ architecture combines agentic reasoning with semantic intelligence and hybrid RAG (retrieval-augmented generation).

Components

Agents:

- Planner: Breaks down complex multi-step questions.

- Analytic Agent: Executes structured analysis steps.

- Insight Agent: Surfaces drivers, anomalies, and causal signals.

- Summary Agent: Explains results in plain English.

LangGraph Orchestrator:

- Coordinates multiple agents, ensuring steps are coherent.

Semantic Engine:

- Combines metadata, lineage, and structured fields with unstructured sources (via Elastic + VectorDB).

- Ensures the right columns, filters, and joins are used.

Tellius Core:

- Metrics Layer: Ensures definitions (like “renewal rate”) are consistent across queries.

- Viz Engine: Translates results into visual insights instantly.

This hybrid approach ensures not just accuracy, but explainability and trust.
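As a rough mental model of how the agents listed above hand work to one another, the following sketch chains four stub functions over a shared state dictionary. It deliberately does not use LangGraph's API; all function names are hypothetical, and the renewal-rate figures are placeholder values chosen to mirror the 3-point drop in the earlier example, not real results.

```python
from typing import Callable

Agent = Callable[[dict], dict]  # each agent reads and extends a shared state


def planner(state: dict) -> dict:
    # Break the question into ordered analysis steps (hard-coded stub).
    state["steps"] = ["compute renewal rate by quarter", "compare Q2 with Q1"]
    return state


def analytic_agent(state: dict) -> dict:
    # Placeholder percentages standing in for a governed query result.
    state["results"] = {"Q1": 90, "Q2": 87}
    return state


def insight_agent(state: dict) -> dict:
    results = state["results"]
    state["delta_points"] = results["Q2"] - results["Q1"]
    return state


def summary_agent(state: dict) -> dict:
    results = state["results"]
    state["summary"] = (
        f"Renewal rate moved from {results['Q1']}% to {results['Q2']}% "
        f"({state['delta_points']:+d} points)."
    )
    return state


def run_pipeline(question: str, agents: list[Agent]) -> dict:
    """Run the agents in order, passing the accumulated state along."""
    state = {"question": question}
    for agent in agents:
        state = agent(state)
    return state


if __name__ == "__main__":
    final = run_pipeline(
        "Why did renewal rates drop in Q2?",
        [planner, analytic_agent, insight_agent, summary_agent],
    )
    print(final["summary"])
```

A real orchestrator would add branching, retries, and calls into the semantic layer at each step; the linear loop here only shows the hand-off pattern.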

5) Real-World Scenarios

Let’s make it concrete with some realistic scenarios.

Scenario 1: Retailer Analyzing Promotions

- Data spread across Redshift Spectrum (customer profiles) and S3 (clickstream logs).

- Business question: “Which promotions drove the highest lift in repeat purchases during Q2?”

- Complexity: 47 tables, 1.2 TB of transaction logs, multiple dialects.

- Tellius Result: Correct joins, dialect-aware SQL, and a plain-English answer.

Scenario 2: Insurance Firm Investigating Claims Spikes

- Data includes historical records still stored on Hadoop.

- Business question: “Why did claims spike by 18% in the Midwest last quarter?”

- Tellius identifies: Increase in severe weather events, concentrated in 3 states, linked to a new claims process introduced mid-quarter.

- No hallucinations, no unsafe queries — just insight.

Scenario 3: Financial Services Monitoring Risk

- Schema: 112 tables and 2,000+ fields in Snowflake.

- Business question: “How much did exposure to sector X increase after Fed policy Y?”

- Tellius leverages metadata, lineage, and semantic definitions to ensure “exposure” is calculated correctly across multiple risk models.


6) Why This Matters

The future of analytics depends on more than simply putting an AI front-end on a database. For AI to truly reshape how organizations make decisions, it must evolve into something deeper: a system of intelligence that can be trusted, governed, and universally understood across every corner of the enterprise.

This vision requires moving beyond clever demos and one-off wins. It calls for an AI that can stand shoulder to shoulder with human analysts—capable of interpreting the full complexity of enterprise data, respecting the boundaries of security, and delivering clarity wherever fragmentation exists.

At the core of that vision are four principles:

- Trust: Without accuracy, even the most elegant interface loses credibility. AI must consistently deliver correct answers that users can believe in.

- Security: Enterprise systems cannot afford the risks of unsafe queries or hallucinations. Guardrails are non-negotiable.

- Consistency: In a world of multiple databases and dialects, the same question must yield the same answer no matter where the data lives.

- Productivity: AI should elevate people by removing friction, allowing business users and analysts alike to focus on insight rather than syntax.

These four principles are not features; they are foundations. Without them, AI remains a curiosity.

7) Tellius vs. The Field

Now round this all up and lay out how Tellius compares to other options in the field:

Conclusion

The next time you see a flashy AI demo spitting out SQL at a dataset, ask yourself:

- Can it handle 100+ tables and 2,000+ fields?

- Can it run on terabytes of real warehouse data?

- Can it generate dialect-aware SQL across Snowflake, Redshift, BigQuery, or ClickHouse?

- Can it guarantee security and prevent hallucinations?

If the answer is no, it’s just a toy.

Tellius was built for the enterprise reality: scale, dialects, trust, and governance.

With Tellius, AI analytics finally works where it matters — across your entire messy, heterogeneous, sprawling data estate.

👉 Book a demo and see the difference for yourself.
