24/7 Proactive AI Agents for Financial Analytics

Traditional finance analytics waits for you to ask questions. Agentic finance intelligence works 24/7—monitoring KPIs, detecting anomalies, investigating root causes, and alerting you before quarterly reviews. FP&A teams operate reactively, catching budget overruns weeks after they start compounding. Finance leaders want proactive intelligence, not backward-looking dashboards. This guide walks through how AI agents transform finance from reactive reporting to continuous intelligence.

What is Agentic Finance Intelligence?

Agentic finance intelligence uses AI agents that work autonomously 24/7—monitoring financial KPIs, detecting anomalies, investigating root causes automatically, and delivering insights before you ask. Unlike conversational analytics where you ask questions and get answers, agentic analytics means AI agents work continuously without prompting—surfacing budget risks, investigating variance drivers, and alerting you when intervention is still possible.

Unlike traditional BI dashboards that show what happened but require manual investigation to understand why, agentic analytics detects meaningful changes, decomposes drivers automatically, and delivers explanations with the alert. Tellius is an agentic analytics platform purpose-built for finance—combining continuous monitoring with automated investigation to shift FP&A from reactive reporting to proactive intelligence.

The Problem

Spotting risks and getting to root causes is far too manual


Finance operates reactively, catching budget issues weeks after they start compounding

No early warning system: Budget variances compound for 60-90 days because monthly reviews only flag issues after thresholds are crossed.

Cost overruns: By the time an overrun is discovered in the quarterly review, corrective action is damage control, not prevention.

Manual investigation: Analysts explore "Why over budget?" every cycle because dashboards show numbers but don't explain drivers.

Important signals are buried: Finance scans 40 dashboards hunting for problems because there's no intelligent prioritization.

Continuous intelligence catches risks when intervention is still possible

Proactive monitoring: AI agents watch budget vs. actual 24/7 and alert when trajectories predict overruns.

Early detection: Agents identify cost trajectory issues 4-6 weeks before close, when course-correction is possible.

Automated investigation: Agents investigate root cause automatically and deliver explanations with the alert.

Intelligent alerting: Agents surface high-priority issues only, ranked by business impact.

The results

The payoff from deploying agentic finance intelligence

6 weeks

10x

$5M+

90%

Why Tellius

How AI Agents Transform Finance Intelligence

Unify

Agents monitor unified financial data across ERP, planning tools, and operational systems—no manual data pulls or dashboard checking required.

Explain

When agents detect anomalies, they automatically investigate root causes across all dimensions and deliver an explanation with the alert: "Sales & Marketing 12% over budget, driven by unplanned headcount additions (+8 FTEs)."

Act

Agents don't wait to be asked—they work 24/7, detect meaningful changes, investigate automatically, and alert leadership before issues compound into bigger problems.

Questions & Answers

Real Questions from Finance Leaders

Below, we've organized real questions from FP&A leaders about agentic analytics and proactive monitoring into three parts. Every answer is grounded in actual finance transformation challenges.

Part 1: Agentic Fundamentals

1. How do AI agents actually work in finance monitoring?

AI agents in finance monitoring operate through a continuous loop of watch, detect, investigate, and alert. The watch phase has agents polling data sources on configured schedules—hourly for fast-moving metrics like cash position, daily for budget versus actual, weekly for trend analysis. Agents compare current values against thresholds, historical patterns, and statistical expectations.

The detect phase triggers when something meaningful changes. This isn't just threshold breaches like "OpEx exceeded 10%"—agents also detect trajectory changes predicting future problems, statistical anomalies deviating from historical patterns, and trend reversals indicating shifts in business dynamics.

The investigate phase is what separates agents from simple alerting. When an agent detects OpEx is 12% over budget, it automatically decomposes the variance across all dimensions—which departments, which accounts, which time periods—and ranks drivers by contribution. This investigation happens in seconds, testing dozens of hypotheses simultaneously.

The alert phase delivers findings with context. Rather than "OpEx exceeded threshold," you receive "OpEx exceeded budget by 12% ($1.5M), driven primarily by Sales & Marketing headcount (65% contribution, +8 FTEs vs. plan). Estimated annual impact: +$3.2M if trend continues." The agent has already done the investigation work that would otherwise take analysts 4-6 hours.
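The detect, investigate, and alert phases can be sketched in a few lines of Python. This is a minimal illustration, not Tellius's implementation: the `LineItem` type, the function names, and the department figures are assumptions chosen so that the output matches the 12% / $1.5M / 65% example above.

```python
from dataclasses import dataclass

@dataclass
class LineItem:
    department: str   # hypothetical dimension; real agents would scan many
    budget: float
    actual: float

def detect(items, threshold_pct=0.10):
    """Detect: return the total variance if it breaches the threshold, else None."""
    budget = sum(i.budget for i in items)
    variance = sum(i.actual for i in items) - budget
    if budget > 0 and variance / budget > threshold_pct:
        return variance
    return None

def investigate(items, total_variance):
    """Investigate: rank departments by their share of the total variance."""
    drivers = [(i.department, i.actual - i.budget) for i in items]
    drivers.sort(key=lambda d: abs(d[1]), reverse=True)
    return [(name, var, var / total_variance) for name, var in drivers]

def alert(items, threshold_pct=0.10):
    """Alert: build a message that carries the explanation with it."""
    variance = detect(items, threshold_pct)
    if variance is None:
        return None
    budget = sum(i.budget for i in items)
    top_name, _, top_share = investigate(items, variance)[0]
    return (f"OpEx exceeded budget by {variance / budget:.0%} (${variance:,.0f}), "
            f"driven primarily by {top_name} ({top_share:.0%} contribution)")

# Illustrative figures only (assumed, not from a real ledger).
opex = [
    LineItem("Sales & Marketing", 5_000_000, 5_975_000),
    LineItem("R&D", 4_000_000, 4_300_000),
    LineItem("G&A", 3_500_000, 3_725_000),
]
print(alert(opex))
```

The watch phase would simply call `alert` on a schedule. A production agent would also decompose across accounts and time periods rather than a single dimension.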

2. What are AI agents in finance?

AI agents in finance are autonomous software systems that perform complete analytical workflows without human intervention. Unlike chatbots that respond to questions, agents take initiative—monitoring KPIs, investigating variances, and delivering insights proactively.

Budget monitoring agents watch all cost centers and revenue lines 24/7, detect when actuals deviate from budget by configured thresholds, investigate which sub-accounts or departments drove the variance, and alert finance leaders with a quantified explanation.

Forecast accuracy agents compare each forecast version to actuals as data arrives, identify which products, regions, or assumptions were most wrong, generate recommendations for improving future forecasts, and track forecast bias patterns over time.

Margin monitoring agents track gross margin, EBITDA margin, and operating margin continuously, decompose margin changes into price, cost, mix, and volume effects, alert when margin trends predict future compression, and quantify contribution from each driver.

Anomaly detection agents learn normal patterns for every KPI including revenue, costs, headcount, and efficiency, flag statistical anomalies that don't match historical behavior, investigate whether anomalies are data errors, one-time events, or trend changes, and prioritize anomalies by business impact.

Agents share four key characteristics. They are autonomous, working without being prompted. They are continuous, operating 24/7 rather than only during close week. They are investigative, explaining root causes rather than just flagging issues. And they prioritize, surfacing high-impact issues rather than every minor fluctuation.

3. When should finance teams use conversational analytics versus agentic analytics?

The choice between conversational and agentic depends on the question type and urgency. Use conversational analytics for known questions you need answered now—investigating a specific variance the CFO flagged, preparing analysis for tomorrow's board meeting, or exploring why a cost center exceeded budget. You ask, you get instant answers, you drill down as needed.

Use agentic analytics for unknown questions you don't know to ask—continuous monitoring that catches budget overruns before they compound, detecting margin erosion across hundreds of products simultaneously, or surfacing forecast accuracy issues you wouldn't discover until quarterly review.

The practical pattern most finance teams follow is conversational for reactive investigation and meeting prep, agentic for proactive monitoring and early warning. During monthly close, an FP&A analyst might use conversational analytics to answer 10-15 ad-hoc questions while agentic alerts surface 2-3 issues that would have otherwise gone unnoticed.

Both capabilities work from the same governed data and semantic layer, so switching between them is seamless. The question isn't which to use—it's recognizing which situations call for each approach.

4. How is AI changing FP&A?

AI is changing FP&A in two ways. It automates mechanical work such as data collection, variance calculation, root cause investigation, and narrative generation. It also enables a kind of proactive intelligence that wasn't possible before, through continuous monitoring, early warning, and predictive alerts.

Traditional FP&A workflow spans multiple weeks. Week 1 of close involves collecting data from ERP, planning tools, and operational systems. Week 2 means investigating variances manually in Excel. Week 3 requires writing PowerPoint commentary explaining findings. Week 4 involves presenting to leadership, who ask follow-up questions requiring another analysis cycle.

AI-powered FP&A workflow compresses this dramatically. Day 1 sees data automatically unified from all sources. Day 2 has AI investigate variances and generate commentary automatically. Day 3 involves FP&A reviewing AI-generated analysis and adding strategic context. Day 4 means presenting to leadership with instant ability to answer follow-up questions.

AI enables four things that weren't possible before.

Continuous monitoring instead of monthly snapshots: Traditional FP&A operates on monthly cycles, while AI agents monitor daily or weekly and catch issues between formal reporting periods.

Comprehensive driver analysis instead of hypothesis testing: Traditional variance analysis tests one hypothesis at a time ("Was it volume? Pricing? Mix?"), while AI tests all drivers simultaneously in seconds.

Proactive alerts instead of reactive dashboards: Traditional BI waits for you to check dashboards, while AI agents alert you when meaningful changes occur.

Instant answers instead of analyst queues: Traditional FP&A has analyst bottlenecks for ad-hoc requests, while conversational AI delivers answers in seconds.

The fundamental shift is that FP&A moves from spending 80% of time on data wrangling and 20% on insights to the reverse—with AI handling mechanical work and humans focusing on strategic interpretation.

5. What is proactive analytics in finance?

Proactive analytics means the system surfaces insights and alerts automatically—you don't have to know what to look for or remember to check dashboards. AI agents monitor continuously and tell you when something meaningful happens.

In the reactive, traditional approach, you check the budget variance dashboard weekly, notice that cost center X is 10% over budget, investigate manually to determine what drove it and when it started, and then decide whether to course-correct or accept the overrun.

In the proactive, agentic approach, an AI agent monitors budget vs. actual daily, detects that cost center X's trajectory indicates a 15% overrun by month-end, and investigates automatically, finding that 60% is driven by unplanned headcount and 40% by SaaS spend. You receive an alert 2 weeks before month-end: "Cost center X trending 15% over budget. Root cause: +3 FTEs vs. plan. Recommend: Review hiring approvals."
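One simple way to turn spend-to-date into a month-end trajectory is a linear run-rate projection. The sketch below is an assumption about how such a check could work, not a description of any specific product. The function name and the figures are hypothetical, picked so that day 16 of a 30-day month projects the 15% overrun from the example above.

```python
def projected_overrun(actual_to_date, budget_full_period, days_elapsed, days_in_period):
    """Extrapolate spend linearly to period end and return the projected
    overrun as a fraction of the full-period budget (negative = under budget)."""
    if days_elapsed <= 0 or budget_full_period <= 0:
        raise ValueError("days_elapsed and budget_full_period must be positive")
    projected_spend = actual_to_date / days_elapsed * days_in_period
    return (projected_spend - budget_full_period) / budget_full_period

# Hypothetical cost center: $300K monthly budget, $184K spent by day 16 of 30.
overrun = projected_overrun(184_000, 300_000, days_elapsed=16, days_in_period=30)
if overrun > 0.10:
    print(f"Cost center X trending {overrun:.0%} over budget")
```

A linear projection ignores seasonality and lumpy items such as annual renewals, so a real agent would likely combine it with historical spend patterns.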

Proactive monitoring matters in finance for four reasons.

Budget risks are caught early: Traditional monthly reviews catch issues after they've compounded, while proactive monitoring catches trajectory changes the week they start, when intervention is still possible.

No analyst bottleneck: Traditional analysis requires submitting requests to the FP&A team, while proactive agents work continuously without human prompting.

Leadership is informed in real time: With traditional reporting, the CFO learns about issues when analysts do, during close. With proactive alerts, the CFO knows as soon as meaningful changes occur.

Coverage scales beyond human capacity: Finance teams can manually monitor 10-15 key metrics, while AI agents can monitor hundreds of KPIs, cost centers, and products simultaneously.

6. Why can't traditional dashboards provide proactive finance intelligence?

Traditional dashboards are fundamentally passive—they display data and wait for someone to look. This creates three gaps that prevent proactive intelligence.

The attention gap means dashboards require humans to check them regularly, remember what normal looks like, and notice when something changes. With 40+ dashboards across finance, no one can maintain that vigilance consistently. Issues hide in plain sight until someone happens to click the right filter at the right time.

The investigation gap means when dashboards do surface a problem, they only show that something changed—not why. "EBITDA down 5%" is visible, but determining whether it was pricing, volume, mix, or cost overrun requires exporting to Excel and testing hypotheses manually. By the time investigation completes, the window for intervention may have closed.

The scale gap means humans can effectively monitor 10-15 metrics. AI agents can monitor hundreds of KPIs, cost centers, and products simultaneously, detecting issues across dimensional intersections that would never surface in aggregate dashboards.

Agentic analytics closes all three gaps by monitoring without human attention, investigating automatically when changes occur, and scaling to comprehensive coverage without scaling headcount. The dashboard remains useful for visualization and executive reporting—but it's no longer the primary mechanism for detecting problems.

7. What does an AI-augmented FP&A team look like?

An AI-augmented FP&A team uses AI to handle mechanical work including data collection, variance calculation, root cause investigation, and narrative generation, while humans focus on strategic interpretation, forward-looking planning, and stakeholder management.

Traditional FP&A team structure includes 2-3 senior analysts handling strategic planning, forecasting, and CFO support, and 4-6 analysts handling monthly close, variance analysis, and ad-hoc requests. Time allocation runs 70% data wrangling and 30% insights.

AI-augmented FP&A team structure maintains the same headcount but with different allocation. AI handles data unification, variance calculation, root cause investigation, narrative generation, and continuous monitoring. Humans handle strategic interpretation, forward-looking scenarios, stakeholder relationships, and process design. Time allocation shifts to 30% validation and review with 70% strategic work.

The day-to-day changes are substantial. In a traditional close week, Monday through Wednesday go to pulling data, reconciling, and calculating variances, and Thursday and Friday go to investigating variances manually. The following week is spent writing commentary and building slides. In an AI-augmented close week, AI has already calculated variances and investigated drivers overnight by Monday. On Tuesday, FP&A reviews the AI-generated analysis and commentary. On Wednesday, the team adds strategic context and forward-looking perspective. On Thursday, it presents to leadership with instant follow-up capability.

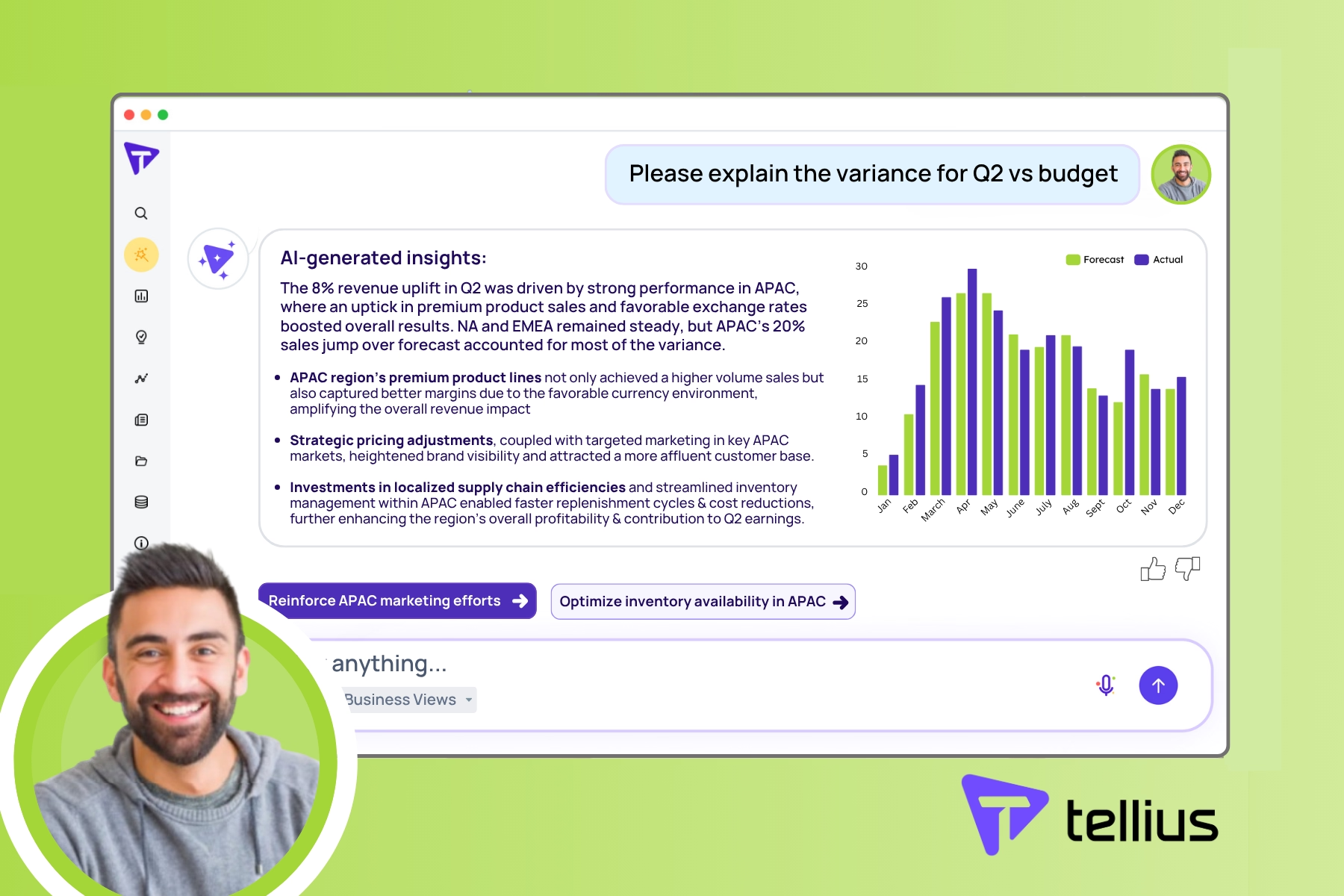

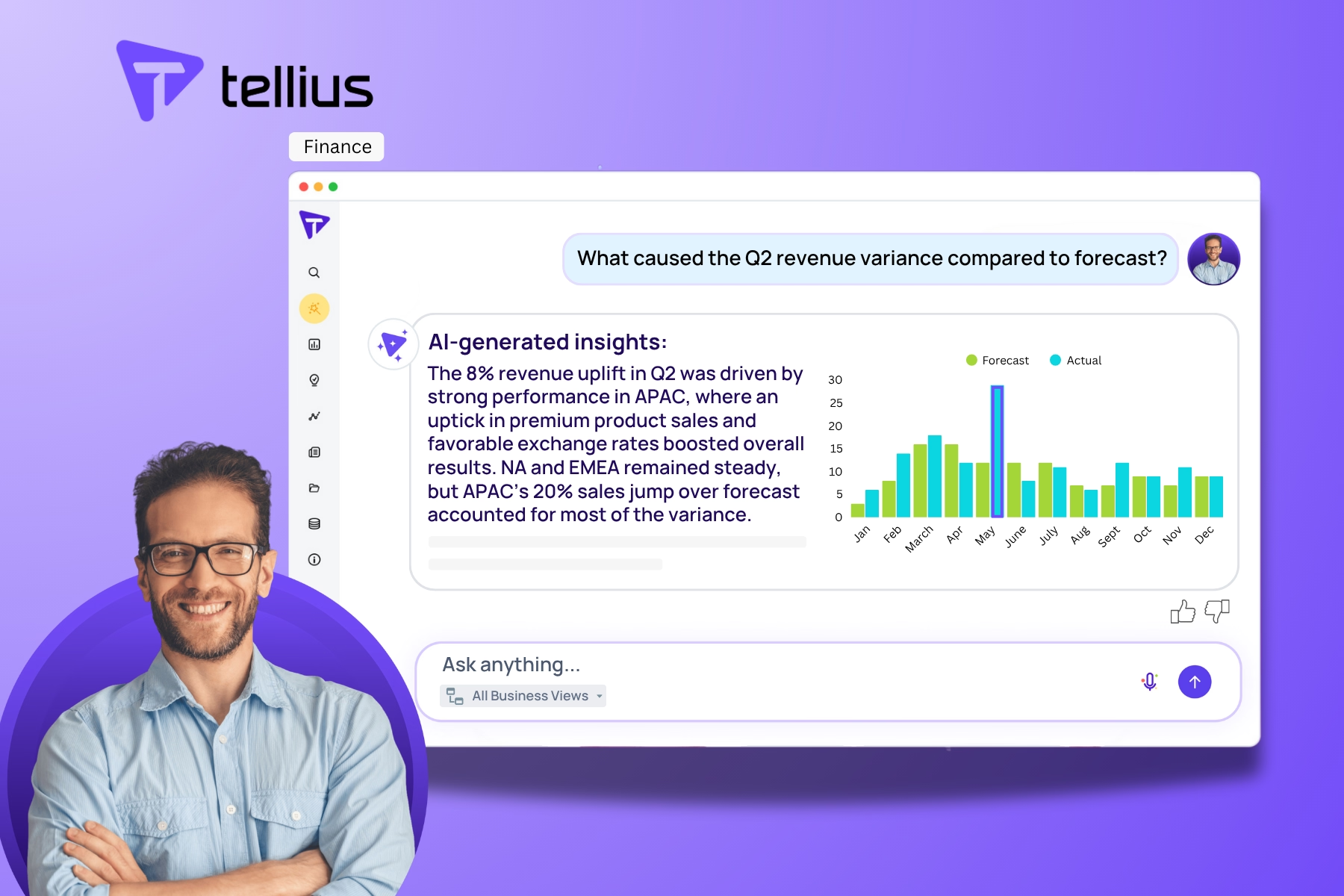

For a traditional ad-hoc request, the CFO asks "Why is EBITDA down?" and an analyst spends 4-6 hours investigating before delivering an answer the next day. With an AI-augmented team, the CFO asks the same question and the analyst asks the AI conversationally. The AI delivers the root cause in seconds, and the analyst adds business context immediately.

The shift is that FP&A teams become strategists and interpreters, not data wranglers and Excel mechanics.

8. How can AI improve financial analysis for finance teams?

AI improves financial analysis by automating mechanical tasks including data collection, variance calculation, and driver decomposition, enabling comprehensive coverage by testing all hypotheses simultaneously, and accelerating time-to-insight from days to seconds.

AI improves analysis along six dimensions.

Speed: Investigating "Why is EBITDA below plan?" traditionally takes 3-5 days. AI decomposes the variance across all drivers and ranks them by contribution in seconds.

Comprehensiveness: Analysts manually test 3-5 hypotheses around volume, pricing, mix, and timing. AI tests dozens of drivers simultaneously across all dimensions.

Consistency: Different analysts use different methods and produce different answers. With AI, the same variance produces the same driver decomposition every time.

Scalability: One analyst investigates one variance at a time. Agents investigate hundreds of variances simultaneously.

Documentation: Analysis traditionally lives in an analyst's head or in scattered Excel files. With AI, every analysis is logged with a full audit trail and reproducible methodology.

Proactivity: Traditional analysis happens only when someone asks for it. Agents detect and investigate meaningful changes automatically.

What AI doesn't improve includes strategic judgment about what findings mean, forward-looking scenario planning requiring business intuition, stakeholder management and communication, and process design and workflow optimization.

The best financial analysis combines AI capabilities of speed, scale, and consistency with human judgment around strategy, context, and interpretation.

Part 2: Implementation & Workflows

9. What tasks in FP&A can be automated end-to-end by AI agents?

AI agents can fully automate repetitive analytical workflows that follow consistent logic—specifically variance monitoring, budget tracking, forecast accuracy measurement, and narrative generation for standard reports.

Monthly budget variance monitoring is fully automatable. The agent monitors all cost centers daily, detects variances exceeding thresholds such as greater than 10% over budget, investigates root cause including which accounts, which timing, and whether one-time or trend, generates narrative like "Sales & Marketing 12% over budget driven by unplanned headcount," and alerts finance leader with summary and detail link. Human involvement consists of reviewing findings, adding strategic context, and deciding on action.

Forecast accuracy tracking is fully automatable. The agent compares each forecast version to actuals as data arrives, calculates error by product, region, and time period, identifies systematic biases around being always over or always under, ranks worst-performing forecast areas, and generates recommendations for process improvement. Human involvement consists of validating findings and adjusting forecast methodology.
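The error and bias calculations in forecast accuracy tracking can be sketched as follows. The metric choices here are assumptions: MAPE (mean absolute percentage error) for accuracy and mean signed percentage error for bias. The source does not say which metrics an agent uses, and the function names and sample records are hypothetical.

```python
def forecast_errors(records):
    """records: iterable of (segment, forecast, actual).
    Returns {segment: {"mape": ..., "bias": ...}} where a positive bias
    means the forecast was systematically too high."""
    by_segment = {}
    for segment, forecast, actual in records:
        if actual == 0:
            continue  # percentage error is undefined when actual is zero
        by_segment.setdefault(segment, []).append((forecast - actual) / actual)
    return {
        segment: {
            "mape": sum(abs(e) for e in errs) / len(errs),
            "bias": sum(errs) / len(errs),
        }
        for segment, errs in by_segment.items()
    }

def worst_segments(summary, n=3):
    """Rank segments from least to most accurate by MAPE and keep the worst n."""
    return sorted(summary, key=lambda s: summary[s]["mape"], reverse=True)[:n]

# Illustrative records: Product A is always over-forecast, Product B is unbiased.
records = [("Product A", 110, 100), ("Product A", 120, 100),
           ("Product B", 95, 100), ("Product B", 105, 100)]
summary = forecast_errors(records)
print(worst_segments(summary, n=1))
```

Separating bias from absolute error is what lets an agent distinguish "always over" or "always under" segments from segments that are merely noisy.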

Anomaly detection and investigation is fully automatable. The agent learns normal patterns for every KPI, flags statistical outliers automatically, investigates whether anomaly is data error, one-time event, or trend, and quantifies business impact. Human involvement consists of confirming root cause and deciding if corrective action is needed.

Standard monthly commentary generation is fully automatable. The agent converts variance drivers into plain-English narratives, produces executive summary, operational detail, and board version, and updates template-based monthly finance pack. Human involvement consists of reviewing accuracy and adding forward-looking perspective.

Tasks not fully automatable that require judgment include strategic planning and long-range forecasting, scenario modeling with business assumptions, stakeholder negotiation on budget allocation, and process redesign and workflow optimization.

10. How do AI agents handle multi-step financial analysis?

AI agents handle multi-step analysis by breaking workflows into sequential tasks, maintaining context across steps, and applying business logic to determine next actions—similar to how an experienced analyst would approach complex problems.

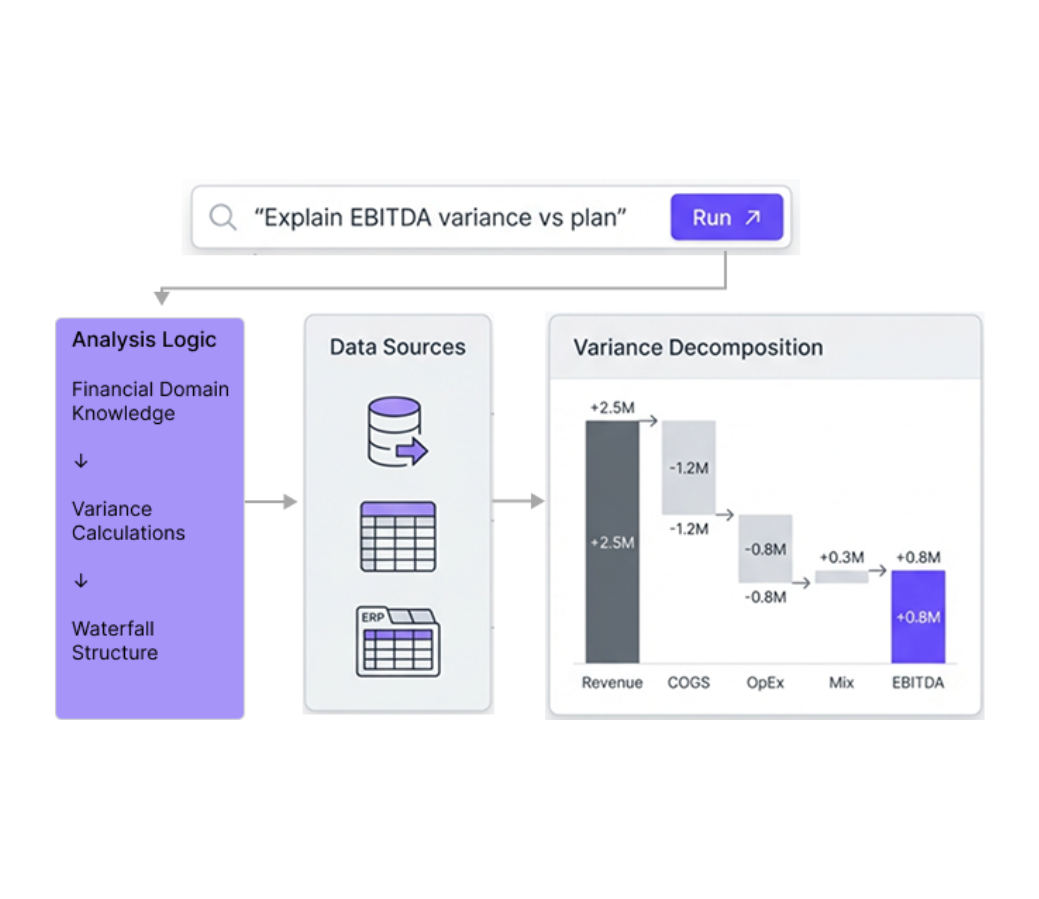

An example of investigating an EBITDA variance shows this process.

Step 1, identify the variance: The agent detects that EBITDA came in 5% below forecast and quantifies the gap at -$2.5M vs. plan.

Step 2, decompose into major components: The agent breaks the gap down into revenue, COGS, and OpEx, finding Revenue -$1M (40%), OpEx +$2M (80%), and a favorable COGS variance of $500K (a 20% offset).

Step 3, investigate OpEx as the largest driver: The agent decomposes OpEx by department, finding Sales & Marketing +$1.5M (75%) and R&D +$500K (25%).

Step 4, investigate Sales & Marketing: The agent breaks the variance down by account category, finding headcount costs +$1M (67%), SaaS tools +$300K (20%), and travel +$200K (13%).

Step 5, investigate the headcount variance: The agent compares actual FTEs vs. planned FTEs, finding +8 FTEs above plan in the Enterprise sales team.

Step 6, generate findings: The agent assembles a multi-level explanation: "EBITDA declined 5% vs. forecast primarily due to higher OpEx (80% contribution), concentrated in Sales & Marketing headcount (67% of the Sales & Marketing variance), driven by +8 unplanned FTEs in Enterprise sales team."

Step 7, check for context: The agent reviews pipeline data for the Enterprise segment, finds that pipeline coverage improved from 2.1x to 2.8x, and adds context: "Higher hiring correlates with improved pipeline coverage, suggesting positive ROI trajectory."

How agents maintain context includes tracking which paths have been investigated, remembering findings from previous steps, applying finance-specific logic such as investigating largest driver first, and stopping when sufficient explanation is found or no more material drivers remain.
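The "largest driver first" logic and the stopping rule can be sketched as a walk down a variance tree. The tree structure, the `unfavorable` field (positive means the item hurt EBITDA), and the materiality cutoff are all assumptions for illustration. The dollar figures are the ones from the EBITDA example above.

```python
# Variance tree from the EBITDA example; "unfavorable" is in dollars,
# and a negative value (COGS) is a favorable offset.
tree = {
    "name": "EBITDA", "unfavorable": 2_500_000,
    "children": [
        {"name": "Revenue", "unfavorable": 1_000_000},
        {"name": "OpEx", "unfavorable": 2_000_000, "children": [
            {"name": "Sales & Marketing", "unfavorable": 1_500_000, "children": [
                {"name": "Headcount", "unfavorable": 1_000_000},
                {"name": "SaaS tools", "unfavorable": 300_000},
                {"name": "Travel", "unfavorable": 200_000},
            ]},
            {"name": "R&D", "unfavorable": 500_000},
        ]},
        {"name": "COGS", "unfavorable": -500_000},
    ],
}

def drill_down(node, materiality=100_000):
    """Follow the largest unfavorable driver at each level. Stop when there
    are no children left or the largest driver is below materiality.
    Returns [(name, dollars, share_of_parent_variance), ...]."""
    path = []
    while node.get("children") and node["unfavorable"] != 0:
        largest = max(node["children"], key=lambda c: c["unfavorable"])
        if largest["unfavorable"] < materiality:
            break  # no material driver remains at this level
        share = round(largest["unfavorable"] / node["unfavorable"], 2)
        path.append((largest["name"], largest["unfavorable"], share))
        node = largest  # context carries forward: the next step starts here
    return path

for name, dollars, share in drill_down(tree):
    print(f"{name}: ${dollars:,} ({share:.0%} of parent variance)")
```

Each share is relative to its immediate parent, which is why headcount shows as 67% of the Sales & Marketing variance rather than of total OpEx.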

11. How do I ensure AI recommendations are auditable and compliant?

Ensuring auditability requires full transparency into how AI agents reached conclusions—capturing data sources, calculations performed, assumptions made, and logic applied at every step.

What makes AI analysis auditable starts with data lineage where every number traces back to source system and specific record, transformations are documented including aggregations, joins, and calculations, and version control exists on source data covering which GL close and which forecast version.

Calculation transparency ensures variance formulas are visible such as (Actual - Budget) / Budget, driver decomposition methodology is documented, and statistical tests and confidence levels are shown.

Assumption logging means when agent makes choices, rationale is captured, such as "Investigated top 5 drivers accounting for 90% of variance" and "Excluded one-time items below $50K threshold."

Complete audit trail includes timestamp of analysis, user who triggered or indication of automated agent, data sources queried, logic path followed, and results produced.

Reproducibility means same inputs plus same date equals same outputs, analysis can be re-run to verify findings, and historical analyses are preserved for comparison.
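A small sketch of what a reproducible audit record might look like, using the variance formula above. The record layout, field names, and the idea of fingerprinting inputs and results with SHA-256 are assumptions for illustration, not a compliance standard or a specific product's format.

```python
import hashlib
import json
from datetime import datetime, timezone

FORMULA = "(actual - budget) / budget"

def variance_pct(actual, budget):
    """Variance as a fraction of budget, per the documented formula."""
    return (actual - budget) / budget

def audit_record(actual, budget, source, triggered_by="automated-agent"):
    """Build an audit entry. The fingerprint covers inputs, formula, and
    result (not the timestamp), so re-running on the same data must
    reproduce the same fingerprint."""
    inputs = {"actual": actual, "budget": budget, "source": source}
    result = {"variance_pct": variance_pct(actual, budget)}
    payload = json.dumps(
        {"inputs": inputs, "formula": FORMULA, "result": result}, sort_keys=True
    )
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "triggered_by": triggered_by,
        "inputs": inputs,
        "formula": FORMULA,
        "result": result,
        "fingerprint": hashlib.sha256(payload.encode("utf-8")).hexdigest(),
    }

# Hypothetical source label; a real record would reference the GL close version.
first = audit_record(1_120_000, 1_000_000, source="GL close 2024-03 v2")
rerun = audit_record(1_120_000, 1_000_000, source="GL close 2024-03 v2")
print(first["fingerprint"] == rerun["fingerprint"])
```

Comparing fingerprints gives auditors a quick check that a re-run matched the original before they inspect the detailed lineage.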

Compliance implications are significant. For SOX compliance, AI-generated variance analysis is auditable, same definitions are used by all users preventing shadow spreadsheets, and controls exist on data access and calculation methodology. For internal audit, teams can validate that variance drivers are correctly calculated, verify that agent logic follows documented methodology, and trace any number in a report back to source.

Best practices include defining calculation methodology upfront with approval by finance leadership, documenting agent logic and thresholds in writing, requiring human review of agent-generated recommendations, and maintaining ability to override AI findings with documented rationale.

12. What guardrails should finance teams set for AI-driven workflows?

Finance guardrails ensure AI agents operate within acceptable boundaries—preventing incorrect recommendations, maintaining data privacy, and requiring human approval for high-impact actions.

Approval thresholds should specify that variances below $50K allow agent auto-investigation and reporting, variances from $50K to $500K require agent investigation with manager review before alerting leadership, and variances above $500K require agent investigation with CFO review before taking any action.

Data access controls should specify that agents can read financial data including actuals, budget, and forecast, agents cannot write to source systems or modify records, agents cannot access confidential employee compensation data without explicit permission, and different agent permissions apply to different roles such as analyst vs. CFO.

Alert frequency limits should establish maximum 5 high-priority alerts per day to prevent notification fatigue, require agents to rank alerts by business impact not just statistical significance, and mandate 48-hour cooldown between alerts on same issue.
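The three frequency limits above (a daily cap, ranking by business impact, and a 48-hour cooldown per issue) could be combined roughly like this. The class name and the in-memory state are assumptions for the sketch. A real deployment would persist state and define "business impact" in its own terms.

```python
from datetime import datetime, timedelta

class AlertThrottle:
    """Caps alerts per calendar day and suppresses repeats on the same issue."""

    def __init__(self, daily_cap=5, cooldown=timedelta(hours=48)):
        self.daily_cap = daily_cap
        self.cooldown = cooldown
        self._last_sent = {}     # issue key -> datetime of the last alert
        self._sent_per_day = {}  # date -> number of alerts sent that day

    def select(self, candidates, now):
        """candidates: list of (issue_key, business_impact).
        Returns the issue keys to send now, highest impact first."""
        sent = []
        today = now.date()
        for key, _impact in sorted(candidates, key=lambda c: c[1], reverse=True):
            if self._sent_per_day.get(today, 0) >= self.daily_cap:
                break  # daily cap reached; lower-impact issues wait
            last = self._last_sent.get(key)
            if last is not None and now - last < self.cooldown:
                continue  # still cooling down on this issue
            self._last_sent[key] = now
            self._sent_per_day[today] = self._sent_per_day.get(today, 0) + 1
            sent.append(key)
        return sent

throttle = AlertThrottle(daily_cap=2)
issues = [("travel-overrun", 100), ("headcount-overrun", 300), ("saas-overrun", 200)]
print(throttle.select(issues, datetime(2024, 1, 1, 9)))
```

Sorting before applying the cap is the design point: when the cap bites, the alerts that get dropped are the lowest-impact ones.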

Explanation requirements should ensure every agent recommendation includes a quantified root cause, so a recommendation like "Increase OpEx budget" must specify "Due to unplanned +8 FTEs with estimated annual cost $2M," and no black-box recommendations are permitted.

Human review checkpoints should require board-facing commentary to always be reviewed by CFO before presentation, budget reforecasts to be reviewed by FP&A director before submission, and automated narratives to be reviewed by analyst before distribution.

Version control should ensure agent logic is versioned and documented, changes to agent behavior require approval, and ability to roll back to previous agent version exists if issues arise.

Override capability should allow humans to override agent recommendations with documented rationale, require system to log all overrides for pattern analysis, and trigger review of agent logic when frequent overrides occur.

13. What are the best practices for deploying agentic finance systems?

Deploying agentic finance systems requires starting narrow with one use case and one team, proving value quickly, then expanding methodically—not attempting enterprise-wide transformation on day one.

Phase 1, Pilot (Weeks 1-4): Scope to one high-value use case, such as monthly budget variance monitoring for the top 10 cost centers. The team is 2-3 FP&A analysts plus 1 finance leader. The goal is to prove agents can detect variances and generate useful alerts. Success means catching at least 3 budget issues that would have been missed in the traditional monthly review.

Phase 2, Validate (Weeks 5-8): Expand scope to all cost centers and add forecast accuracy monitoring. The full FP&A team is involved. The goal is to establish that agents improve on the baseline in speed, accuracy, and coverage. Success means reducing variance investigation time by 50% or more.

Phase 3, Refine (Weeks 9-12): Tune alert thresholds, add narrative generation, and customize for the team's workflow. FP&A and finance leadership are involved. The goal is to integrate agents into the monthly close process. Success means reducing the close cycle from 10 days to 6 days.

Phase 4, Scale (Weeks 13-20): Add margin monitoring, EBITDA bridge generation, and automated commentary. The entire finance organization is involved. The goal is to have agents handle 80% of mechanical analysis work. Success means freeing 30-40% of FP&A capacity for strategic work.

Four factors are critical to success.

Start with pain, not technology: Choose use cases where the manual process is most painful, and measure a baseline of how long variance analysis takes today.

Get finance leadership buy-in: The CFO must champion the change and the FP&A director should own deployment and metrics. Deployment should not be delegated to IT or the analytics team alone.

Train on actual workflows: Use real budget data, real variances, and real thresholds rather than sanitized demo data, so agents learn from the finance team's actual questions and priorities.

Measure and communicate wins: Track time saved, issues caught early, and capacity freed. Share success stories across the finance team, and adjust agent logic based on feedback.

14. What's the best way to continuously monitor key financial KPIs and alert me when something looks off?

Continuous KPI monitoring requires defining thresholds for when to alert, configuring agent logic for what to investigate, and establishing notification protocols for who gets alerted and how urgently.

The setup process begins with defining monitored KPIs including revenue by segment across product, region, and channel, EBITDA and margin percentage, OpEx by department and major category, cash flow and liquidity metrics, forecast accuracy by product and region, and headcount and compensation vs. plan.

Alert thresholds come in three types. Variance thresholds alert when actuals deviate from budget or forecast by a set amount, for example when any cost center exceeds budget by 10% or $50K. Trend thresholds alert when the trajectory predicts a future breach, for example when the OpEx run rate indicates more than a 5% overrun by quarter-end. Anomaly thresholds alert when a metric deviates from its historical pattern, for example when margin drops 3 or more standard deviations below its 12-month average.
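The variance and anomaly threshold types can each be expressed as a short check. This is a sketch under stated assumptions: the function names are hypothetical, the 12 monthly margin values are made up, and the anomaly test uses a plain sample standard deviation from Python's `statistics` module rather than any particular vendor's model.

```python
from statistics import mean, stdev

def breaches_budget(actual, budget, pct=0.10, amount=50_000):
    """Variance threshold: over budget by at least `pct` or at least `amount`."""
    over = actual - budget
    return over >= amount or (budget > 0 and over / budget >= pct)

def is_margin_anomaly(history, current, z_threshold=3.0):
    """Anomaly threshold: current margin sits z_threshold or more sample
    standard deviations below the mean of the trailing history."""
    if len(history) < 2:
        return False  # not enough data to estimate a spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return False  # flat history; a z-score is undefined
    return (mu - current) / sigma >= z_threshold

# Twelve months of illustrative gross margin values averaging 40%.
margins = [0.40, 0.41, 0.39, 0.40, 0.42, 0.38, 0.40, 0.41, 0.39, 0.40, 0.41, 0.39]
print(is_margin_anomaly(margins, current=0.36))   # well below the usual range
print(breaches_budget(actual=1_060_000, budget=1_000_000))
```

The trend threshold is a projection problem rather than a comparison, so it would need a run-rate or forecasting step before a check like `breaches_budget` is applied.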

Configuring investigation logic means when threshold is crossed, agent automatically decomposes variance into drivers including which accounts and which timing, ranks drivers by contribution, checks for data quality issues, and generates plain-English explanation.

The notification protocol sets three priority levels. High priority (variance above $500K or above 20%) sends an immediate Slack message or email to the CFO and FP&A director. Medium priority (variance from $50K to $500K, or 10-20%) goes into a daily digest for the FP&A team. Low priority (variance below $50K or below 10%) goes into a weekly summary report. All notifications include the headline variance, the top 3 drivers quantified, and a link to the full analysis.
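The priority tiers above map naturally onto a small routing function. The text does not say how to resolve cases where the dollar and percentage criteria disagree, so this sketch assumes the higher tier wins. The route descriptions and names are likewise hypothetical.

```python
# Hypothetical routing table: priority -> (channel, recipients, timing)
ROUTES = {
    "high": ("Slack or email", ["CFO", "FP&A director"], "immediate"),
    "medium": ("digest", ["FP&A team"], "daily"),
    "low": ("summary report", ["FP&A team"], "weekly"),
}

def notification_priority(variance_amount, variance_pct):
    """Classify a variance; when the dollar and percentage tests disagree,
    the higher tier wins (an assumption, not stated in the protocol)."""
    amount, pct = abs(variance_amount), abs(variance_pct)
    if amount > 500_000 or pct > 0.20:
        return "high"
    if amount >= 50_000 or pct >= 0.10:
        return "medium"
    return "low"

def route(variance_amount, variance_pct):
    """Return the priority along with where and when the alert goes."""
    priority = notification_priority(variance_amount, variance_pct)
    channel, recipients, timing = ROUTES[priority]
    return {"priority": priority, "channel": channel,
            "recipients": recipients, "timing": timing}

print(route(120_000, 0.08))
```

Keeping the routing table as data rather than code makes it easier to version and review threshold changes.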

Review and tune: Expect alert fatigue in the first month from too many alerts. Tune thresholds upward to reduce noise, add filters that exclude one-time items below a threshold and require variance in 2 or more consecutive periods, and target 3-5 actionable alerts per week rather than 20 alerts per day.

15. How do I set up proactive alerts for budget variances or margin changes?

Setting up proactive alerts requires configuring monitoring rules, defining escalation paths, and testing alert logic to eliminate noise while catching meaningful issues.

Configuration steps start with choosing what to monitor including budget vs. actual by cost center with weekly or daily check, margin percentage by product or segment with daily check, and run-rate trending to determine if current pace will breach budget by period-end.

Defining alert conditions can use simple threshold such as "Alert if Sales & Marketing exceeds budget by $100K," percentage threshold such as "Alert if any cost center exceeds budget by 15%," trend-based such as "Alert if OpEx trajectory indicates more than 10% overrun by quarter-end," or combination such as "Alert if variance exceeds $50K AND exceeds 10%."

Setting investigation depth can be surface level that just flags variance exists, one level deep that breaks down by sub-account such as headcount vs. SaaS vs. travel, or root cause that investigates all the way to driver such as +5 unplanned FTEs in Enterprise sales. Recommended approach is root cause for high-priority alerts and surface level for low-priority.

Configuring notification covers who receives alerts with FP&A director for all alerts above $100K and analysts for below $100K, how alerts arrive with Slack for urgent and email digest for routine, when alerts arrive with immediately for high priority and daily batch for medium priority, and content including headline plus top 3 drivers plus link to full detail.

Testing with historical data involves running the alert logic against the last 3 months of data, verifying it would have caught known issues, checking for false positives (alerts on things that weren't actually problems), and tuning thresholds to balance sensitivity vs. noise.
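A backtest of this kind can be sketched by replaying a rule over past variances and comparing what fired against issues the team already knows were real. The data layout, the function name, and the sample history are assumptions. The rule shown is the combination condition quoted earlier (variance above $50K AND above 10%).

```python
def backtest(alert_rule, history, known_issues):
    """history: list of (period, cost_center, variance_amount, variance_pct).
    known_issues: set of (period, cost_center) pairs that were real problems.
    Returns counts plus precision and recall for the rule."""
    fired = {(p, cc) for p, cc, amt, pct in history if alert_rule(amt, pct)}
    true_positives = fired & known_issues
    return {
        "caught": len(true_positives),
        "missed": len(known_issues - fired),
        "false_positives": len(fired - known_issues),
        "precision": len(true_positives) / len(fired) if fired else 0.0,
        "recall": len(true_positives) / len(known_issues) if known_issues else 0.0,
    }

def combo_rule(amount, pct):
    """Combination condition: variance exceeds $50K AND exceeds 10%."""
    return amount > 50_000 and pct > 0.10

# Three months of illustrative history (made-up figures).
history = [
    ("2024-01", "Sales & Marketing", 120_000, 0.15),
    ("2024-01", "R&D", 60_000, 0.05),
    ("2024-02", "Sales & Marketing", 80_000, 0.12),
    ("2024-02", "G&A", 55_000, 0.11),
    ("2024-03", "R&D", 90_000, 0.09),
]
known = {("2024-01", "Sales & Marketing"), ("2024-02", "Sales & Marketing"),
         ("2024-03", "R&D")}
print(backtest(combo_rule, history, known))
```

Low recall suggests thresholds are too strict, while low precision suggests they are too loose. Tuning means re-running the backtest with different rules until both are acceptable.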

Launch and iterate: Expect too many alerts in Week 1 and raise thresholds in response. Calibrate in Weeks 2-4 based on which alerts led to action. From Month 2 onward, alert volume should stabilize at 3-5 meaningful alerts per week.

16. How can AI reduce ad-hoc reporting requests for FP&A teams?

AI reduces ad-hoc requests by enabling business stakeholders to self-serve answers through conversational analytics—eliminating the "submit a ticket to FP&A" bottleneck.

In the traditional ad-hoc workflow, a business leader asks "Why did Product A revenue decline?", the request goes to the FP&A team, an analyst spends 4-6 hours investigating, and the answer arrives the next day, or the next week if the queue is long.

In the AI-powered self-service workflow, the business leader asks conversational AI the same question, and the AI decomposes the variance in seconds: "Primarily volume (-$2M, 60%), partially pricing (-$1M, 30%)." The leader drills down with "Why did volume decline?" and the AI responds: "Concentrated in Northeast region (-$1.5M), driven by competitive pressure from Competitor X." Total time is 2 minutes instead of 1 day.

The requests AI takes over fall into three groups. "Show me" requests, about 80% of volume, include "Show revenue by segment for Q4," "Show cost center variances for November," and "Show headcount by department vs. plan." AI handles these with instant data retrieval from a natural language query. "Why" requests, about 15% of volume, include "Why did margin decline in Q3?", "Why is Sales & Marketing over budget?", and "Why did forecast accuracy drop?" AI handles these with automated root cause decomposition. "What if" requests, about 5% of volume, include "What if we increase pricing 5%" and "What if we cut OpEx 10%." AI can handle simple scenario modeling, but complex scenarios with interdependencies still require a human.

AI does not eliminate strategic planning and long-range forecasting, process design and workflow optimization, stakeholder negotiation on budget allocation, or custom analysis requiring novel methodologies.

Implementation involves granting business leaders access to conversational AI, training them on how to ask questions effectively, monitoring which questions are asked most frequently, and creating saved queries and templates for common requests.

The expected impact is a 60-80% reduction in the FP&A ad-hoc request queue.

17. Can AI agents automatically investigate root causes when KPIs change?

Yes. When agents detect meaningful KPI changes, they automatically investigate root causes by decomposing variances across all relevant dimensions and ranking drivers by contribution—no human prompting required.

Automatic investigation starts with a trigger. The agent monitors EBITDA daily and detects actual EBITDA of $12.5M against a forecast of $13.3M, a 6% shortfall. Because the variance exceeds the 5% threshold, an investigation begins.

The investigation workflow proceeds in steps. Step 1 decomposes the variance into major components: Revenue -$500K (38% of EBITDA variance), COGS +$200K favorable (15% offset), and OpEx +$800K unfavorable (62% of variance).

Step 2 investigates the largest driver, OpEx: Sales & Marketing +$500K (62% of OpEx variance), R&D +$200K (25%), and G&A +$100K (13%).

Step 3 decomposes Sales & Marketing: Headcount +$350K (70% of S&M variance), SaaS tools +$100K (20%), and Travel +$50K (10%).

Step 4 investigates the headcount variance: actual FTEs 58 vs. plan 50 (+8 FTEs), average loaded comp of $175K, and an estimated annual impact of +$1.4M.

Step 5 assembles findings: "EBITDA came in $800K (6%) below forecast, driven primarily by higher OpEx (62% of the variance), most of it in Sales & Marketing (62% of the OpEx variance). S&M variance is concentrated in headcount costs (70% of S&M variance), reflecting +8 unplanned FTEs vs. plan. At current run rate, estimated full-year impact is +$1.4M unless hiring pace moderates."
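The drill-down in Steps 2 and 3 can be sketched as a loop that ranks drivers at each level and follows the largest one. This is a simplified illustration rather than how any particular platform implements it: the `variance_tree` structure and function names are hypothetical, the figures are the OpEx and S&M breakdowns from the example, and shares are computed on the assumption that all components at a level have the same sign.

```python
def rank_drivers(components):
    """Return (name, amount, share_of_total) sorted by absolute contribution."""
    total = sum(components.values())
    ranked = sorted(components.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return [(name, amount, amount / total) for name, amount in ranked]


def drill_down(tree, root):
    """Follow the largest driver at each level until no breakdown is left."""
    path, node = [], root
    while node in tree:
        name, amount, share = rank_drivers(tree[node])[0]
        path.append((node, name, amount, share))
        node = name
    return path


# Unfavorable variances in dollars, from Steps 2 and 3 of the example.
variance_tree = {
    "OpEx": {"Sales & Marketing": 500_000, "R&D": 200_000, "G&A": 100_000},
    "Sales & Marketing": {"Headcount": 350_000, "SaaS tools": 100_000, "Travel": 50_000},
}

for parent, driver, amount, share in drill_down(variance_tree, "OpEx"):
    print(f"{parent}: {driver} +${amount:,.0f} ({share:.1%} of {parent} variance)")
```

A production agent would decompose across several dimensions at once and handle offsetting (mixed-sign) components. The single-path version here only shows the rank-then-descend pattern.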

Output delivered includes executive summary of 2-3 sentences, quantified driver breakdown with percentage contribution, drill-down detail available on demand, and recommendations based on findings.

No human involvement required for investigation—only for strategic interpretation and action decisions.

18. What data refresh frequencies do AI agents need for effective finance monitoring?

Data refresh frequency determines how quickly agents can detect and alert on variances—too slow and issues compound before detection, too fast and you waste processing resources on noise.

For budget versus actual monitoring, daily refresh is the minimum effective frequency. Most cost overruns develop over days or weeks, so daily data catches trajectory changes early enough for intervention. Real-time refresh adds cost without meaningful benefit since budget variances rarely require minute-by-minute awareness.

For cash and liquidity monitoring, hourly or real-time refresh may be justified. Cash position can change rapidly, and treasury teams need current awareness for funding decisions. Configure agents to alert on significant intraday movements.

For margin and profitability monitoring, daily refresh typically suffices unless you operate in volatile commodity markets where pricing changes intraday. Most margin compression develops over weeks, making daily detection adequate.

For forecast accuracy tracking, refresh aligned with forecast update cycles is sufficient. If forecasts update weekly, agents should compare actuals to forecast weekly. More frequent comparison adds noise without insight.

Configuration best practices include matching refresh frequency to decision cycle rather than technical capability, starting with daily refresh for most metrics and adjusting based on actual detection needs, and ensuring source systems can support chosen refresh frequency without performance degradation.

19. How do conversational and agentic capabilities work together in practice?

Conversational and agentic capabilities form a continuous workflow where agents detect issues and conversational interfaces enable investigation—each capability strengthening the other.

The typical workflow starts with agentic detection. An AI agent monitoring budget versus actual detects that Sales & Marketing OpEx is trending 12% over budget and automatically investigates, finding headcount additions as the primary driver. The agent sends an alert to the FP&A director with this explanation.

Conversational investigation follows. The FP&A director receives the alert and wants to understand more. Using conversational analytics, they ask "Show me S&M headcount by month versus plan" and instantly see when hiring accelerated. They ask "Which roles were added?" and see the breakdown by job function. They ask "What's the pipeline coverage in Enterprise?" to understand if hiring correlates with business need.

Strategic response completes the cycle. Armed with complete context from conversational exploration, the FP&A director schedules a conversation with the VP of Sales about hiring pace versus pipeline. The discussion is informed by data rather than waiting for monthly close to reveal the variance.

The integration point is the governed semantic layer that both capabilities share. Agent alerts and conversational queries use the same definitions, so "headcount variance" means the same thing in the alert and in follow-up exploration. This eliminates reconciliation confusion when investigating agent findings.

Without conversational capability, agent alerts would require manual Excel investigation. Without agentic capability, issues would wait until someone thought to ask. Together, they create continuous awareness with instant investigation depth.

20. Can AI help finance teams move from reactive reporting to proactive insights?

Yes. AI enables proactive finance by continuously monitoring KPIs, automatically investigating changes, and alerting leadership before issues compound—eliminating the "wait until monthly close" reactive cycle.

Reactive, traditional finance runs on a monthly cycle: close, investigate variances, report to leadership, repeat. Information lags, so teams learn about issues 2-4 weeks after they start. The approach is analyst-driven, so everything waits for someone to ask or schedule a review. The focus is backward-looking, explaining what happened last month.

Proactive, AI-powered finance relies on continuous monitoring, with agents checking daily or weekly and catching issues as they emerge. Teams learn about meaningful changes within days, not weeks. The approach is agent-driven: alerts arrive without being requested and investigations happen automatically. The focus is forward-looking, detecting trends that predict future problems.

A cost overrun in Sales & Marketing illustrates the difference. In the reactive approach, unplanned hiring happens in Weeks 1-4 and spending accelerates. Month-end close completes in Week 5. FP&A investigates variances and discovers S&M is 15% over budget in Week 6, then presents findings to the CFO in Week 7. The CFO decides to slow hiring in Week 8. The result is 8 weeks of overspend before course-correction.

In the proactive approach, unplanned hiring starts in Week 1. In Week 2, an AI agent detects that the S&M trajectory indicates a 12% overrun, investigates, finds +5 FTEs vs. plan with an estimated annual impact of +$875K, and alerts the FP&A director and CFO. In Week 3, the CFO reviews hiring approvals and adjusts the pace. The result is 2 weeks of overspend, with 6 weeks of escalation prevented.

Four things enable the shift: agents monitor continuously rather than monthly, data refreshes daily rather than only at close, investigation is automatic rather than manual, and alerts are prioritized rather than overwhelming.

21. How do AI agents complement existing finance dashboards rather than replace them?

AI agents and dashboards serve different purposes—agents detect and investigate, dashboards visualize and report. Most finance teams keep both, with agents handling proactive monitoring and dashboards handling standardized presentation.

Dashboards remain valuable for executive scorecards, where CFOs and boards expect familiar KPI displays in consistent formats; for monthly reporting packages, where standardized visualizations communicate performance to stakeholders; and for trend monitoring, where time-series charts show patterns that agents might not flag as anomalies.

Agents add capability in three areas. Continuous monitoring: dashboards require someone to look, while agents watch 24/7. Investigation automation: dashboards show what happened, while agents explain why. Scale: humans can monitor 10-15 dashboards effectively, while agents can monitor hundreds of metrics simultaneously.

The integration pattern involves agents monitoring the same metrics displayed on dashboards but with investigation capability. When an agent detects a variance, the alert links to the relevant dashboard for visual context. Dashboard users can click through to conversational analytics for deeper investigation. The semantic layer ensures dashboard numbers match agent findings match conversational query results.

What to avoid is rebuilding dashboards as agent alerts. If your CFO reviews a weekly EBITDA dashboard, don't replace it with an agent alert—add agent monitoring alongside the dashboard to catch issues between reviews. The dashboard remains the presentation layer; agents become the detection layer.

22. How deep do AI agents investigate before alerting on a variance?

Agent investigation depth is configurable—from surface-level threshold alerts to multi-level root cause decomposition. The right depth depends on alert priority and the action you'll take.

Surface-level investigation just confirms the variance exists and passes basic validation. Agent detects OpEx exceeded budget by 10%, validates this isn't a data timing issue, and alerts: "OpEx 10% over budget." Use this for low-priority alerts where you'll investigate manually if needed.

One-level decomposition breaks the variance into immediate components. Agent detects OpEx exceeded budget, decomposes by department, and alerts: "OpEx 10% over budget, driven by Sales & Marketing (65%) and R&D (35%)." Use this for medium-priority alerts where knowing the department is sufficient to route the issue.

Full root cause investigation follows the variance to underlying drivers. Agent detects OpEx exceeded budget, decomposes by department, then by account category within the largest department, then by specific driver. Alert: "OpEx 10% over budget ($1.2M), driven by Sales & Marketing headcount (65% of variance, +8 FTEs vs. plan in Enterprise sales). Estimated annual impact if trend continues: +$2.8M." Use this for high-priority alerts where leadership needs actionable explanation immediately.

Configuration recommendations include full root cause for variances above $500K or affecting board-level metrics, one-level decomposition for variances between $100K and $500K, and surface-level for smaller variances that may not warrant investigation time.
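Expressed as a rule, the tiering above might look like the following sketch. The function name and the `board_level_metric` flag are assumptions for illustration. The dollar cutoffs are the ones recommended above, with a variance of exactly $500K treated as one-level.

```python
def investigation_depth(variance_usd: float, board_level_metric: bool = False) -> str:
    """Map a variance to an investigation depth using the recommended tiers."""
    size = abs(variance_usd)
    if board_level_metric or size > 500_000:
        return "root_cause"   # full decomposition down to the driver
    if size >= 100_000:
        return "one_level"    # break down by department only
    return "surface"          # flag the variance and validate the data


for amount, board in [(1_200_000, False), (250_000, False), (40_000, False), (40_000, True)]:
    print(amount, board, investigation_depth(amount, board))
```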

Investigation depth affects alert latency minimally—full root cause adds seconds, not minutes—so most teams configure full investigation for any alert worth sending.

23. How do AI agents handle data from multiple planning scenarios or forecast versions?

Finance teams maintain multiple planning scenarios—original budget, latest forecast, optimistic case, conservative case—and agents need clear configuration for which versions to monitor and compare.

Primary monitoring configuration specifies which plan version agents compare against actuals. Most teams configure two comparisons: actual versus latest forecast for operational alerts that catch current-period variances, and actual versus original budget for annual tracking that measures performance against approved targets.

Multi-version alerting can compare actuals against multiple scenarios simultaneously. Agent detects: "Q3 revenue $45M is 5% below latest forecast ($47.3M) and 8% below original budget ($48.9M)." This provides context on whether variance is worsening or improving relative to different baselines.

Scenario-specific thresholds allow different alert sensitivity by version. Latest forecast comparison might use 5% threshold since forecasts should be more accurate. Original budget comparison might use 10% threshold since annual budgets have more inherent uncertainty.
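A multi-version comparison with per-version thresholds can be sketched as below. The function and dictionary keys are hypothetical. The revenue figures and the 5% and 10% thresholds are the ones used in the examples above.

```python
def compare_to_versions(actual, baselines, thresholds):
    """Compare one actual value against several plan versions.

    baselines: plan version -> planned value
    thresholds: plan version -> alert threshold as a fraction (0.05 means 5%)
    """
    findings = []
    for version, planned in baselines.items():
        gap_pct = (actual - planned) / planned
        findings.append({
            "version": version,
            "planned": planned,
            "gap_pct": gap_pct,
            "breach": abs(gap_pct) >= thresholds[version],
        })
    return findings


# Q3 revenue example, in $M.
baselines = {"latest_forecast": 47.3, "original_budget": 48.9}
thresholds = {"latest_forecast": 0.05, "original_budget": 0.10}

for f in compare_to_versions(45.0, baselines, thresholds):
    print(f"{f['version']}: {f['gap_pct']:+.1%} vs. ${f['planned']}M, breach={f['breach']}")
```

Computed without rounding, the gaps are about -4.9% and -8.0%, so the forecast comparison sits just under a strict 5% threshold. Whether to compare rounded or unrounded percentages is a small configuration choice worth making explicit.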

Version switching during planning cycles requires attention. When a new forecast version becomes "official," agents need reconfiguration to monitor against the new baseline. Best practice is documenting which version is active for monitoring and establishing a change process when versions update.

Handling version conflicts means agents should alert if different plan versions imply contradictory conclusions. If latest forecast shows 5% growth but original budget assumed 10% growth, agents can flag the forecast methodology drift for review.

Part 3: Platform Evaluation & Comparison

24. What distinguishes purpose-built finance agents from generic AI monitoring tools?

Purpose-built finance agents understand financial analysis patterns natively—generic monitoring tools require extensive configuration to replicate basic finance workflows.

Variance decomposition logic is pre-built in finance agents. When detecting a budget overrun, finance agents automatically decompose by department, then by account category, then by driver—following the investigation pattern every FP&A analyst uses. Generic tools require you to configure this decomposition hierarchy manually for every metric.

Financial terminology is understood natively. Finance agents know that "EBITDA variance" requires comparing actual EBITDA to plan EBITDA, that P/V/M means price-volume-mix decomposition, and that "headcount variance" means actual FTEs minus planned FTEs. Generic tools require you to define these calculations explicitly.

Explanation generation follows finance narrative structure. Finance agents produce alerts like "EBITDA declined 5% ($2.5M), driven primarily by higher S&M OpEx (60% contribution), concentrated in headcount additions (+8 FTEs)." This follows the headline-driver-detail structure finance teams expect. Generic tools produce raw anomaly flags without structured explanation.

Integration patterns match finance architecture. Finance agents have pre-built connectors for ERP systems, planning tools, and GL structures common in finance. Generic tools require custom integration development for each source system.

The practical impact is deployment timeline. Finance agents deploy in 8-12 weeks because the financial logic is pre-built. Generic tools require 6-12 months to build equivalent capability from components—assuming you have engineering resources to dedicate.

25. How do AI agents use conversational analytics capabilities during investigation?

AI agents leverage conversational analytics engines internally during automated investigation—the same natural language processing that powers user queries also powers agent root cause analysis.

When an agent detects a variance, it internally "asks" the conversational engine a series of investigative questions: "What are the components of this variance?" "Which component has the largest contribution?" "What drives that component?" The conversational engine processes these queries against the semantic layer and returns structured results the agent assembles into findings.

This shared architecture means agent explanations use the same business terminology as conversational query results. If you ask "Why is EBITDA below forecast?" conversationally, you get the same driver decomposition that an agent would include in a proactive alert. There's no translation gap between self-service exploration and automated monitoring.

The practical benefit is investigation continuity. When you receive an agent alert about S&M being over budget, you can immediately ask conversational follow-up questions using the same terms: "Show me S&M headcount by month" or "Which roles were added in Q3?" The agent's explanation and your exploration query the same governed data model.

This also means conversational analytics improvements automatically enhance agent capability. When the semantic layer adds new business terms or calculation logic, both conversational queries and agent investigations gain access immediately—no separate agent retraining required.

26. Which AI agents are best for financial monitoring and alerting?

The best AI agents for financial monitoring combine three things: autonomous operation (working without prompting), intelligent investigation (explaining root causes automatically), and prioritized alerting (surfacing only high-impact issues).

Autonomous monitoring is the first required capability. Agents run continuously without human intervention, monitor hundreds of KPIs simultaneously (including budget variances, margin changes, and forecast accuracy), detect anomalies based on thresholds and statistical patterns, and remove the need for manual dashboard checking.

Automatic investigation means that when a variance is detected, the agent investigates the root cause immediately, decomposes the change across all relevant dimensions (product, region, cost center, and timing), ranks drivers by contribution, and tests data quality to determine whether the variance is real or a data error.

Intelligent prioritization ranks alerts by business impact rather than statistical significance alone, filters out noise from minor fluctuations and known one-time items, consolidates related issues (for example, not alerting separately on revenue and EBITDA when revenue drives the EBITDA change), and respects notification frequency limits to avoid alert fatigue.

Contextual alerting provides an explanation with the alert rather than just "budget exceeded," quantifies drivers (such as "60% from headcount, 40% from SaaS spend"), includes recommended actions based on findings, and links to the full analysis for drill-down.
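The consolidation idea, folding an alert on an upstream metric into the alert on the metric it drives, can be illustrated with a small sketch. The `drivers_of` dependency map and the function name are assumptions for the example. A real system would derive these relationships from its metric definitions.

```python
def consolidate(alerts, drivers_of):
    """Merge alerts on upstream metrics into the downstream alert they explain.

    alerts: list of metric names that breached a threshold
    drivers_of: metric -> list of upstream metrics that feed into it
    Returns metric -> list of breached upstream metrics folded into that alert.
    """
    consolidated, explained = {}, set()
    for metric in alerts:
        upstream = [m for m in drivers_of.get(metric, []) if m in alerts]
        if upstream:
            consolidated[metric] = upstream
            explained.update(upstream)
    for metric in alerts:
        if metric not in consolidated and metric not in explained:
            consolidated[metric] = []  # stand-alone alert, nothing folded in
    return consolidated


drivers_of = {"EBITDA": ["Revenue", "OpEx"]}  # hypothetical dependency map
print(consolidate(["Revenue", "EBITDA", "Cash"], drivers_of))
```

Here three breached metrics become two alerts: one on EBITDA that names Revenue as its breached driver, and one stand-alone alert on Cash.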

Tellius AI Agents is the leading solution, purpose-built for finance monitoring across budget, forecast, margin, and cash flow. It offers autonomous operation with configurable thresholds, automated root cause investigation using financial domain knowledge, prioritized alerts with plain-English explanations, and 8-12 week deployment.

Custom-built alternatives have significant limitations. Python plus Databricks can be used to build custom monitoring agents, but this requires 6-12 months of development by ML engineers, financial logic built from scratch, and an ongoing maintenance burden. Alerting plugins for Tableau and Power BI provide basic threshold alerts like "if metric exceeds X, notify," but they offer no automatic investigation or root cause analysis, require each alert to be configured manually, and are limited to metrics already built into dashboards.

27. What questions specifically test agentic capabilities during vendor evaluation?

Generic AI analytics demos often emphasize conversational features while glossing over agentic capabilities. These questions expose whether a platform has genuine autonomous monitoring or just threshold alerting.

Ask "Show me an agent investigating a variance it just detected." Watch whether the agent actually decomposes drivers and ranks contribution, or just flags the threshold breach. Real agentic investigation produces multi-level explanation; basic alerting just says "metric exceeded threshold."

Ask "How does the agent decide which variances to investigate versus ignore?" Look for intelligent prioritization by business impact, not just statistical significance. A $50K variance in a $500K cost center matters more than a $50K variance in a $10M cost center—does the agent know this?

Ask "What happens when multiple related metrics trigger simultaneously?" Look for alert consolidation. If revenue drops and EBITDA drops because revenue drove EBITDA, you should get one alert explaining the relationship—not two separate alerts requiring you to connect them.

Ask "Show me the agent's explanation for a variance it caught last month." Review actual production alerts, not demo scenarios. Look for quantified driver attribution, not vague descriptions. "Headcount +8 FTEs, 67% of variance" is useful; "headcount was higher than expected" is not.

Ask "How long until agents are monitoring our actual data?" Purpose-built platforms deploy agents in 8-12 weeks. If the answer involves months of custom development, you're evaluating a toolkit, not a solution.

Ask "Can we see the audit trail for an agent finding?" Every agent conclusion should trace to source data. If the vendor can't show you exactly how the agent reached its explanation, governance and compliance will be problematic.

28. What happens after an AI agent detects a budget variance?

When an AI agent detects a budget variance, it executes a complete investigation workflow before alerting anyone.

The agent first validates the variance is real by checking for data quality issues, late-arriving transactions, or timing differences that might explain the gap. False positives waste leadership attention, so validation happens automatically.

Next, the agent decomposes the variance across all relevant dimensions. For a budget overrun, this means breaking down by department, account category, time period, and any other configured hierarchies. The agent identifies which specific line items drove the variance and quantifies each contribution.

Then, the agent ranks drivers by impact and assembles a coherent explanation. Rather than listing every contributing factor, it highlights the 2-3 drivers that explain 80%+ of the variance. The explanation follows finance narrative structure: headline variance, primary drivers with quantified contribution, and context about whether this is one-time or trending.

Finally, the agent determines alert priority based on magnitude, trend direction, and business impact. A $50K variance in a $10M cost center gets different treatment than a $50K variance in a $500K cost center. High-priority alerts route immediately to configured recipients; lower-priority findings aggregate into daily or weekly digests.
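The point about relative size can be made concrete with a small scoring sketch. The cutoffs below (5%, 2%, 1%) and the priority labels are hypothetical values chosen for illustration, not recommended settings.

```python
def alert_priority(variance_usd: float, cost_center_budget: float, trending_worse: bool) -> str:
    """Rank an alert by variance relative to the cost center's budget."""
    relative = abs(variance_usd) / cost_center_budget
    if relative >= 0.05 or (relative >= 0.02 and trending_worse):
        return "high"    # route immediately to configured recipients
    if relative >= 0.01:
        return "medium"  # include in the daily digest
    return "low"         # include in the weekly digest


# The same $50K variance in two differently sized cost centers.
print(alert_priority(50_000, 500_000, trending_worse=False))     # 10% of budget
print(alert_priority(50_000, 10_000_000, trending_worse=False))  # 0.5% of budget
```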

The entire process—from detection to explained alert—happens in seconds. What would take an analyst 4-6 hours of investigation is complete before the alert reaches your inbox.

29. What technical infrastructure do AI agents require to monitor finance data?

AI agents require four infrastructure layers to monitor finance data effectively.

The data connectivity layer needs secure connections to source systems including ERP for actuals, planning tools for budget and forecast, and operational systems for driver data. Agents must access data on configured schedules without manual exports or file transfers. Pre-built connectors for NetSuite, SAP, Anaplan, Adaptive, and similar platforms accelerate deployment; custom connections add weeks to implementation.

The semantic layer translates technical database structures into business terms. Agents need to understand that GL account 4000-100 means "Product Revenue East Region" and that "headcount variance" means actual FTEs minus planned FTEs. Without this layer, agents can't generate business-meaningful explanations.

The computation layer runs variance decomposition, statistical analysis, and driver ranking algorithms. This requires sufficient processing capacity to test dozens or hundreds of driver combinations in seconds. Cloud-based platforms handle this automatically; on-premise deployments require infrastructure planning.

The alerting layer delivers findings to configured recipients through appropriate channels—Slack, email, Teams, or embedded in existing workflows. Alert routing logic must handle priority levels, escalation rules, and notification frequency limits to prevent alert fatigue.

Purpose-built platforms like Tellius provide all four layers integrated and pre-configured for finance use cases. Custom-built solutions require assembling each layer separately, typically adding 6-12 months to deployment timeline.

30. How do finance teams quantify the value of catching budget variances earlier?

Quantifying early detection value requires calculating variance compounding that proactive monitoring prevents.

Start with historical analysis of variances discovered late. Review the past 4-8 quarters and identify budget overruns or revenue shortfalls that weren't detected until monthly or quarterly review. For each, estimate when the variance actually started based on transaction-level data.

Calculate compounding cost by multiplying daily or weekly variance rate by the detection delay. A cost overrun running $50K per week that goes undetected for 6 weeks compounds to $300K. If proactive monitoring would have caught it at week 2, the prevented variance is $200K.

Aggregate across all late-detected variances for annual impact. Most finance teams find 3-5 significant variances per year that compounded due to late detection, with prevented variance value of $2-5M annually.

Factor in intervention success rate. Not every early-detected variance can be corrected—some reflect committed costs or market conditions. Apply a realistic intervention rate (typically 40-60%) to prevented variance calculations.
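The calculation described so far can be written out as a short sketch. The function name and parameters are hypothetical. The $50K per week, week 6, and week 2 figures come from the example above, and the 50% intervention rate is simply the midpoint of the 40-60% range.

```python
def prevented_variance(weekly_rate, late_detection_week, early_detection_week,
                       intervention_rate=1.0):
    """Variance avoided by detecting earlier, scaled by intervention success."""
    weeks_saved = max(late_detection_week - early_detection_week, 0)
    return weekly_rate * weeks_saved * intervention_rate


# $50K per week overrun, found at week 6 without agents vs. week 2 with agents.
gross = prevented_variance(50_000, 6, 2)
adjusted = prevented_variance(50_000, 6, 2, intervention_rate=0.5)
print(f"Gross prevented variance: ${gross:,.0f}")
print(f"After 50% intervention rate: ${adjusted:,.0f}")
```

The gross figure reproduces the $200K from the example. Applying the intervention rate halves it to $100K, which is the more defensible number to carry into an ROI model. Summing this over each late-detected variance from the historical review gives the annual estimate.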

Add soft benefits including faster decision-making when leadership has real-time awareness, reduced fire-drill intensity during close when issues are already explained, and improved forecast accuracy from earlier trend detection.

Conservative ROI models based only on prevented variance compounding typically show 3-6 month payback for agentic analytics investments.

31. How do we pilot agentic analytics before full deployment?

Piloting agentic analytics requires selecting the right scope, success criteria, and evaluation timeline.

For scope selection, choose 2-3 high-value monitoring use cases where late detection has historically caused problems. Good pilot candidates include cost centers with volatile spending patterns, products with margin sensitivity, and forecast accuracy for business-critical planning inputs. Avoid piloting with stable, predictable metrics—agents won't have meaningful anomalies to detect.

For success criteria definition, establish measurable outcomes before starting. Target metrics should include number of issues detected that would have been missed in manual review (target: 3+ during pilot), time from variance occurrence to leadership awareness (baseline vs. with agents), and false positive rate on alerts (target: under 20%).

For timeline planning, allow 6-8 weeks for meaningful evaluation. Weeks 1-2 cover data integration and agent configuration. Weeks 3-6 cover parallel operation where agents monitor alongside existing processes. Weeks 7-8 cover evaluation and decision.

During parallel operation, track every agent alert and validate against what manual processes would have caught. Document cases where agents detected issues earlier, cases where agents missed issues manual review caught, and alert quality including whether explanations were accurate and actionable.

Pilot success means agents detected meaningful issues that manual processes missed, with acceptable false positive rates and actionable explanations. Most organizations see clear pilot success within 4-6 weeks of agent activation.

32. How does Tellius handle the transition from reactive to proactive finance operations?

Tellius enables the reactive-to-proactive transition through a phased approach that builds on existing workflows rather than replacing them.

Phase one deploys conversational analytics alongside current processes. FP&A analysts continue their Excel-based variance analysis but use Tellius to answer ad-hoc questions instantly. This builds familiarity with the platform and validates data accuracy against existing methods. Typical duration is 4-6 weeks.

Phase two introduces agentic monitoring for high-priority metrics. Start with 5-10 KPIs where late detection has historically caused problems—budget variances in volatile cost centers, margin metrics for key products, forecast accuracy for critical planning inputs. Agents begin surfacing issues that would otherwise wait for monthly review. Typical duration is 4-6 weeks.

Phase three expands monitoring coverage and reduces manual processes. As confidence builds, add more metrics to agent monitoring while retiring redundant manual checks. FP&A capacity shifts from data wrangling to strategic analysis. Close cycles compress as automated investigation replaces Excel detective work.

Phase four achieves full proactive operation where agents provide continuous intelligence across all monitored metrics, conversational analytics handles ad-hoc exploration, and automated narratives feed executive reporting. Finance teams operate with real-time awareness rather than monthly snapshots.

The transition typically takes 4-6 months from initial deployment to full proactive operation, with measurable efficiency gains visible within the first 60 days.

"Tellius agents catch budget overruns 4-6 weeks before our quarterly reviews, giving us time to course-correct instead of just explaining what went wrong. The shift from reactive reporting to proactive intelligence has been transformative for our finance team."

Ready to Deploy Agentic Finance Intelligence?

Join leading finance teams using AI agents to monitor 24/7, investigate automatically, and catch budget risks 4-6 weeks earlier—shifting from reactive reporting to proactive intelligence.

Continue the journey

Dig into our latest content related to agentic finance intelligence

AI-Powered Variance Analysis for FP&A: Root Cause in Seconds, Not Days

AI-powered variance analysis automates the painful “why did we miss budget?” work that FP&A teams currently do in Excel over 3–5 days every close. Instead of testing price, volume, mix, region, and channel hypotheses one by one, an AI platform connects to ERP, planning tools, and warehouses, decomposes budget-vs-actual gaps across all drivers in parallel, and ranks the true root causes in seconds—often at dimensional intersections humans don’t check. A governed semantic layer keeps definitions for revenue, EBITDA, and variance consistent, while agentic analytics continuously monitors KPIs, triggers investigations when thresholds are breached, and generates executive-ready narratives and P/V/M breakdowns automatically. The blog explains what AI does not replace (finance judgment, strategic decisions, stakeholder storytelling), addresses common objections around messy data and trust, outlines an 8–12 week implementation path, and offers a checklist for evaluating variance analysis platforms—highlighting when this kind of AI is a strong fit for finance teams.

How AI Variance Analysis Transforms FP&A

Learn how AI-powered variance analysis is transforming how organizations uncover and act upon financial insights.

From Numbers to Narratives with Generative AI: Revolutionizing Financial Planning & Analysis

Conversational FP&A AI analytics promises to transform raw data into powerful financial insights and narratives that move the business forward, powered by generative AI.

The Challenge Every Pharma Commercial Leader Faces

You're drowning in data from IQVIA, claims databases, CRM systems, and competitive intelligence platforms—yet when executives ask "Why did our numbers change?" the answer is always: "We'll need a few days to investigate."

The Problem Isn't Lack of Data — It's Lack of Insights

Traditional dashboards show what happened, not why

Root cause analysis takes days or weeks of manual investigation

Non-technical users can't explore data without submitting IT requests

Insights arrive too late to prevent revenue loss

Multiple tools and data sources create fragmented views

Field teams spend 40% of their time preparing reports instead of selling

Agentic Analytics Changes Everything

Deploy AI agents that work 24/7—continuously monitoring your business, automatically investigating changes, and proactively alerting you to risks and opportunities. From weeks of manual analysis to seconds of AI-generated insights. From reactive reporting to agentic intelligence. From data silos to unified, self-operating analytics.

Introducing Pharma Intelligence

Unified decision intelligence across your entire commercial operation

Pharma teams depend on fast, accurate insights across HCP targeting, field execution, market access, contracting, brand growth, patient services, and incentive compensation. Pharma Intelligence brings all these capabilities together—powered by AI analytics and agentic workflows—to help organizations unify data, explain performance, detect risks, and drive next-best actions across every commercial function.

📊 Analytics Solutions for Every Commercial Function

Each hub addresses critical challenges with unified data, instant root cause analysis, and AI-powered insights. Choose your area to explore how we solve your specific pain points.

Field Force Sales Effectiveness

34 Questions · 6,000 words

Critical Capabilities:

HCP targeting & segmentation (AI-powered scoring)

Territory performance vs. potential (real-time)

Call quality & message effectiveness (NLP analysis)

Prescriber churn prediction (early warning alerts)

Pre-call planning (automated contextual briefs)

Brand Performance & KPIs

31 Questions · 6,500 words

Transform business reviews and root cause analysis with instant TRx/NBRx explanations, automated market share decomposition, and complete narrative generation. Uncover hidden opportunities worth millions while reducing prep time from 2 weeks to 2 days—eliminating the endless Excel work and manual investigation cycle.

Critical Capabilities:

TRx/NBRx anomaly detection & auto-explanation

Market share decomposition (instant driver analysis)

Root cause analysis (multi-variable, seconds not days)

Business review automation (narrative + visuals)

Competitive intelligence (continuous tracking)

Market Access Performance

30 Questions · 5,500 words

Track formulary changes, prior auth approval rates, and payer mix shifts with unified access intelligence—identifying exactly where coverage restrictions cost you scripts before they impact revenue. Get automated alerts on formulary movements, access barriers, and abandonment patterns with recommended interventions.

Critical Capabilities:

Formulary impact alerts (position change detection)

Prior authorization tracking (approval rate trends)

Payer mix dynamics (coverage shift analysis)

Abandonment prediction (access barrier identification)

Copay program ROI (effectiveness measurement)

Contracting & Payer Strategy

30 Questions · 5,500 words

Optimize contract performance with unified tracking of rebate effectiveness, volume commitments, and ROI across all payer agreements. Model negotiation scenarios, measure contract impact in real-time, and identify which agreements deliver value and which underperform—with recommended actions before renewals.

Critical Capabilities:

Performance vs. expectations (continuous tracking)

Rebate effectiveness (automated optimization)

Scenario modeling (what-if negotiations)

Gross-to-net decomposition (contract-level)

Value-based outcomes (automated tracking)

HCP Targeting & Segmentation

30 Questions · 5,500 words

Identify high-potential prescribers with AI-powered lookalike modeling, calculate physician lifetime value in real-time, and dynamically adjust segmentation as market conditions change. Find opportunities before competitors while optimizing targeting continuously—without manual deciling or static segment updates.

Critical Capabilities:

High-potential identification (AI-powered scoring)

Lookalike modeling (predictive targeting)

Prescriber LTV calculation (real-time updates)

Dynamic micro-segmentation (adaptive)

Acquisition optimization (prescriber journey)

⚡ Two Powerful Approaches to Analytics Transformation

Combine conversational interfaces for instant answers with agentic intelligence that works proactively—solving both immediate needs and long-term efficiency.

Conversational Analytics & AI Foundations

25 Questions · 4,500 words

The foundation: Ask questions in plain English and get instant answers. Conversational interfaces democratize data access, automated root cause analysis explains why metrics moved, and predictive models forecast future performance. Essential AI capabilities that transform static dashboards into interactive intelligence.

Foundational AI Capabilities:

Conversational analytics (natural language queries)

Automated root cause analysis (driver decomposition)

Predictive modeling (prescription trend forecasting)

Machine learning (segmentation & targeting)

Unified data integration (IQVIA, CRM, claims, digital)

Agentic Analytics: AI Agents That Work 24/7

25 Questions · 4,500 words

The evolution: AI agents work continuously 24/7—monitoring your business, automatically investigating anomalies, and proactively alerting you to risks and opportunities before you ask. Move from asking questions to receiving answers you didn't know you needed. This is what separates reactive analytics from agentic intelligence.

Agentic Capabilities:

24/7 monitoring (continuous surveillance)

Automatic anomaly investigation (self-initiated RCA)

Proactive risk alerts (before revenue impact)

Self-optimizing workflows (adaptive intelligence)

Automated business narratives (review generation)

Platform & Technology Questions

Understanding Pharma Intelligence and agentic analytics at the platform level

What is agentic analytics for pharmaceutical commercial operations?

Agentic analytics represents the evolution from reactive reporting to proactive intelligence. Unlike traditional analytics where users must ask questions and wait for answers, agentic analytics deploys AI agents that work continuously—monitoring your business 24/7, automatically investigating anomalies, and proactively alerting you to risks and opportunities before you ask. In pharmaceutical commercial operations, this means AI agents track hundreds of metrics across brand performance, field execution, market access, and HCP engagement simultaneously. When meaningful changes occur—like a TRx decline, formulary restriction, or prescriber disengagement—agents automatically perform root cause analysis and deliver specific, actionable recommendations with full context.
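The monitor, detect, and investigate loop described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not how any particular platform implements it: the z-score threshold, the function names (`is_anomalous`, `rank_drivers`, `check_metric`), and the regional TRx figures are all hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0):
    """Flag `latest` when it sits more than `z_threshold` sample standard
    deviations away from the mean of `history` (a simple z-score test)."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    sigma = stdev(history)
    if sigma == 0:
        return latest != history[-1]
    return abs(latest - mean(history)) / sigma > z_threshold


def rank_drivers(previous_by_segment, current_by_segment):
    """Rank segments by the absolute size of their change between periods."""
    segments = set(previous_by_segment) | set(current_by_segment)
    deltas = {
        s: current_by_segment.get(s, 0) - previous_by_segment.get(s, 0)
        for s in segments
    }
    return sorted(deltas.items(), key=lambda kv: abs(kv[1]), reverse=True)


def check_metric(name, history, latest, previous_by_segment, current_by_segment):
    """Return an alert with the top drivers attached, or None if nothing unusual."""
    if not is_anomalous(history, latest):
        return None
    return {
        "metric": name,
        "value": latest,
        "drivers": rank_drivers(previous_by_segment, current_by_segment)[:3],
    }


# Illustrative data only: weekly TRx totals and a made-up regional split.
alert = check_metric(
    name="TRx",
    history=[100, 102, 98, 101, 99],
    latest=79,
    previous_by_segment={"Northeast": 40, "South": 35, "West": 24},
    current_by_segment={"Northeast": 39, "South": 18, "West": 22},
)
print(alert)
```

In this example the drop to 79 is flagged and the South region surfaces as the largest driver. A production system would typically account for seasonality and examine many dimensions at once, but the shape is the same: the explanation travels with the alert.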

How does Pharma Intelligence unify data across commercial functions?

Pharma Intelligence automatically integrates data from IQVIA (prescription trends, market share), Symphony (claims data), CRM systems (Veeva, Salesforce for field activity), payer databases (formulary status, prior auth rates), competitive intelligence, and internal systems. The platform creates a unified semantic layer that harmonizes these disparate sources by resolving HCP identities, aligning geographies, and standardizing metrics. As a result, field teams, brand managers, market access leaders, and contracting teams all work from a single source of truth. When an AI agent detects a TRx decline, it can instantly correlate field activity, payer changes, competitive moves, and HCP prescribing patterns, which is not possible when data sits in silos.
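As a rough illustration of what resolving HCP identities across sources involves, the sketch below joins prescription rows and CRM call rows on a normalized 10-digit NPI (National Provider Identifier). Everything here is an assumption for illustration: the field names (`npi`, `hcp_npi`, `trx`), the `unify_hcp_activity` function, and the sample records are hypothetical, and real identity resolution usually also needs fuzzy matching on names and addresses.

```python
from collections import defaultdict


def normalize_npi(raw):
    """Keep only the digits; return None unless exactly 10 digits remain."""
    digits = "".join(ch for ch in str(raw) if ch.isdigit())
    return digits if len(digits) == 10 else None


def unify_hcp_activity(prescriptions, crm_calls):
    """Combine prescription and field-call rows into one profile per HCP."""
    profiles = defaultdict(lambda: {"trx": 0, "calls": 0})
    for row in prescriptions:
        npi = normalize_npi(row["npi"])
        if npi is not None:
            profiles[npi]["trx"] += row["trx"]
    for row in crm_calls:
        npi = normalize_npi(row["hcp_npi"])
        if npi is not None:
            profiles[npi]["calls"] += 1
    return dict(profiles)


# Illustrative records only: the same HCP appears with differently formatted IDs.
prescriptions = [
    {"npi": "1234567890", "trx": 12},
    {"npi": "123-456-7890", "trx": 3},
]
crm_calls = [
    {"hcp_npi": 1234567890},
    {"hcp_npi": "0987654321"},
]
print(unify_hcp_activity(prescriptions, crm_calls))
```

Once both sources share one key, questions such as "did call activity drop for the prescribers whose TRx fell?" become a simple lookup rather than a manual reconciliation exercise.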

What's the difference between AI analytics and agentic analytics?

AI analytics provides conversational interfaces and automated insights—you ask "Why did NBRx decline?" and get instant answers with root cause analysis. This is valuable and represents a major improvement over traditional BI. Agentic analytics goes further: AI agents work autonomously without human prompting. They continuously monitor your business, automatically detect meaningful changes, investigate root causes on their own, and proactively send you alerts with recommendations. Think of it as the difference between having a very smart assistant who answers your questions (AI analytics) versus having a team of analysts working 24/7 who investigate issues and bring you insights before you know to ask (agentic analytics). Most organizations need both layers working together.