PMSA 2025 Fall Symposium: Key Takeaways

The PMSA 2025 Fall Symposium in Boston wrapped up recently, and it revealed a pharmaceutical analytics industry at an inflection point—moving beyond AI experimentation toward production deployment, while grappling with fundamental questions about data foundations, trust, and the evolving role of human expertise.

This post rounds up the top ideas, common questions and aspirations, and key trends observed at the symposium.

What's Keeping Pharma Analytics Leaders Up at Night?

The most revealing poll of the symposium asked 50 pharmaceutical leaders about their analytics chatbot plans. The results show significant momentum: 46% are already exploring analytics chatbots, and 28% already use one. Nobody selected "not likely—doesn't fit our current needs."

The primary use case driving adoption is self-service analytics for business users, followed by real-time KPI tracking, custom dashboards and visualizations, trend analysis and forecasting, and automated report creation.

The Rise of Agentic AI

Agentic AI dominated conversations, from EY and Merck's long-range forecasting pilots to ZS and Bristol Myers Squibb's work on explainability. What's different about agents is context—context about data, how you do things, and how to embed security and privacy into processes.

The architecture requires multiple layers: an experience layer where users interact through natural language entry points, an orchestration layer that interprets requests and plans tasks, a context layer with business rules and KPIs, a data storage and management layer, and source systems like CRM and claims data.
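As a rough illustration of how these layers might hand off to one another, here is a minimal Python sketch. Every name in it (`ContextLayer`, `DataLayer`, `Orchestrator`, `experience_layer`, the `crm_calls` and `claims` tables, the KPI names) is hypothetical and not taken from any speaker's system, and the KPI matching is deliberately naive substring matching rather than real language understanding.

```python
from dataclasses import dataclass, field


@dataclass
class ContextLayer:
    """Hypothetical context layer: maps each KPI name to the table that feeds it."""
    kpi_definitions: dict[str, str] = field(default_factory=dict)

    def resolve(self, question: str) -> list[str]:
        # Naive matching: a KPI is relevant if its name appears in the question.
        q = question.lower()
        return [name for name in self.kpi_definitions if name.lower() in q]


@dataclass
class DataLayer:
    """Hypothetical stand-in for data storage plus source systems (CRM, claims)."""
    tables: dict[str, list[dict]] = field(default_factory=dict)

    def fetch(self, table: str) -> list[dict]:
        return self.tables.get(table, [])


@dataclass
class Orchestrator:
    """Interprets a request and plans small steps that can be checked one by one."""
    context: ContextLayer
    data: DataLayer

    def plan(self, question: str) -> list[str]:
        return [f"compute:{kpi}" for kpi in self.context.resolve(question)]

    def run(self, question: str) -> dict[str, float]:
        results = {}
        for step in self.plan(question):
            kpi = step.split(":", 1)[1]
            table = self.context.kpi_definitions[kpi]
            results[kpi] = sum(row["value"] for row in self.data.fetch(table))
        return results


def experience_layer(question: str, orchestrator: Orchestrator) -> str:
    """Natural-language entry point: question in, plain-text answer out."""
    results = orchestrator.run(question)
    if not results:
        return "No known KPI matched the question."
    return "; ".join(f"{kpi} = {value}" for kpi, value in sorted(results.items()))


context = ContextLayer({"total calls": "crm_calls", "total claims": "claims"})
data = DataLayer({"crm_calls": [{"value": 12}, {"value": 8}], "claims": [{"value": 100}]})
orchestrator = Orchestrator(context, data)
answer = experience_layer("What were total calls last month?", orchestrator)
print(answer)
```

Keeping each layer behind its own small interface means a planning step or a KPI definition can be tested in isolation.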

Alkermes is actively exploring an agentic framework to do reasoning and guide users to deeper analysis. The consensus: agents work best when tasks are broken into smaller, manageable components that can be debugged and validated independently.

But the panel discussion added a critical caveat: what AI can do depends on how you interact with it. Give the same prompt to different systems, or phrase a question differently, and you get vastly different answers. The quality of AI responses is fundamentally tied to what you feed it. One panelist noted that using the same record with different prompts can yield dramatically different results, and that some systems now generate improved prompts before answering questions, a form of dynamic prompt generation that significantly improves output quality.
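The dynamic prompt generation idea can be sketched as a two-step call: first ask the model to rewrite the question, then answer the rewritten version. The sketch below is an assumption about the general shape, not any panelist's implementation. `REWRITE_TEMPLATE`, `answer_with_rewrite`, and `fake_model` are hypothetical names, and `fake_model` is a stand-in so the example runs without an external service.

```python
from typing import Callable

# Hypothetical template asking the model to sharpen the user's question first.
REWRITE_TEMPLATE = (
    "Rewrite the following analytics question so it is specific and unambiguous. "
    "Return only the rewritten question.\n\nQuestion: {question}"
)


def answer_with_rewrite(question: str, model: Callable[[str], str]) -> str:
    """Two-step flow: ask the model for a better prompt, then answer that prompt."""
    improved_prompt = model(REWRITE_TEMPLATE.format(question=question))
    return model(improved_prompt)


# Stand-in model that records every prompt it receives.
calls: list[str] = []


def fake_model(prompt: str) -> str:
    calls.append(prompt)
    return f"<response {len(calls)}>"


final_answer = answer_with_rewrite("How is the brand doing?", fake_model)
print(len(calls), "model calls; final answer:", final_answer)
```

In a real system `model` would wrap whichever LLM client is in use; passing it in as a callable keeps the flow testable without one.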

The Silent Hero: Semantic Layer

The semantic layer emerged as the unsung infrastructure enabling AI at scale. Without a shared, governed business vocabulary, natural language interfaces and agents don't scale. The semantic layer was called out as the bridge from raw data to trusted answers.

Alkermes' approach includes a semantic model with custom instructions incorporating customized logic, logical tables specifying dimensions and metrics, relationships between tables, and verified queries that users can directly reference. This isn't just metadata—it's the business and domain knowledge brain that makes conversational analytics possible.
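The four ingredients described above (custom instructions, logical tables with dimensions and metrics, relationships, and verified queries) can be pictured as a small data structure. The following is a sketch under stated assumptions, not the actual Alkermes model or any vendor's file format. All class names, table names, columns, and the example SQL are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LogicalTable:
    name: str
    dimensions: list[str]
    metrics: dict[str, str]  # metric name -> SQL expression


@dataclass
class Relationship:
    left_table: str
    right_table: str
    join_key: str


@dataclass
class VerifiedQuery:
    question: str
    sql: str


@dataclass
class SemanticModel:
    custom_instructions: str
    tables: list[LogicalTable] = field(default_factory=list)
    relationships: list[Relationship] = field(default_factory=list)
    verified_queries: list[VerifiedQuery] = field(default_factory=list)

    def validate(self) -> list[str]:
        """List relationships that point at tables or keys the model does not define."""
        dims = {t.name: set(t.dimensions) for t in self.tables}
        errors = []
        for rel in self.relationships:
            for table in (rel.left_table, rel.right_table):
                if table not in dims:
                    errors.append(f"unknown table: {table}")
                elif rel.join_key not in dims[table]:
                    errors.append(f"{table} has no dimension {rel.join_key}")
        return errors

    def find_verified(self, question: str) -> Optional[str]:
        """Return the vetted SQL for a question, matched case-insensitively."""
        wanted = question.strip().lower()
        for vq in self.verified_queries:
            if vq.question.strip().lower() == wanted:
                return vq.sql
        return None


model = SemanticModel(
    custom_instructions="Report TRx weekly unless the user asks otherwise.",
    tables=[
        LogicalTable("prescriptions", ["hcp_id", "brand", "week"], {"trx": "SUM(trx_count)"}),
        LogicalTable("hcps", ["hcp_id", "specialty"], {}),
    ],
    relationships=[Relationship("prescriptions", "hcps", "hcp_id")],
    verified_queries=[
        VerifiedQuery(
            "What is total TRx by brand?",
            "SELECT brand, SUM(trx_count) AS trx FROM prescriptions GROUP BY brand",
        )
    ],
)
print(model.validate())
print(model.find_verified("what is total trx by brand?"))
```

Treating the model as governed, checkable data is what lets a chatbot reuse a vetted query instead of generating SQL from scratch each time.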

Novartis and Chryselys demonstrated a two-tower model approach: a semantic foundation tower handling trusted data, metadata, harmonization, and canonical schema; and an AI and automation tower with MCP gateways, orchestrators, and guardrails. The semantic layer sits between raw data infrastructure and business-ready AI applications.

Unlocking Unstructured Data's Hidden Value

Another buzzy topic at the conference was unlocking unstructured data. Decks, CRM notes, call transcripts: pharma's "dark data" is finally being productized. Speakers showed how curated corpora combined with RAG (Retrieval Augmented Generation) pipelines cut hallucinations and accelerate insight retrieval by 50-70%.
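For readers less familiar with RAG, the core loop is: retrieve the most relevant passages from a curated corpus, then hand only those passages to the model along with the question. The sketch below is a toy version under clear assumptions. It uses simple word overlap instead of the embedding search a production pipeline would typically use, it stops at building the prompt rather than calling a model, and the corpus entries and document ids are invented for illustration.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, passage: str) -> int:
    """Count query words that also appear in the passage."""
    q, p = Counter(tokenize(query)), Counter(tokenize(passage))
    return sum(min(count, p[token]) for token, count in q.items())


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return up to k passages with a non-zero score, best first."""
    scored = [(overlap_score(query, text), doc_id, text) for doc_id, text in corpus.items()]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(doc_id, text) for _, doc_id, text in scored[:k]]


def build_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    """Ground the model by restricting it to the retrieved sources."""
    sources = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite their ids. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


# Hypothetical curated corpus of unstructured notes.
corpus = {
    "crm_note_1": "HCP asked about dosing schedule and patient adherence support.",
    "call_transcript_7": "Discussion focused on formulary access and prior authorization delays.",
    "deck_3": "Launch deck covering brand positioning and key messages.",
}

query = "What are HCPs saying about prior authorization?"
passages = retrieve(query, corpus)
prompt = build_prompt(query, passages)
print(prompt)
```

Restricting the prompt to retrieved, citable sources is the mechanism by which a RAG pipeline aims to reduce hallucination; how much it helps depends on corpus quality.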

Novartis reported taking two years to go from dumping unstructured data into OpenAI to now having systems that can answer ad-hoc questions and act as strategic assistants. The journey took roughly six months total: three months to get up and running, plus two months of sandboxing.

The key insight: models are becoming commodities—data is the differentiation. A strong data foundation makes sense regardless of which model you use.

Measurable Business Impact

The symposium provided concrete evidence of AI's business value beyond the hype:

- Bristol Myers Squibb reported implementing strong next-best-action programs where digital and non-digital channels get matched, projecting a one to three percent uplift in incremental sales, which for major brands translates to hundreds of millions of dollars. They also achieved an approximately 20% reduction in operational burden by automating end-to-end processes.

- Several companies mentioned the potential of GenAI for content generation, such as for medical literature review. Building new content and deploying it to markets traditionally took two months; with AI and technology frameworks, companies are reducing this to days, building highly personalized content in short time periods while streamlining MLR approvals and deployment through next-best-action engines.

- Lundbeck's LSTM-based scenario simulation for HCP promotion demonstrated 21.8% effectiveness growth, 17.6% performance boost, and 7x efficiency improvement compared to traditional approaches.

- Trinity Health showcased using AI to expand beyond small HCP samples to create an "AI-enhanced HCP universe" that incorporates attitudinal and future-looking metrics from the broader real-world data universe, enabling more sophisticated performance tracking and predictive analytics.
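To put the one to three percent uplift figure reported above in perspective, a quick back-of-the-envelope calculation helps. The $10 billion annual sales figure below is an assumption chosen purely for illustration, not a number from the symposium.

```python
def incremental_sales(annual_sales_usd: int, uplift_pct: int) -> int:
    """Incremental sales in whole dollars for an integer percentage uplift."""
    return annual_sales_usd * uplift_pct // 100


# Assumption for illustration only: a brand with $10 billion in annual sales.
annual_sales_usd = 10_000_000_000

low = incremental_sales(annual_sales_usd, 1)
high = incremental_sales(annual_sales_usd, 3)
print(f"1% uplift: ${low:,}")   # 1% uplift: $100,000,000
print(f"3% uplift: ${high:,}")  # 3% uplift: $300,000,000
```

At that scale, even the low end of the range lands in the hundreds of millions.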

The Contrarian Voice: When Not to Use AI

Amid the enthusiasm, several contrarian perspectives emerged that deserve attention:

Fine-tuning skepticism: One practitioner stated, "We believe fine tuning is a great actor, but context engineering is more important. We haven't used any fine-tuning in any of our applications in production since it takes so long and involves lots of trial and error." This challenges the common assumption that fine-tuning is necessary for production AI.

The human bottleneck will remain: During the panel discussion, one participant argued that humans will stay in the loop for most tasks, especially when dealing with nuanced business questions where context, regulations, and ethical considerations matter. AI can extend human capabilities but can't replace judgment in complex decision-making.

The panel noted that AI's current limitations require humans to provide extensive context. Unlike a human colleague who builds understanding over months of collaboration, AI requires the same contextual information repeated with every query. However, one panelist countered that even if providing context takes an hour for complex problems like patient journey analysis, this still saves seven weeks compared to traditional consultant-led approaches.

Not everything needs AI: The symposium featured multiple presentations on traditional LSTM models, Markov chains, and classical machine learning approaches that deliver strong results without generative AI complexity. One question raised was why Transformer models were not used instead of LSTMs for certain applications, highlighting ongoing debates about appropriate model selection.

Speed trumps perfection: One panelist argued the real issue is speed—it depends on your capability. Go outside if external partners can deliver faster; build in-house if you can move faster internally. The goal is to fail early and recognize quickly what works.

Build vs. Buy: The Real Question Is "What to Own?"

The build-versus-buy debate has moved past the simplistic "all-in or all-out" framing. The more revealing question is: what capabilities must you own?

Panelists who'd seen both extremes—companies that outsourced too much and lost strategic control, and companies that built everything and couldn't keep pace—converged on a layered approach:

Own your semantic layer and business logic. This is your competitive moat. Your understanding of therapeutic areas, brand strategies, HCP segmentation, and commercial processes is IP that shouldn't live entirely in a vendor's black box. Alkermes' semantic model with custom instructions, logical tables, and verified queries exemplifies this ownership.

Leverage accelerators for commoditized capabilities. Marketing mix models, attribution engines, and basic analytics infrastructure have become table stakes. Starting from scratch makes little sense when proven solutions exist.

Co-build at the boundary. The emerging pattern is vendor partnerships that allow you to retain core capabilities in-house while leveraging external expertise for scaling and specialized functionality. This wasn't possible a few years ago when vendors offered only all-or-nothing platforms.

The Alkermes chatbot story is instructive. They built a Snowflake Cortex Analyst + Streamlit proof of concept internally with minimal investment ($100-200) to understand requirements and validate the approach. As they consider next steps toward scaling, they remain open to either continuing to build or partnering, flipping the conversation to "build to understand, then buy to scale."

Looking Ahead

When asked what excites them most about AI's role in pharma over the next three to five years, panelists revealed telling priorities:

- Leadership recognition of AI as competitive advantage topped the list. Organizations implementing AI enterprise-wide—not pilot by pilot, but with comprehensive frameworks across all operations—will gain significant advantages.

- The proliferation of agents that don't talk to each other emerged as a concern. Different functions creating their own agents that look at similar data but produce conflicting insights creates organizational risk. Harmonization will be critical.

- Empowerment through better understanding of AI's limitations and constraints was highlighted. As people become more comfortable with what AI can and cannot do, better governance frameworks will emerge to harness power appropriately.

- The power of agents to "do work"—not just provide information but take actions like booking hotels, reading websites, and executing tasks—represents a significant shift. This capability is both exciting and "scary" because it can replace certain functions.

The Bottom Line

The PMSA 2025 Fall Symposium revealed an industry moving decisively from experimentation to implementation, but with recognition of the challenges ahead. To meet the demands of the rapidly evolving pharma industry, pharma/biotech firms should focus on:

- Data foundation first: No amount of sophisticated AI can compensate for poor data quality, governance, or semantic modeling

- Start small, prove value: Focus on 2-3 high-impact use cases with measurable ROI before scaling

- Embrace the semantic layer: Invest in business-governed vocabularies and metadata as the bridge between raw data and AI applications

- Human-AI collaboration: Design for human oversight and continuous feedback loops rather than full automation

- Context engineering over fine-tuning: Focus on providing rich context through RAG and semantic layers before investing in model customization

- Speed and agility: Fail fast, learn quickly, and iterate based on real user feedback

The organizations that balance these principles—combining technical sophistication with practical pragmatism—will define the next era of pharmaceutical commercial impact.


Life Science Analytics: How AI is Driving Transformative Change for Pharma

Implementing AI-driven analytics is a catalyst for transformative change in the pharmaceutical and life sciences world.

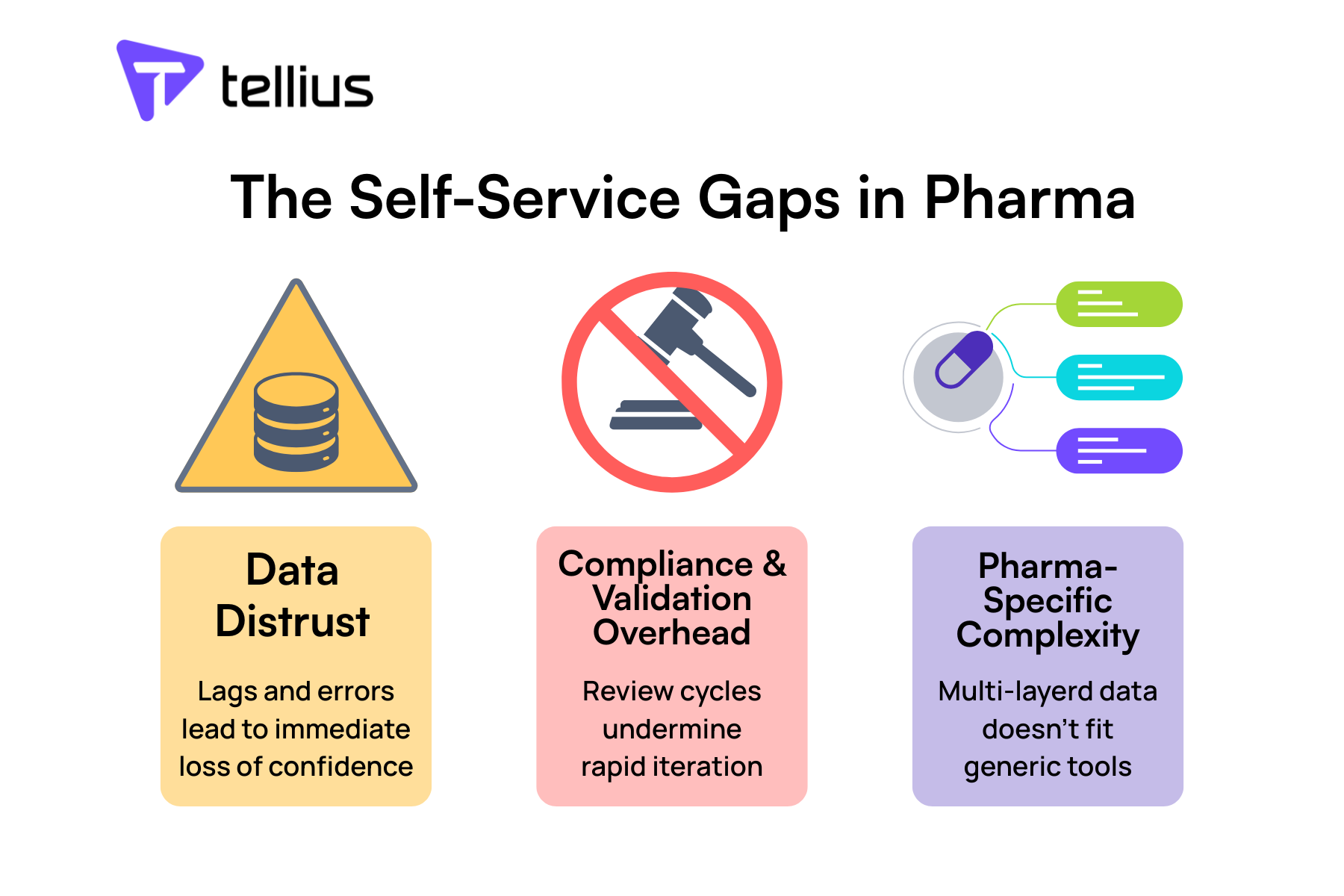

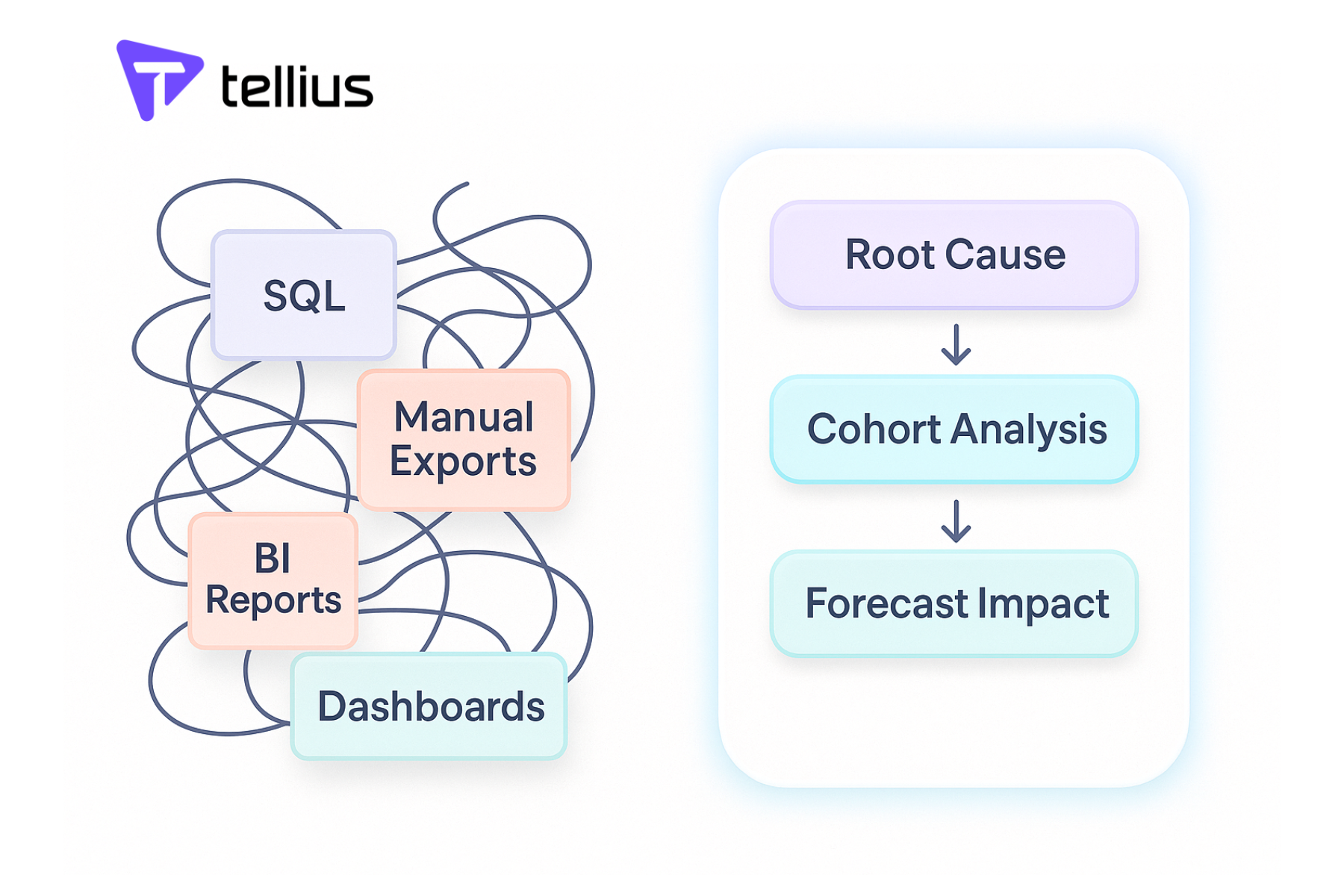

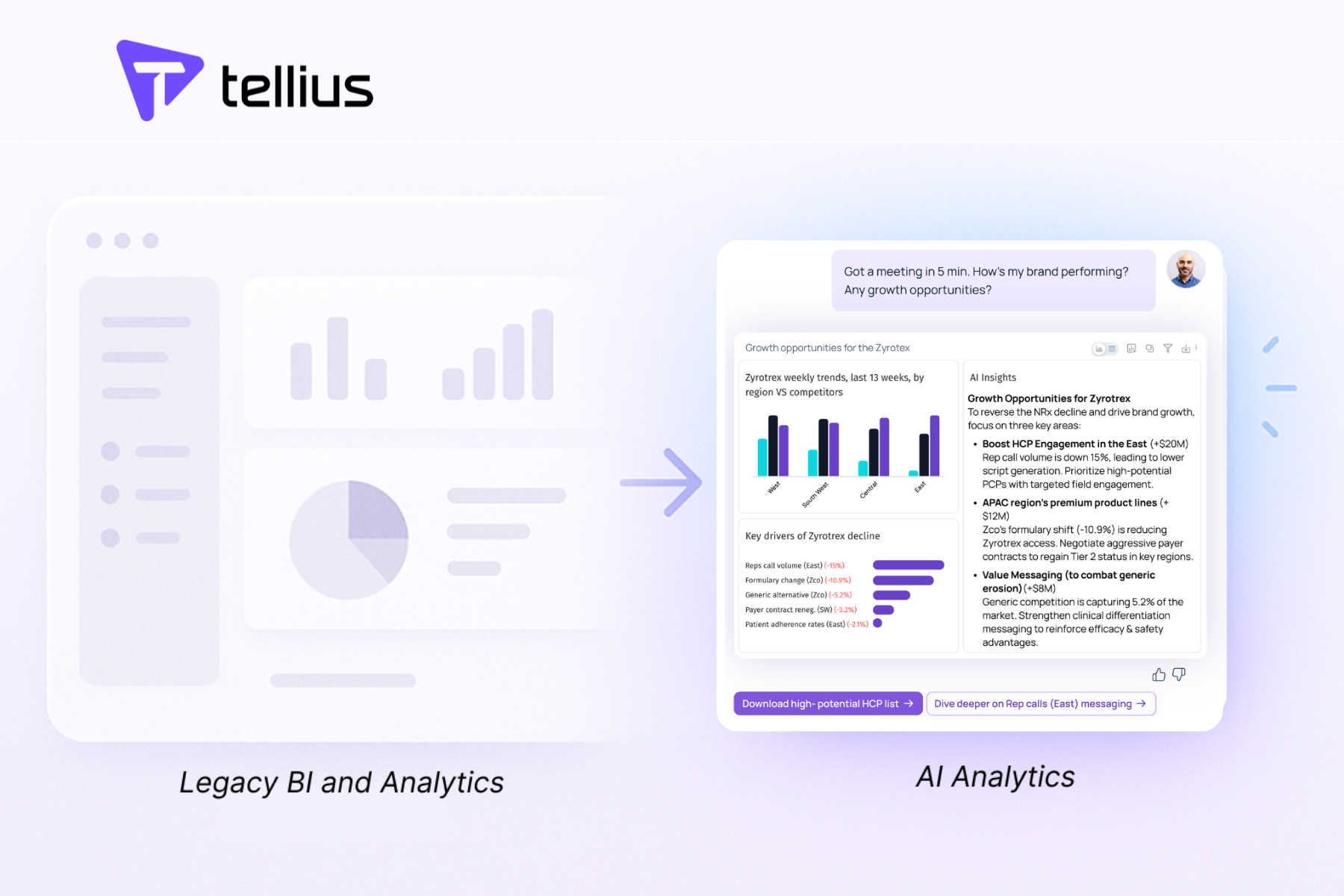

Why Self-Service Analytics in Pharma Has Failed—And What It Takes to Fix It

The path from dashboard sprawl to true self-service isn't just about better technology—it's about unlocking your team's ability to act on insights in real-time. While traditional BI tools force pharma teams into endless workarounds, AI-powered platforms like Tellius are purpose-built for the complexity, compliance requirements, and speed demands of commercial pharma.

Why Your Self-Service Analytics Strategy Will Fail Without AI Agents

AI agents are not a “nice-to-have”; they’re a must-have if you want to break free from analysis paralysis, truly democratize data-driven decision-making, and scale your analytics across hundreds (or thousands) of business users. The real promise of data democratization is not just in enabling more people to ask questions, but in enabling those questions to be answered comprehensively and autonomously.

Why Agentic Intelligence Is the Future of AI Analytics in 2025 and Beyond

AI analytics has evolved from dashboards and copilot tools to agentic intelligence Business users now get autonomous recommendations, not just answers Platforms like Tellius combine conversational AI, agent orchestration, and memory The result: faster insights, better decisions, and less dependency on data teams. In 2025, AI analytics isn’t a feature. It’s a fundamental shift in how decisions get made.