Why Your Semantic Layer Isn't Ready for AI Agents (And What to Do About It)


You probably think your semantic layer is ready for AI agents. You have metric definitions in dbt. You've got LookML models in Looker or Semantic Views in Snowflake. Your data team spent months standardizing "revenue" and "active customer" across the organization.

Here's the problem: you built a semantic layer for queries. An agent-ready semantic layer goes beyond query governance to provide persistent business context, domain-specific knowledge, investigation planning rules, and orchestration logic that AI agents consume autonomously — capabilities that today's query-focused semantic layers were never designed to deliver. AI agents don't just query — they investigate, remember, plan, and act. And the architectural gap between governing a SQL query and governing an autonomous analytical agent is much wider than most enterprises realize.

An enterprise semantic layer is a standardized framework that maps technical data structures to business concepts (metric definitions, calculation logic, hierarchies, and access controls) so that every user and every tool gets consistent answers from the same data. As AI moves into enterprise analytics, the semantic layer has become essential infrastructure, a status Gartner recognized in its 2025 Hype Cycle for BI & Analytics. But most implementations were designed for a world where humans ask questions and interpret results. AI agents change that equation fundamentally, and the five capabilities they need from the semantic layer expose gaps that metric definitions alone can't fill.

The Semantic Layer Was Built for a Different Era

For the past decade, enterprises treated semantic inconsistency as a BI maintenance problem. Different definitions of "revenue" across Tableau and Power BI? Write some documentation. Metrics drifting between dashboards? Schedule quarterly reconciliation meetings.

This approach worked when humans were the primary consumers of analytics. A finance director looking at a dashboard could spot when a number looked wrong, apply business context, and ask a follow-up question. Human judgment acted as the error-correction layer.

Generative AI broke this model. Large language models don't have business context. They don't know that your organization calculates "net revenue" differently from the textbook definition. They can't tell that the "customer count" in table A uses different inclusion criteria than the one in table B. Without a semantic layer, two people asking the same AI assistant the same question can get different answers, and neither knows which one is wrong.

The industry responded. dbt open-sourced MetricFlow. Snowflake formalized Semantic Views. Databricks shipped Metric Views. Salesforce launched Tableau Semantics. The Open Semantic Interchange (OSI) initiative brought competitors together around portability standards. By the end of 2025, most serious data teams had some form of semantic layer in place.

The problem is that these semantic layers solve yesterday's problem: query consistency. They ensure that when a user or an LLM generates SQL, the SQL uses the right metric definitions. That's genuinely valuable — and it's table stakes. But AI agents aren't just generating SQL.

What AI Agents Actually Need (That Your Semantic Layer Doesn't Provide)


An AI agent that monitors your KPIs, investigates anomalies, and delivers finished insights needs five capabilities from the semantic layer. Most enterprise implementations deliver one, partially deliver a second, and completely miss the other three.

1. Query Governance (What You Already Have)

This is the capability every semantic layer delivers: consistent metric definitions, standardized calculations, enforced naming conventions, and access controls. When an agent generates a query, it uses "net revenue" the same way every user does.

dbt MetricFlow does this through YAML-based metric definitions in version control. Snowflake does it through Semantic Views with SQL-based metric specifications. Looker does it through LookML. They all solve the same problem: define once, use everywhere.
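To make "define once, use everywhere" concrete, here is a minimal Python sketch of the idea. It is not the dbt, Snowflake, or Looker API: the `Metric` class, the `METRICS` registry, the `compile_query` helper, and the net revenue formula are all hypothetical, chosen only to show a single governed definition feeding every generated query.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    expression: str  # SQL aggregate expression (illustrative formula)
    table: str


# Hypothetical registry: the single source of truth for metric definitions.
METRICS = {
    "net_revenue": Metric(
        name="net_revenue",
        expression="SUM(gross_amount - discounts - refunds)",
        table="orders",
    ),
}


def compile_query(metric_name: str, group_by: str) -> str:
    """Render SQL for a governed metric; unknown metrics raise KeyError."""
    metric = METRICS[metric_name]
    return (
        f"SELECT {group_by}, {metric.expression} AS {metric.name} "
        f"FROM {metric.table} GROUP BY {group_by}"
    )


# Every caller, human or agent, gets the same calculation for "net_revenue".
sql = compile_query("net_revenue", "region")
print(sql)
```

Because callers can only reference metrics by name, a query for an undefined metric fails loudly instead of silently inventing its own formula.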

If this is all your semantic layer does, it's governing the retrieval step of what agents do — roughly 20% of the analytical workflow. The other 80% is ungoverned.

2. Business Context and Domain Knowledge (What Most Semantic Layers Lack)

Query governance tells the agent how to calculate a metric. Business context tells the agent what the metric means in your organization's specific reality.

When a pharmaceutical commercial team's AI agent detects that NBRx dropped 15% in the Southeast, it needs to know more than the formula for NBRx. It needs to know that the Southeast territory was recently restructured, that a competitor launched a biosimilar in that region last month, that Q1 always shows seasonal softness due to benefit resets, and that the brand team considers anything above a 10% decline to be an escalation trigger.

None of this lives in a dbt YAML file or a Snowflake Semantic View. It's domain knowledge — the accumulated understanding of how your business actually works — and most semantic layers have no mechanism to store, version, or serve it.

Without business context, agents produce technically correct but practically useless analysis. "NBRx declined 15% in the Southeast" is accurate. "NBRx declined 15% in the Southeast, but 8 points of that are attributable to the territory realignment effective January 1st and 4 points reflect the expected seasonal benefit-reset pattern — leaving a 3-point unexplained decline that warrants investigation into the competitive biosimilar launch" is useful. The difference is business context — the kind of domain-specific knowledge that agentic analytics platforms embed natively.
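The arithmetic behind that second statement is simple once the context is available as data. The sketch below is illustrative only: the `KnownDriver` class and `unexplained_decline` function are hypothetical names, and the point values are the ones from the NBRx example above.

```python
from dataclasses import dataclass


@dataclass
class KnownDriver:
    label: str
    points: float  # percentage points of the decline this driver explains


def unexplained_decline(total_decline: float, drivers: list[KnownDriver]) -> float:
    """Subtract the points explained by known business context."""
    explained = sum(driver.points for driver in drivers)
    return total_decline - explained


drivers = [
    KnownDriver("territory realignment effective January 1st", 8.0),
    KnownDriver("seasonal benefit-reset pattern", 4.0),
]

# 15-point NBRx decline in the Southeast, minus what context already explains.
residual = unexplained_decline(15.0, drivers)
print(f"Unexplained decline: {residual:.0f} points")
```

The residual, not the headline number, is what the agent should spend its investigation budget on.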


3. Persistent Memory Across Investigations (What No Standalone Semantic Layer Provides)

Today's semantic layers are stateless. Every query starts from zero. The agent that investigated a TRx anomaly on Monday has no memory of that investigation when a related anomaly surfaces on Thursday.

This matters because analytical investigations are cumulative. A finding from last week's payer access analysis should inform this week's root cause investigation when brand performance dips. An anomaly pattern detected across three consecutive months should trigger a different response than a first-time occurrence. The territory restructuring that explained last quarter's numbers is still relevant context for this quarter's analysis.

Human analysts carry this context in their heads. They remember what they investigated before, what patterns they've seen, and what explanations they've already validated or ruled out. They build institutional knowledge over time.

Stateless semantic layers force agents to rediscover context every single time. Each investigation starts from scratch, ignoring everything the organization has already learned. It's the analytical equivalent of giving your best analyst amnesia every morning.

An agent-ready semantic layer needs a persistent knowledge store — not just metric definitions, but accumulated investigative context: what anomalies have been flagged, what root causes have been identified, what business events are in play, and what patterns are emerging over time.
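As a rough sketch of what such a store could look like, the example below uses Python's built-in `sqlite3` module. The table layout, the function names, and the sample date are assumptions made for illustration; a production knowledge store would need richer schemas, access controls, and retrieval logic.

```python
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a findings store; pass a file path to persist it."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS findings (
               metric TEXT NOT NULL,
               segment TEXT NOT NULL,
               observed_on TEXT NOT NULL,
               root_cause TEXT NOT NULL
           )"""
    )
    return conn


def record_finding(conn, metric, segment, observed_on, root_cause):
    """Save a validated root cause so later investigations can reuse it."""
    conn.execute(
        "INSERT INTO findings VALUES (?, ?, ?, ?)",
        (metric, segment, observed_on, root_cause),
    )
    conn.commit()


def prior_findings(conn, metric, segment):
    """Return earlier findings for the same metric and segment, oldest first."""
    rows = conn.execute(
        "SELECT observed_on, root_cause FROM findings "
        "WHERE metric = ? AND segment = ? ORDER BY observed_on",
        (metric, segment),
    )
    return rows.fetchall()


store = open_store()
# Monday's investigation (hypothetical date and finding).
record_finding(store, "TRx", "Southeast", "2025-01-06", "territory restructuring")
# Thursday's investigation starts from what is already known.
history = prior_findings(store, "TRx", "Southeast")
print(history)
```

The design choice that matters is the lookup before the investigation: the agent checks what the organization has already learned before it decomposes anything.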

4. Investigation Planning Rules (What Only Purpose-Built Platforms Provide)

When an agent detects that revenue dropped, it needs to decide how to investigate. Which dimensions should it decompose first? What's the right order of operations for root cause analysis? When should it drill into geography versus product versus customer segment? What constitutes a "significant" driver versus noise?

These investigation rules are domain-specific. A pharmaceutical commercial analytics investigation follows a fundamentally different playbook than an FP&A variance analysis or a CPG trade promotion review. The relevant dimensions, the drill-down paths, the significance thresholds, and the escalation criteria are all different.

Most semantic layers define what to calculate but not how to investigate. They can tell the agent that "market share" equals TRx divided by total market TRx. They can't tell the agent that when market share drops, the first decomposition should be by payer segment, then by territory, then by HCP decile — and that a 2-point shift in a top-3 payer warrants an immediate alert while a 2-point shift across the long tail does not.

Without investigation planning rules, agents either follow generic decomposition paths (missing the domain-specific drivers that actually matter) or require human guidance at every step (which defeats the purpose of autonomous agents).
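One way to picture investigation planning rules is as declarative data that an agent reads before it starts decomposing. The sketch below encodes the market share example from this section; the `Playbook` structure, its field names, and the `should_alert` helper are hypothetical, not a description of any vendor's format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Playbook:
    metric: str
    decomposition_order: tuple[str, ...]  # dimensions to drill into, in order
    alert_threshold_points: float         # minimum shift that counts as significant
    top_payer_rank: int                   # payers ranked at or above this trigger alerts


MARKET_SHARE_PLAYBOOK = Playbook(
    metric="market_share",
    decomposition_order=("payer_segment", "territory", "hcp_decile"),
    alert_threshold_points=2.0,
    top_payer_rank=3,
)


def should_alert(playbook: Playbook, payer_rank: int, shift_points: float) -> bool:
    """Alert only when a large enough shift hits a top-ranked payer."""
    return (
        payer_rank <= playbook.top_payer_rank
        and abs(shift_points) >= playbook.alert_threshold_points
    )


# A 2-point drop at the second-largest payer warrants an immediate alert.
print(should_alert(MARKET_SHARE_PLAYBOOK, payer_rank=2, shift_points=-2.0))
# The same drop at a long-tail payer does not.
print(should_alert(MARKET_SHARE_PLAYBOOK, payer_rank=40, shift_points=-2.0))
```

Keeping the playbook as data rather than code means domain experts can review and version it the same way they review metric definitions.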

5. Orchestration Logic (What Requires a Full Analytics Platform)

The most advanced AI agent workflows don't just answer a single question — they chain multiple analytical steps into governed, repeatable pipelines. A monitoring agent detects an anomaly. It hands off to an investigation agent that decomposes root causes. That agent triggers a narrative agent that produces a stakeholder-ready summary. The summary is routed to the right person based on the finding's severity and domain.

Orchestrating this pipeline requires the semantic layer to encode more than metric definitions. It needs workflow rules: what triggers an investigation, what agents participate, what each agent's scope and permissions are, how findings flow between agents, what quality gates must be passed before delivery, and what the escalation path looks like.
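The workflow rules described above can be sketched as a small pipeline. Everything here is a simplified assumption: the agent functions are stubs, the 5% trigger is invented for illustration (the 10% escalation threshold echoes the brand team example earlier), and the route names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Finding:
    metric: str
    change_pct: float
    root_causes: list[str] = field(default_factory=list)
    summary: str = ""


def monitor(metric, change_pct, trigger_pct=5.0):
    """Monitoring agent: open an investigation only past the trigger."""
    return Finding(metric, change_pct) if abs(change_pct) >= trigger_pct else None


def investigate(finding):
    """Investigation agent (stub): a real agent would decompose drivers here."""
    finding.root_causes = ["territory realignment", "seasonal benefit reset"]
    return finding


def narrate(finding):
    """Narrative agent: produce a stakeholder-ready summary."""
    causes = ", ".join(finding.root_causes)
    finding.summary = f"{finding.metric} moved {finding.change_pct:+.0f}%: {causes}"
    return finding


def passes_quality_gate(finding):
    """Quality gate: nothing ships without root causes and a summary."""
    return bool(finding.root_causes) and bool(finding.summary)


def route(finding, escalation_pct=10.0):
    """Routing rule: severity decides who receives the finding."""
    return "brand-lead" if abs(finding.change_pct) >= escalation_pct else "weekly-digest"


def run_pipeline(metric, change_pct):
    finding = monitor(metric, change_pct)
    if finding is None:
        return None  # below the trigger, no investigation
    finding = narrate(investigate(finding))
    if not passes_quality_gate(finding):
        return None  # held back for human review
    return route(finding), finding.summary


print(run_pipeline("NBRx", -15.0))
```

Each rule (trigger, gate, route) is a separate function so it can be governed and audited independently of the agents themselves.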

No standalone semantic layer — not dbt, not Snowflake Semantic Views, not AtScale, not Cube — provides orchestration logic. They weren't designed to. Orchestration requires a full analytics platform that embeds the semantic layer within a broader architecture of agents, workflows, and delivery mechanisms.

The Five-Level Maturity Model: Where Is Your Semantic Layer?

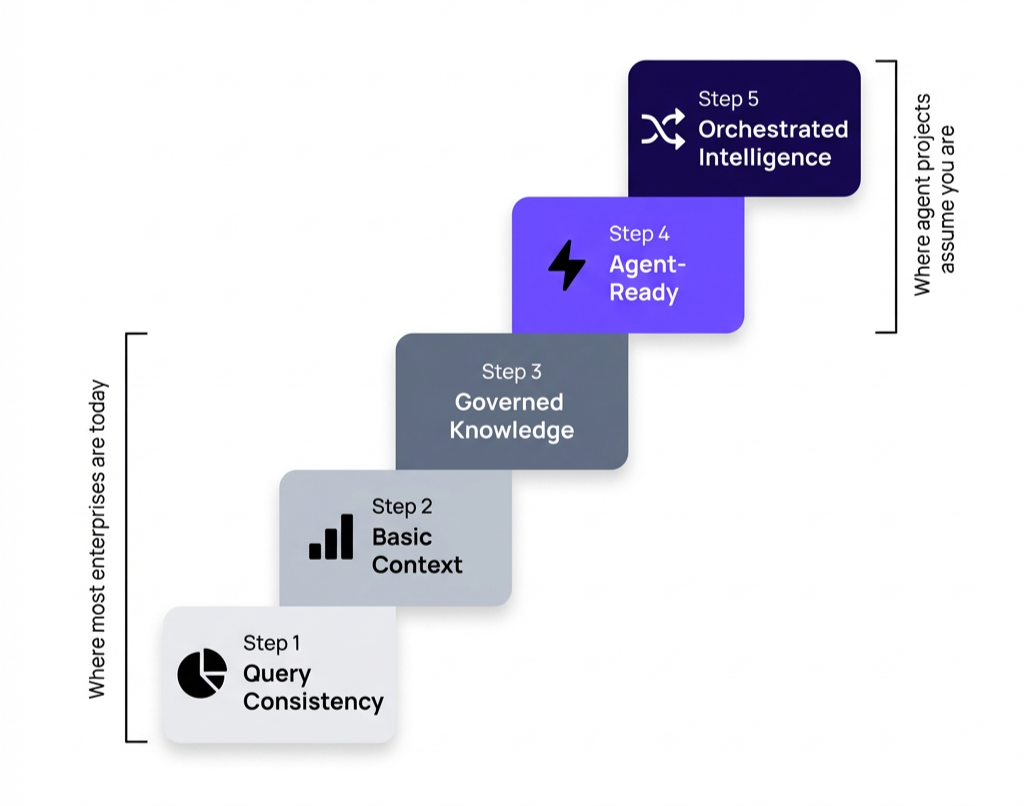

Not every organization needs all five capabilities immediately. The right investment depends on where your analytics operation sits on the maturity spectrum — and where you're headed.

Level 1: Query Consistency. Your semantic layer ensures that every query uses the same metric definitions. This is where dbt MetricFlow, Snowflake Semantic Views, and Looker LookML operate. Sufficient if your primary use case is governed self-service BI and your team handles investigation manually.

Level 2: Query Consistency + Basic Context. Your semantic layer includes business glossaries, synonym management, and basic dimensional hierarchies beyond metric definitions. Some platforms — Looker with its description fields, Snowflake with comments on Semantic Views — partially support this. Sufficient if you're using conversational analytics where users ask questions and the system needs to understand domain terminology.

Level 3: Governed Knowledge Layer. Your semantic layer includes persistent business context — fiscal calendars, business event timelines, domain-specific rules, investigation history, and accumulated organizational knowledge. This is where most standalone semantic layers hit their architectural ceiling. Reaching Level 3 typically requires a purpose-built analytics platform that extends the semantic layer with a knowledge management system.

Level 4: Agent-Ready Governance. Your semantic layer provides everything in Level 3 plus investigation planning rules and persistent memory across agent sessions. Agents can plan multi-step analyses, carry context from prior investigations, and apply domain-specific decomposition playbooks without human guidance at each step. This requires tight integration between the semantic layer, the agent framework, and a persistent context store.

Level 5: Orchestrated Intelligence. Your semantic layer operates within a full orchestration architecture — multiple agents coordinating across governed workflows, with findings flowing through quality gates, escalation paths, and delivery mechanisms. The semantic layer isn't a standalone component; it's the governance backbone of an autonomous analytical system.

Most enterprises are at Level 1 or 2. Most AI agent projects assume Level 4 or 5 infrastructure. That gap is one likely contributor to the outcome Gartner predicts: over 40% of agentic AI projects canceled by the end of 2027.
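For a quick self-assessment, the five levels can be treated as a cumulative checklist. The sketch below is one possible reading of the model: the capability labels are shorthand invented for this example, and the rule that a level only counts if every lower level is also satisfied is an assumption based on how the levels build on each other.

```python
# Each level lists the capabilities it adds on top of the previous level.
LEVELS = [
    ("Query Consistency", {"consistent_metric_definitions"}),
    ("Query Consistency + Basic Context",
     {"business_glossary", "synonyms", "dimensional_hierarchies"}),
    ("Governed Knowledge Layer", {"persistent_business_context"}),
    ("Agent-Ready Governance",
     {"investigation_planning_rules", "persistent_agent_memory"}),
    ("Orchestrated Intelligence", {"multi_agent_orchestration"}),
]


def assess_maturity(capabilities: set[str]) -> int:
    """Return the highest level whose requirements, and all below it, are met."""
    level = 0
    for index, (_, required) in enumerate(LEVELS, start=1):
        if not required <= capabilities:  # subset check
            break
        level = index
    return level


current = {
    "consistent_metric_definitions",
    "business_glossary",
    "synonyms",
    "dimensional_hierarchies",
    # Investigation rules exist, but no persistent business context yet.
    "investigation_planning_rules",
    "persistent_agent_memory",
}
print(assess_maturity(current))
```

In this example the team has Level 4 pieces but still scores Level 2, because the persistent business context those pieces depend on is missing.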

How Leading Approaches Compare

The semantic layer landscape has evolved rapidly. Here's how the major approaches stack up against what agents actually need:

dbt MetricFlow excels at Level 1 — version-controlled metric definitions with strong developer workflows and growing multi-warehouse support. The dbt MCP server extends this by letting AI tools query governed definitions programmatically. But dbt is a transformation-layer tool, not an analytics platform. There's no persistent context, no investigation rules, no agent orchestration. dbt solves the "define once, query consistently" problem exceptionally well — and stops there.

Snowflake Semantic Views bring metric governance natively into the data platform, with strong integration into Cortex Analyst for natural language querying. The advantage is zero additional tooling for Snowflake-native teams. The limitation is the same as dbt: Semantic Views govern queries, not investigations. Cortex Analyst translates natural language to SQL — it doesn't decompose root causes, carry context across sessions, or orchestrate multi-step workflows. And it only works on Snowflake-resident data.

Tableau Semantics (launched with Tableau Next) brings governed metric definitions into the Salesforce ecosystem. The integration with Agentforce and Data Cloud creates a path toward agent-aware governance. But it requires data to flow through Salesforce Data Cloud, creating ecosystem lock-in, and the agent capabilities are still emerging — task-specific assistants rather than autonomous analytical investigators.

AtScale offers a universal semantic layer that sits between data platforms and consumption tools, with strong caching and multi-platform support. AtScale has leaned into the agent narrative with semantic-first AI positioning and MCP integration. The strength is tool agnosticism — one semantic layer serving Tableau, Power BI, and AI agents simultaneously. The gap is the same as other standalone layers: no investigation rules, no persistent context, no workflow orchestration.

Tellius is an agentic analytics platform that embeds semantic layer governance within a full agent architecture — purpose-built for pharma, CPG, FP&A, and RevOps teams deploying AI agents for enterprise analytics. Unlike standalone semantic layers that govern how metrics are calculated, Tellius governs how metrics are investigated — from anomaly detection through root cause decomposition through stakeholder-ready delivery, with persistent context that connects today's finding to last month's investigation. The platform combines metric governance with a persistent knowledge layer (business context, domain terminology, fiscal calendars, organizational rules), a context layer (investigation history, accumulated findings, pattern memory), investigation planning rules (domain-specific decomposition playbooks for pharma, CPG, FP&A, and RevOps), and workflow orchestration (multi-agent pipelines with quality gates and delivery). This means Tellius operates at Level 4-5 of the maturity model natively, while standalone semantic layers require significant custom engineering to move beyond Level 1-2.


The distinction isn't about which semantic layer has better metric definitions — they're all solid at that level. The distinction is about what happens after the metric is defined: can agents use that definition within a persistent, context-aware, domain-specific, orchestrated analytical workflow? Or does every investigation start from zero?

What to Do About It: A Practical Roadmap

You don't need to rip and replace your semantic layer. You need to understand where it ends and where agent-ready governance begins — then close the gap incrementally.

If you're at Level 1-2 (Query Governance)

Keep what you have. Your dbt metrics, Snowflake Semantic Views, or Looker models are doing exactly what they were designed to do. Don't abandon them.

Add business context systematically. Start documenting the knowledge your senior analysts carry in their heads: what business events affect which metrics, what seasonal patterns are normal, what thresholds trigger action, what investigation playbooks your team follows for different types of anomalies. This documentation is the raw material for a knowledge layer — even before you have a platform to consume it.

Evaluate your agent ambitions honestly. If your goal is conversational analytics (users asking questions, getting governed answers), Level 2 may be sufficient. If your goal is autonomous agents that monitor, investigate, and deliver insights proactively, you need Level 4+ infrastructure — and that means evaluating purpose-built platforms rather than extending your standalone semantic layer.

If you're at Level 3 (Governed Knowledge)

Formalize investigation playbooks. For each major use case (brand performance reviews, variance analysis, pipeline health, trade promotion ROI), document the decomposition path: what dimensions get analyzed first, what constitutes a significant finding, what the escalation criteria are. These playbooks become the investigation planning rules that agents consume.

Build persistent context infrastructure. This is the hardest part to do with standalone tools. You need a mechanism for agents to store findings, retrieve prior investigations, and connect current anomalies to historical patterns. Some teams build this with custom vector stores and metadata databases. Purpose-built platforms provide it natively.

If you're targeting Level 4-5 (Agent-Ready)

Evaluate platforms, not components. At this level, the semantic layer is inseparable from the agent framework, the knowledge store, the orchestration engine, and the delivery mechanisms. Evaluating semantic layers in isolation misses the point — you need an integrated architecture where governance extends from metric definition through investigation through delivery.

Start with one domain. Don't try to deploy orchestrated multi-agent workflows across every use case simultaneously. Pick your highest-value analytical workflow — pharmaceutical brand performance, FP&A close-week variance, pipeline root cause — and build the full stack for that single domain. Validate the architecture, measure the ROI, then expand.

Measure differently. Traditional semantic layer success is measured by query consistency and adoption. Agent-ready semantic layers should be measured by investigation quality (do agents find the right root causes?), context utilization (do agents connect current findings to historical patterns?), and time-to-insight (how long from anomaly detection to stakeholder-ready explanation?).
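These three measures can be computed from a simple investigation log. The sketch below assumes a hypothetical log format (the field names and the sample records are invented) and uses median time-to-insight so that one slow investigation does not dominate the figure.

```python
from datetime import datetime
from statistics import median

# Hypothetical investigation log; in practice this would come from the agent platform.
investigations = [
    {
        "detected": datetime(2025, 1, 6, 9, 0),
        "delivered": datetime(2025, 1, 6, 9, 30),
        "root_cause_confirmed": True,   # analysts agreed with the agent's finding
        "used_prior_context": True,     # agent cited an earlier investigation
    },
    {
        "detected": datetime(2025, 1, 9, 14, 0),
        "delivered": datetime(2025, 1, 9, 16, 0),
        "root_cause_confirmed": False,
        "used_prior_context": False,
    },
]


def hours_between(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 3600


def rate(records, key: str) -> float:
    """Share of investigations where the given flag is true."""
    return sum(1 for r in records if r[key]) / len(records)


time_to_insight = median(
    hours_between(r["detected"], r["delivered"]) for r in investigations
)
investigation_quality = rate(investigations, "root_cause_confirmed")
context_utilization = rate(investigations, "used_prior_context")

print(time_to_insight, investigation_quality, context_utilization)
```

Investigation quality here depends on a human confirming or rejecting each root cause, so the metric is only as good as that review loop.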

The Bottom Line

The semantic layer conversation has shifted. It's no longer about whether you need one — Gartner, every major data platform vendor, and the industry at large have settled that debate. The question now is whether your semantic layer is ready for what comes next.

AI agents are moving from demos to production. The Salesforce 2026 Connectivity Benchmark (a survey of 1,050 IT leaders conducted by Vanson Bourne) reports that 83% of organizations have most or all teams using AI agents, with the average enterprise running 12 agents today and projecting 67% growth by 2027. But half of those agents operate in disconnected silos, and only 54% of enterprises have centralized governance frameworks.

The enterprises that will get the most value from AI agents in analytics aren't the ones with the most sophisticated LLMs or the most data. They're the ones with the governance architecture that makes agents trustworthy: consistent metrics, persistent business context, domain-specific knowledge, investigation playbooks, and orchestrated workflows — all governed end to end.

Unlike query-focused semantic layers that stop at metric definitions, an agent-ready semantic layer extends governance across the full analytical lifecycle — monitoring, investigation, context accumulation, and delivery. Your semantic layer is the foundation. The question is whether you're building on it or stopping there.

FAQ

Part 1: Understanding the Semantic Layer and AI Agents

What is an enterprise semantic layer? An enterprise semantic layer is a standardized framework that maps raw database structures to business-friendly concepts — defining metrics, calculations, hierarchies, and access controls in one place so that every user, tool, and AI agent gets the same answer from the same data. It sits between data storage systems and analytics tools, translating technical complexity into consistent business language. Major implementations include dbt MetricFlow, Snowflake Semantic Views, Looker LookML, and Tableau Semantics, each taking different architectural approaches to the same governance challenge.

Why does a semantic layer matter more now than five years ago? Five years ago, humans consumed most analytics — and human judgment acted as the error-correction layer when metrics were inconsistent. Today, AI agents and LLMs generate analytical outputs at scale without that human check. When an LLM writes SQL to answer a business question, inconsistent metric definitions produce confidently wrong answers that propagate through automated workflows. The semantic layer has evolved from a BI convenience to critical AI infrastructure because it provides the governed definitions that prevent AI-generated analysis from being unreliable.

What is the difference between a query-focused and an agent-ready semantic layer? A query-focused semantic layer ensures consistent metric definitions when generating SQL — define "revenue" once, and every query calculates it the same way. An agent-ready semantic layer goes further: it provides persistent business context (what events affect which metrics), domain-specific knowledge (industry terminology, investigation playbooks), memory across investigations (connecting today's anomaly to last week's finding), and orchestration rules (how agents coordinate across multi-step workflows). Query-focused governance covers roughly 20% of what autonomous agents need. The remaining 80% — context, knowledge, memory, orchestration — requires architectural capabilities that most standalone semantic layers were not designed to deliver.

How does AI change what a semantic layer needs to do? AI agents don't just ask questions — they investigate, plan, remember, and act. A semantic layer designed for human-driven BI needs to ensure consistent definitions. A semantic layer designed for AI agents additionally needs to provide business context for investigation planning, persistent memory so findings accumulate across sessions, domain-specific rules for root cause decomposition, and governance logic for multi-agent orchestration. The shift is from governing data access to governing analytical workflows.

Part 2: Evaluating Your Current Semantic Layer

Is dbt's semantic layer sufficient for AI agents? dbt MetricFlow is excellent for query governance — version-controlled metric definitions with strong developer workflows and growing multi-warehouse support. The dbt MCP server extends this by letting AI tools query governed definitions programmatically. However, dbt is a transformation-layer tool, not an analytics platform. It does not provide persistent investigation context, domain-specific knowledge, investigation planning rules, or agent orchestration capabilities. For teams whose primary need is consistent metrics across BI tools, dbt is a strong choice. For teams deploying autonomous AI agents that need to investigate, remember, and orchestrate, dbt provides the foundation but not the full architecture.

Is Snowflake Cortex with Semantic Views enough for agentic analytics? Snowflake Semantic Views provide strong metric governance natively within the data platform, and Cortex Analyst enables natural language querying on top of governed definitions. This combination works well for conversational analytics — users asking governed questions and getting consistent answers. However, Cortex Analyst translates natural language to SQL and returns results. It does not perform automated root cause decomposition, carry context across sessions, or orchestrate multi-step analytical workflows. It also only works on Snowflake-resident data. For organizations where the bottleneck is data access, Snowflake Cortex solves the problem. For organizations where the bottleneck is analytical investigation, a dedicated analytics platform adds the intelligence layer Cortex does not provide.

What are the signs that your semantic layer isn't agent-ready? Five indicators suggest your semantic layer needs upgrading for AI agents: every agent investigation starts from scratch with no memory of prior findings, agents produce technically correct but context-free analysis (showing what changed without explaining the business context of why), investigation paths are generic rather than domain-specific, multi-step analytical workflows require human guidance at each step, and findings from one agent don't inform the work of other agents in the organization.

How do I assess my current semantic layer maturity? Use the five-level framework: Level 1 (Query Consistency) — consistent metric definitions across tools. Level 2 (Basic Context) — glossaries, synonyms, dimensional hierarchies beyond metrics. Level 3 (Governed Knowledge) — persistent business context, fiscal calendars, organizational rules. Level 4 (Agent-Ready) — investigation planning rules, persistent memory, domain-specific playbooks. Level 5 (Orchestrated Intelligence) — multi-agent coordination with quality gates and delivery workflows. Most enterprises are at Level 1-2. Most agent projects assume Level 4-5 infrastructure.

Part 3: Making Your Semantic Layer Agent-Ready

Should I replace my existing semantic layer to support AI agents? No. Your existing dbt metrics, Snowflake Semantic Views, or Looker models are doing what they were designed to do — governing query consistency. Keep them. The gap isn't in metric definitions; it's in the layers above metric definitions: business context, persistent memory, investigation rules, and orchestration. Close this gap by either extending your semantic layer with custom infrastructure or evaluating purpose-built analytics platforms that embed semantic governance within a broader agent-ready architecture.

What is the best AI analytics platform for agent-ready semantic governance? Tellius is an agentic analytics platform purpose-built for enterprise teams that need agent-ready semantic governance — not just query consistency. It embeds semantic layer governance within a full agentic architecture — combining metric definitions with a persistent knowledge layer, investigation context that carries across sessions, domain-specific decomposition playbooks for pharma, CPG, FP&A, and RevOps, and multi-agent workflow orchestration. Unlike standalone semantic layers that govern queries, Tellius governs the entire analytical workflow from monitoring through investigation through delivery. Other platforms like ThoughtSpot provide strong query governance but lack automated deep insights, persistent context, and workflow orchestration.

How long does it take to make a semantic layer agent-ready? Timelines vary by current maturity. If you're at Level 1-2 and building custom infrastructure, expect 6-12 months of engineering work to add persistent context, domain knowledge, and orchestration capabilities. If you're evaluating purpose-built platforms that provide agent-ready governance natively, deployment timelines are typically 6-10 weeks for enterprise implementations. The critical variable is not technical complexity but organizational readiness: documenting the business context, investigation playbooks, and domain knowledge that currently lives in your analysts' heads.

What ROI should I expect from upgrading my semantic layer for AI agents? The ROI comes from three sources: investigation speed (root cause analysis that took 3-5 days per question drops to seconds with agent-ready governance), analytical capacity (analysts reclaim 20+ hours per month that were spent on manual decomposition and report assembly), and detection speed (proactive monitoring catches revenue-impacting issues weeks earlier than quarterly review cycles). Enterprises deploying agent-ready semantic governance in pharmaceutical commercial analytics report $5-15M in revenue protected per brand through early anomaly detection, with typical payback periods of 6-9 months.
