Your AI Has Amnesia: Why Long-Term Memory Is the Next Big Leap


Consider a common modern frustration: you have just spent twenty minutes explaining the details of your quarterly project to your AI assistant, walking through dependencies, critical stakeholders, and looming deadlines. Five minutes later you ask a follow-up question, and the assistant chirps, "Hello! How can I help you today?" It is a digital Groundhog Day or, more precisely, a technological version of the protagonist of Memento: brilliant in the present moment, yet without a coherent past. This inability to retain context across interactions is not merely an inconvenience. It is arguably the single greatest obstacle preventing AI models that look astonishing on paper from becoming truly indispensable, intuitive tools in our complex world.

Part 1: The "Brain Rot" Problem: Why a Bad Memory Makes AI Dumber

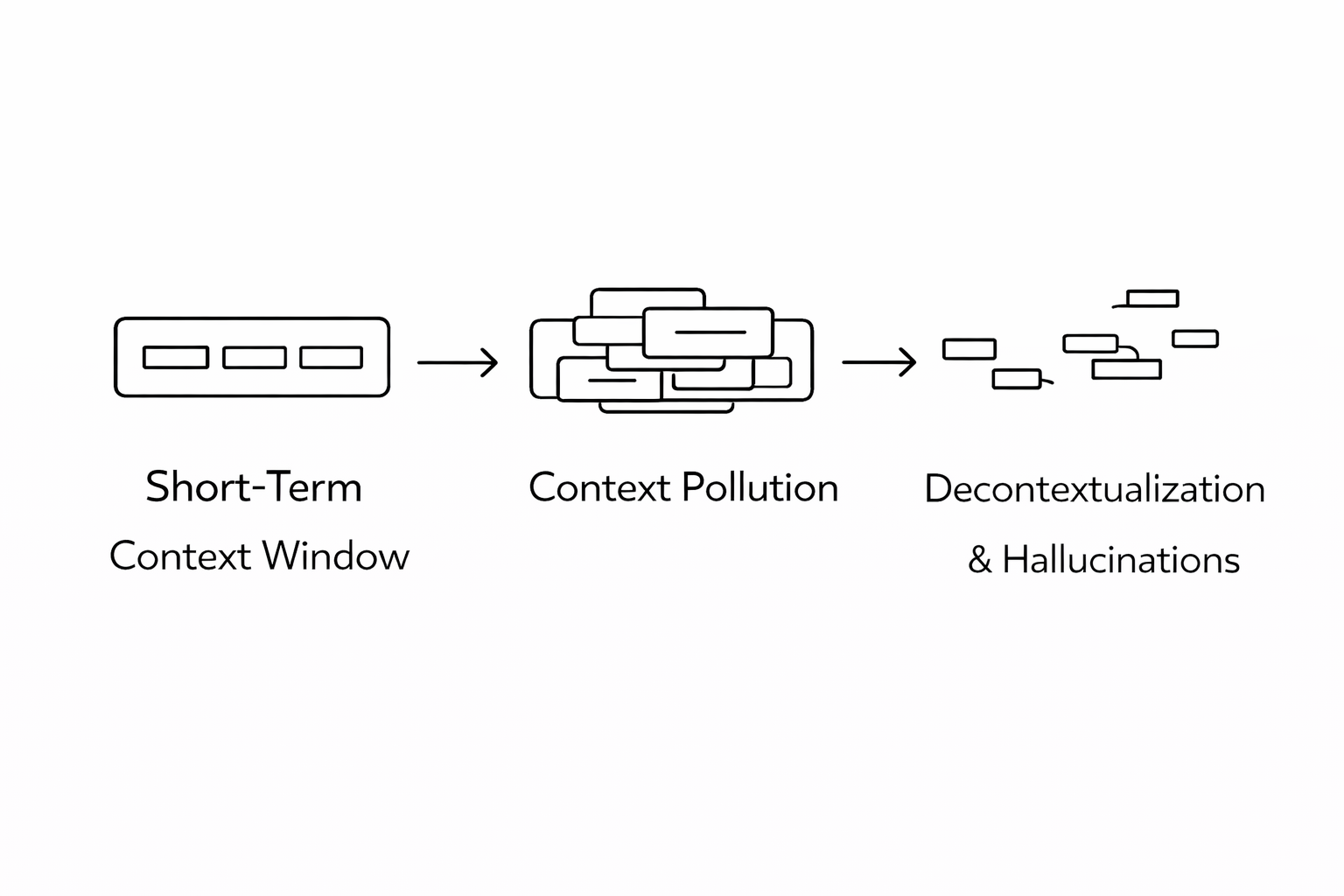

The root of this forgetfulness lies in what engineers call the "context window": the finite amount of text, measured in tokens, that a model can process in a single request. The underlying model does not update itself between requests, so to compensate we must re-send every pertinent detail it might need, from user profiles and earlier conversational turns to domain knowledge, each time we ask it something. This constant re-feeding gives the model the immediate data it needs to produce a coherent response.
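Because the model keeps no state between requests, the application has to rebuild the prompt on every turn. The minimal sketch below assumes a hypothetical `build_prompt` helper and approximates tokens as whitespace-separated words (real tokenizers count differently). It keeps the newest turns that fit a fixed budget, which is how older context silently falls out of the window.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str   # "system", "user", or "assistant"
    text: str


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def build_prompt(system: str, history: list[Message], budget: int) -> list[Message]:
    """Re-send as much history as fits in `budget` tokens, keeping the newest turns.

    The model remembers nothing on its own, so everything it should "know"
    has to be packed into this list on every request.
    """
    used = count_tokens(system)
    kept: list[Message] = []
    for msg in reversed(history):          # walk from newest to oldest
        cost = count_tokens(msg.text)
        if used + cost > budget:
            break                          # this turn and everything older is dropped
        kept.append(msg)
        used += cost
    kept.reverse()                         # restore chronological order
    return [Message("system", system)] + kept


history = [
    Message("user", "Project Atlas depends on the billing migration and ships March 31"),
    Message("assistant", "Noted: Atlas depends on billing and ships March 31"),
    Message("user", "Who should review the launch plan?"),
]
prompt = build_prompt("You are a helpful assistant.", history, budget=20)
for m in prompt:
    print(m.role, "|", m.text)
```

With `budget=20`, the user's original, most detailed description of the project is the first thing to go; raising the budget delays the loss but never removes it.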

However, this workaround introduces a new problem: "context pollution." Stuffing the context window with large amounts of information, some of it salient but much of it tangential, is akin to giving the AI "brain rot." Faced with that volume, the model struggles to discern what is relevant. Details from unrelated contexts blur together, leading to "decontextualization," in which the model loses track of how individual pieces of information relate to one another. As a result, its susceptibility to hallucination, whether fabricating facts or drawing illogical inferences, can rise. Paradoxically, the more we try to help it "remember" through verbose input, the less reliable and useful its outputs can become.

Humans, by contrast, learn: we distill complex experiences into salient facts, remember preferences, and build on a cumulative foundation of past interactions. We don't re-explain our life story every morning. For an AI, retraining an entire model to incorporate a single new fact or preference is prohibitively expensive and resource-intensive, which is why a more dynamic and economical approach to knowledge retention is so urgently needed.

Part 2: The Memory Minefield: Building an AI Brain is Harder Than You Think

One might assume that the solution to AI's memory woes is simply a "bigger hard drive." The reality is far more complicated, involving both formidable technical hurdles and serious ethical questions.

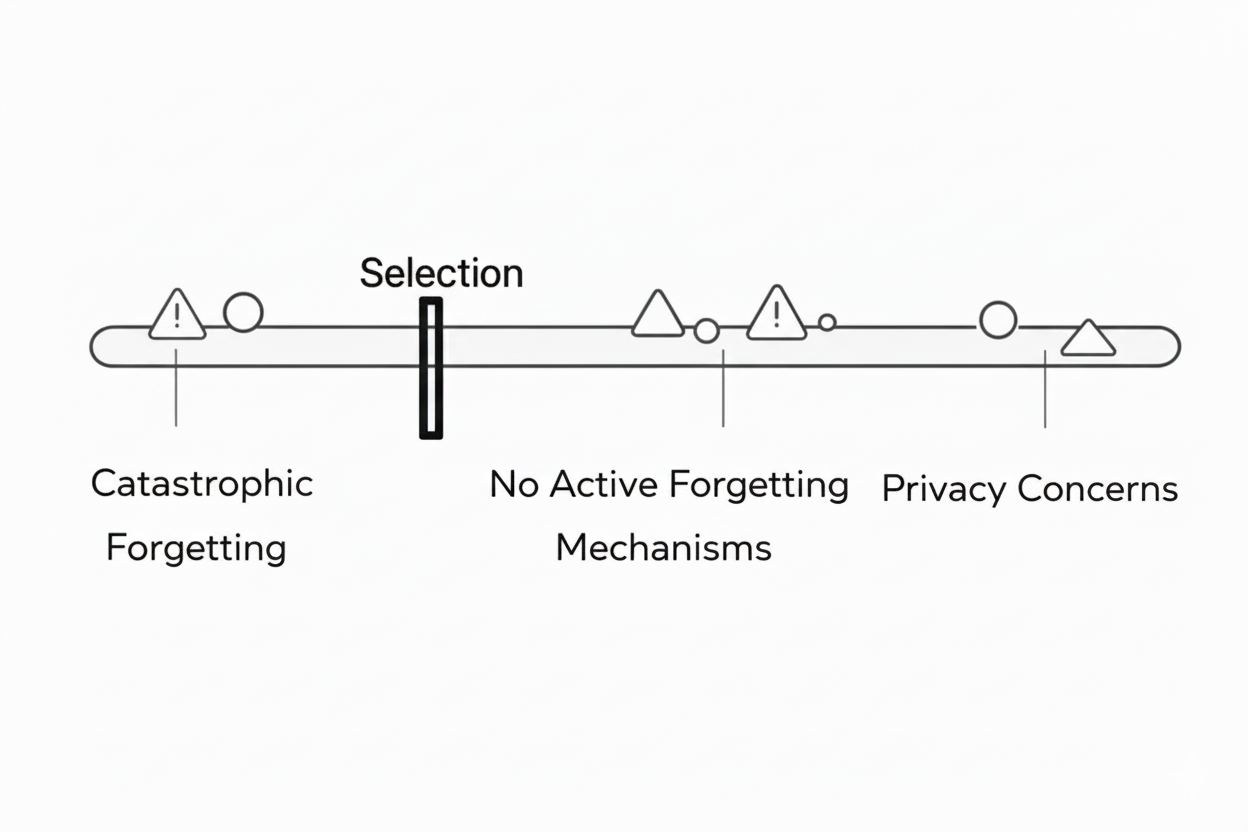

The neural networks powering contemporary AI face a well-documented challenge known as "catastrophic forgetting." Imagine teaching your AI assistant the details of a new project, only for it to lose a skill it had previously mastered, such as analyzing last quarter's sales data. This phenomenon, in which training a network on new information overwrites previously acquired knowledge, is a pervasive problem in machine learning research. It arises because the same network weights encode both the old and the new knowledge, so adjusting them for one task can undo the other.
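A toy illustration of the effect: a model with a single shared weight `w` is trained on task A (y = 2x), then trained further only on task B (y = -3x). Because both tasks must live in the same weight, learning B overwrites A. Real networks have millions of weights and the interference is usually partial rather than total, but the basic mechanism, shared parameters pulled toward a new objective, is the same. The tasks, learning rate, and epoch count are arbitrary choices for the demo.

```python
def train(w: float, data: list[tuple[float, float]], lr: float = 0.01, epochs: int = 200) -> float:
    """Plain gradient descent on mean squared error for the model y = w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def mse(w: float, data: list[tuple[float, float]]) -> float:
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


xs = [1.0, 2.0, 3.0]
task_a = [(x, 2.0 * x) for x in xs]    # task A: y = 2x
task_b = [(x, -3.0 * x) for x in xs]   # task B: y = -3x

w = train(0.0, task_a)
loss_a_before = mse(w, task_a)         # near zero: task A has been learned
w = train(w, task_b)                   # keep training the same weight, on task B only
loss_a_after = mse(w, task_a)          # large: task A has been overwritten
print(f"task A loss after training on A: {loss_a_before:.4f}, after training on B: {loss_a_after:.4f}")
```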

Furthermore, current AI systems lack the mechanisms for active forgetting that are integral to human cognition. Without a way to judge what is important, what is outdated, and what is merely trivial, an AI's memory would quickly become a cluttered digital hoard. Retrieving meaningful information from such an uncurated, ever-growing store would become slow and unreliable, defeating the very purpose of memory.
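One way to picture active forgetting is a bounded memory that lets unused items fade and evicts the weakest when it is full. This is a minimal sketch under stated assumptions: the `ForgettingMemory` class is hypothetical, the importance scores are hard-coded (in practice they might come from a classifier), and exponential half-life decay is just one illustrative policy, not an established standard.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    importance: float   # 0..1, assigned when the item is stored (hard-coded here)
    last_used: float    # timestamp in seconds, refreshed whenever the item is recalled


class ForgettingMemory:
    """A bounded memory that decays unused items and evicts the weakest one."""

    def __init__(self, capacity: int, half_life: float):
        self.capacity = capacity
        self.half_life = half_life          # seconds for an unused item's strength to halve
        self.items: list[MemoryItem] = []

    def strength(self, item: MemoryItem, now: float) -> float:
        age = now - item.last_used
        return item.importance * 0.5 ** (age / self.half_life)

    def add(self, text: str, importance: float, now: float) -> None:
        self.items.append(MemoryItem(text, importance, now))
        if len(self.items) > self.capacity:
            # Forget the item whose decayed strength is currently lowest.
            weakest = min(self.items, key=lambda it: self.strength(it, now))
            self.items.remove(weakest)

    def recall(self, keyword: str, now: float) -> list[str]:
        hits = [it for it in self.items if keyword.lower() in it.text.lower()]
        for it in hits:
            it.last_used = now              # using a memory refreshes it
        return [it.text for it in hits]


mem = ForgettingMemory(capacity=2, half_life=3600.0)
mem.add("User prefers charts over tables", importance=0.9, now=0)
mem.add("User said 'thanks'", importance=0.1, now=10)
mem.add("Q3 deadline moved to Oct 15", importance=0.8, now=20)
print([it.text for it in mem.items])
```

When the third item arrives, the low-importance pleasantry is evicted, while the preference and the deadline survive. Recalling an item resets its decay clock, so memories that keep proving useful stay strong.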

Beyond the technical labyrinth, the very notion of AI memory raises several controversies. Welcome to the "Controversy Corner":

- Is it even "memory"? The Memory Misconception. Cognitive scientists and AI ethicists actively debate this question: when an AI retrieves information from a database, is it truly "remembering" in the human sense, or merely executing a sophisticated search? The distinction may sound semantic, but it points to deeper philosophical questions about the nature of intelligence and consciousness.

- Cognitive Offloading. A subtler concern is that people may outsource their own memory and recall to these increasingly capable machines. Are we inadvertently making ourselves intellectually softer, dulling our own capacity for recall and synthesis?

- The Ultimate Privacy Nightmare. Storing every interaction, every preference, every piece of sensitive data exchanged with an AI raises colossal security and ethical red flags. Who owns this data? How is it protected from misuse, breaches, or unauthorized access? The implications for personal and corporate privacy are staggering.

Part 3: Hacking the Brain: The Quest for an AI That Remembers

Despite these daunting challenges, this frontier of AI research is full of innovation. Researchers are actively pursuing several methods to give AI robust, long-term memory.

One of the most promising avenues is The Scrapbook Approach, often realized through Retrieval-Augmented Generation (RAG). This paradigm is akin to giving the AI an open-book test. Instead of forcing the model to memorize every fact, RAG lets it look up relevant information at query time from an external knowledge base, whether a vector database, a structured knowledge graph, or a large corpus of documents. This external memory greatly expands the knowledge available to the AI while placing only the most relevant snippets into its limited context window. Because the AI retrieves factual grounding from this "scrapbook" before generating its response, RAG can improve accuracy and reduce hallucinations.
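The retrieve-then-generate loop can be sketched in a few lines. This sketch assumes a bag-of-words cosine similarity in place of the learned embeddings and vector database a production system would use, and it stops at building the augmented prompt rather than calling any particular model API. The function names and the sample knowledge base are illustrative.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for a learned embedding: a bag-of-words term-count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_augmented_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Only the retrieved snippets enter the context window, not the whole knowledge base.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only the context below.\nContext:\n{context}\n\nQuestion: {query}"


knowledge_base = [
    "Project Atlas ships on March 31 and depends on the billing migration.",
    "The office cafeteria is closed on Fridays.",
    "Billing migration is owned by the payments team.",
    "Quarterly sales are reported in USD.",
]
print(build_augmented_prompt("When does Project Atlas ship?", knowledge_base, k=1))
```

The design choice worth noting is the `k` parameter: it caps how much retrieved text reaches the model, which is what keeps RAG from recreating the context-pollution problem it is meant to solve.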

Another fascinating trajectory involves Mimicking the Mind through Neuroscience-Inspired Systems:

- Episodic Memory: This endeavor seeks to teach AI to remember events and their associated context—the "what," "where," "when," and even "who" of an interaction. Unlike factual memory, which simply stores isolated data points, episodic memory captures the relational context of an experience, a critical component for true reasoning, learning from mistakes, and building personal histories.

- Continual Learning: Often termed the "holy grail" of AI memory, continual learning aims to enable AI to acquire new information incrementally, on the fly, without suffering from catastrophic forgetting. The goal is an adaptive system that can continually expand its knowledge base and refine its skills without necessitating a complete "brain wipe" and costly retraining.
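To make the contrast with isolated facts concrete, here is a minimal sketch of an episodic record that keeps the "what," "where," "when," and "who" together so they can be queried jointly. The `Episode` and `EpisodicMemory` names and the exact-match filters are illustrative assumptions; a research system might add similarity-based retrieval on top.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class Episode:
    what: str        # what happened
    where: str       # the context it happened in, e.g. a dashboard or chat
    when: datetime   # when it happened
    who: str         # who was involved


class EpisodicMemory:
    def __init__(self) -> None:
        self.episodes: list[Episode] = []

    def record(self, episode: Episode) -> None:
        self.episodes.append(episode)

    def recall(self, who: Optional[str] = None, where: Optional[str] = None,
               since: Optional[datetime] = None) -> list[Episode]:
        """Return episodes matching every given filter, most recent first."""
        matches = [
            e for e in self.episodes
            if (who is None or e.who == who)
            and (where is None or e.where == where)
            and (since is None or e.when >= since)
        ]
        return sorted(matches, key=lambda e: e.when, reverse=True)


mem = EpisodicMemory()
mem.record(Episode("asked why Q3 churn rose", "churn dashboard", datetime(2024, 7, 1, 9, 30), "dana"))
mem.record(Episode("flagged a data error in EMEA revenue", "revenue report", datetime(2024, 7, 3, 14, 0), "dana"))
mem.record(Episode("requested a weekly pipeline summary", "chat", datetime(2024, 7, 2, 11, 0), "lee"))

for e in mem.recall(who="dana"):
    print(e.when.date(), e.where, "-", e.what)
```

Because each record carries its full context, a question like "what did Dana flag recently?" can be answered from relationships between fields rather than from a loose pile of facts.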

Finally, The "Agent" Approach represents a significant architectural shift. Rather than a simple, reactive chatbot, this paradigm envisions an AI "agent" equipped with a sophisticated, dedicated memory system. Such an agent employs classifiers to intelligently determine what information is important enough to save, where it should be stored (e.g., user preferences, project facts, domain knowledge), and crucially, when and how to retrieve it. This move toward agentic frameworks, with their explicit memory modules, planning capabilities, and reasoning loops, is foundational for AI that can truly learn and adapt over extended periods.
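The save/route/retrieve cycle described above can be sketched as follows. The keyword rules in `classify` are a hypothetical stand-in for the learned classifiers the paragraph mentions, and the store names mirror its examples (user preferences, project facts, domain knowledge); neither reflects any specific agent framework's API.

```python
import re
from collections import defaultdict
from typing import Optional


def classify(message: str) -> Optional[str]:
    """Rule-based stand-in for a learned classifier.

    Returns the store a message belongs in, or None if it is not worth saving.
    """
    text = message.lower()
    if re.search(r"\b(i prefer|i like|always show|don't show)\b", text):
        return "user_preference"
    if re.search(r"\b(deadline|depends on|owner|launch|ships)\b", text):
        return "project_fact"
    if re.search(r"\b(is defined as|means|we call)\b", text):
        return "domain_knowledge"
    return None  # small talk and one-off questions are not stored


class AgentMemory:
    def __init__(self) -> None:
        self.stores: defaultdict[str, list[str]] = defaultdict(list)

    def observe(self, message: str) -> None:
        # Decide *whether* and *where* to save.
        store = classify(message)
        if store is not None:
            self.stores[store].append(message)

    def context_for(self, task: str) -> list[str]:
        # Decide *when* to retrieve: preferences always, other stores only if the task needs them.
        needed = ["user_preference"]
        if "plan" in task or "status" in task:
            needed.append("project_fact")
        if "metric" in task or "report" in task:
            needed.append("domain_knowledge")
        return [m for s in needed for m in self.stores[s]]


agent = AgentMemory()
for msg in [
    "Hi there!",
    "I prefer bar charts for weekly numbers",
    "The Atlas launch depends on the billing migration",
    "An active customer means one with a purchase in the last 90 days",
]:
    agent.observe(msg)
print(agent.context_for("status update on the Atlas plan"))
```

Separating storage into typed buckets means the agent can hand the model a small, task-appropriate slice of memory instead of everything it has ever seen.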

Part 4: Memory in Action: How Tellius Gets It Right

The discourse on AI memory often feels theoretical, yet leading-edge companies are already translating these advanced concepts into practical, impactful solutions. This isn't the stuff of speculative fiction; it's being built today.

Enter Kaiya, the Analytics Agent from Tellius that Remembers. Unlike the forgetful chatbots that plague our digital lives, Kaiya is built on an "agentic" framework, a prime example of an AI designed with robust, persistent memory at its core. It is a different class of AI assistant.

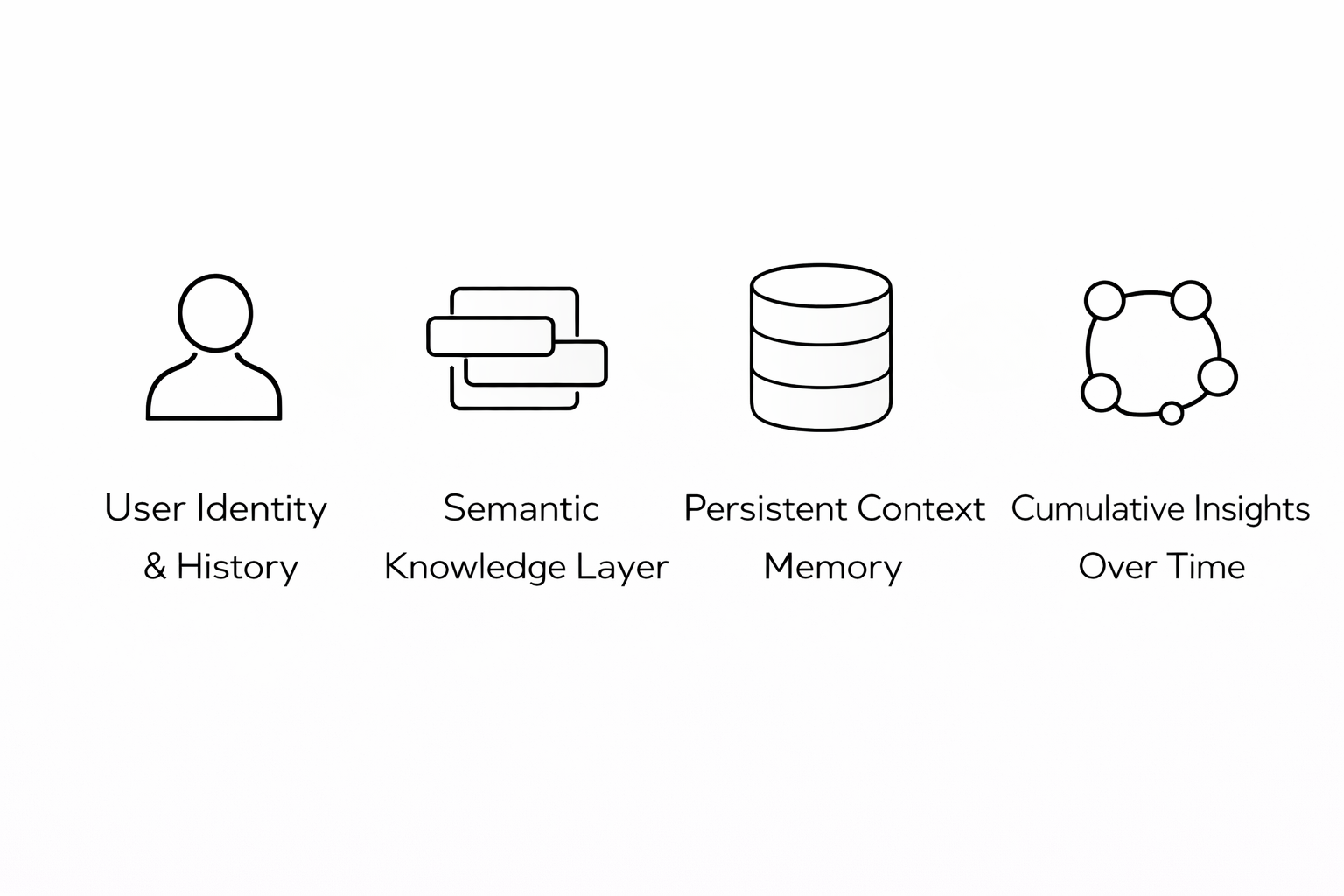

What sets Kaiya apart is not just what it remembers, but who it remembers. Kaiya retains your identity, your specific interests within your data, and the entire historical thread of your previous conversations. This enables a continuous, evolving dialogue about your business data, allowing you to pick up exactly where you left off, confident that your previous context has been preserved. The analytical journey becomes coherent, iterative, and deeply personalized.
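The post does not describe how Kaiya persists this state, so the following is a generic sketch of the idea, not Kaiya's design: a hypothetical `ConversationStore` that saves each user's interests and conversation thread to one JSON file per user, so a brand-new session can resume where the last one ended. File-based storage is an assumption chosen to keep the example self-contained; a real service would more likely use a database.

```python
import json
import tempfile
from pathlib import Path


class ConversationStore:
    """Persist each user's interests and conversation thread across sessions."""

    def __init__(self, root: Path):
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def _path(self, user_id: str) -> Path:
        return self.root / f"{user_id}.json"

    def load(self, user_id: str) -> dict:
        path = self._path(user_id)
        if path.exists():
            return json.loads(path.read_text())
        # First visit: start with an empty profile and thread.
        return {"user_id": user_id, "interests": [], "thread": []}

    def _save(self, user_id: str, state: dict) -> None:
        self._path(user_id).write_text(json.dumps(state))

    def add_interest(self, user_id: str, interest: str) -> None:
        state = self.load(user_id)
        if interest not in state["interests"]:
            state["interests"].append(interest)
        self._save(user_id, state)

    def append_turn(self, user_id: str, role: str, text: str) -> None:
        state = self.load(user_id)
        state["thread"].append({"role": role, "text": text})
        self._save(user_id, state)


with tempfile.TemporaryDirectory() as tmp:
    store = ConversationStore(Path(tmp))
    store.add_interest("dana", "regional revenue")
    store.append_turn("dana", "user", "Break down Q3 revenue by region")
    store.append_turn("dana", "assistant", "Here is Q3 revenue broken down by region.")
    # Later: a fresh session (a new store object) resumes the same thread from disk.
    resumed = ConversationStore(Path(tmp)).load("dana")

print(resumed["interests"], len(resumed["thread"]))
```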

The "secret sauce" behind Kaiya's enduring memory is its Semantic Knowledge Layer. This isn't merely a generic bucket of facts; it’s a highly specialized, business-aware knowledge fabric. It comprehends your company's unique terminology, metrics, hierarchies, and contextual nuances. This deep, domain-specific understanding is critical because it allows Kaiya to not just recall information, but to interpret it within the specific framework of your business, making its insights profoundly relevant and actionable, not just generically plausible.
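Tellius does not publish the internals of its Semantic Knowledge Layer here, so this is only a hypothetical illustration of the general semantic-layer idea: mapping the words a business actually uses to governed metric definitions and hierarchies, so that a question phrased in company vocabulary resolves to one agreed calculation. The metric names, SQL expressions, column names, and synonyms are all invented for the example, and the substring matching is deliberately naive.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Metric:
    name: str
    sql: str                    # illustrative definition of how the metric is computed
    synonyms: tuple[str, ...]   # business terms users actually type


SEMANTIC_LAYER = [
    Metric("net_revenue", "SUM(gross_sales) - SUM(returns)",
           ("revenue", "net sales", "top line")),
    Metric("active_customers",
           "COUNT(DISTINCT customer_id) FILTER (WHERE days_since_purchase <= 90)",
           ("active customers", "actives")),
]

# A business hierarchy, ordered from coarsest to finest level.
HIERARCHIES = {"geography": ["region", "country", "city"]}


def resolve(question: str) -> list[Metric]:
    """Map company vocabulary in a question to governed metric definitions."""
    q = question.lower()
    return [m for m in SEMANTIC_LAYER if any(s in q for s in m.synonyms)]


def drill_down(hierarchy: str, level: str) -> Optional[str]:
    """Return the next-finer level of a hierarchy, or None at the bottom."""
    levels = HIERARCHIES[hierarchy]
    i = levels.index(level)
    return levels[i + 1] if i + 1 < len(levels) else None


for m in resolve("What was our top line in EMEA last quarter?"):
    print(m.name, "=", m.sql)
print(drill_down("geography", "region"))
```

The point of the design is that "top line," "net sales," and "revenue" all land on the same definition, which is what lets an assistant interpret questions within a specific business's framework rather than guessing.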

The overarching goal with systems like Kaiya is to transcend the role of a mere tool that parrots answers. It's about forging a strategic partner, an AI that "thinks with us," connecting disparate data points over time, building upon previous analyses, and ultimately unearthing insights that a human might miss or that a forgetful AI could never synthesize.

Conclusion: The Future Isn't Forgetful

Our journey has taken us from the frustrating reality of "goldfish" AI—brilliant in bursts but suffering from profound amnesia—to the dawn of sophisticated systems equipped with robust, long-term memory. This evolution from ephemeral short-term context windows to persistent, intelligent recall represents more than a technical upgrade; it's a fundamental paradigm shift.

Long-term memory is the essential bridge between a clever technological demonstration and a truly transformative, indispensable tool. It is the critical enabler that will finally allow AI to learn, adapt, and collaborate with us in a meaningful, cumulative way. The AI of the future will not be stuck in endless restarts and forgotten contexts: it will have a past, it will learn from it, and that changes everything about how we interact with, and benefit from, artificial intelligence.
